Meet the First Recipients of FLI’s New Grants Program

This month we are delighted to announce the inaugural winners of our new AI safety research grants, funded by Vitalik Buterin. From almost 100 applications, an external review panel of 25 leading academics selected eight PhD Fellows and one Postdoctoral Fellow. Let’s meet them!

PhDs

The Vitalik Buterin PhD Fellowship in AI Existential Safety is for students starting PhD programs in 2022 who plan to work on AI existential safety research, or for existing PhD students who would not otherwise have funding to work on AI existential safety research. It will fund students for five years of their PhD, with extension funding possible. At universities in the US, UK, or Canada, annual funding will cover tuition, fees, and the stipend of the student’s PhD program up to $40,000, as well as a fund of $10,000 that can be used for research-related expenses such as travel and computing. At universities not in the US, UK, or Canada, the stipend amount will be adjusted to match local conditions. Fellows will also be invited to workshops where they will be able to interact with other researchers in the field.

These are the PhD Fellows this year, and their research focuses (pictures above, clockwise starting top left):

Usman Anwar – Research on inverse constrained reinforcement learning, impact regularizers, and distribution generalization, at the University of Cambridge.

Stephen Casper – Research on interpretable and robust AI at Massachusetts Institute of Technology (MIT).

Xin Cynthia Chen – Research on safe reinforcement learning at ETH Zurich.

Erik Jenner – Research on developing principled techniques for aligning even very powerful AI systems with human values at UC Berkeley.

Johannes Treutlein – Research on objective robustness and learned optimization at UC Berkeley.

Alexander Pan – Research on alignment, adversarial robustness, and anomaly detection at UC Berkeley.

Erik Jones – Research on robustness of forthcoming machine learning systems at UC Berkeley.

Zhijing Jin – Research on promoting NLP for social good and improving AI by connecting NLP with causality, at Max Planck Institute.

Postdoctoral Fellowship

The Vitalik Buterin Postdoctoral Fellowship in AI Existential Safety is designed to support promising researchers for postdoctoral appointments starting in the fall semester of 2022 to work on AI existential safety research. Funding is for three years, subject to annual renewals based on satisfactory progress reports. For host institutions in the US, UK, or Canada, the Fellowship includes an annual $80,000 stipend and a fund of up to $10,000 that can be used for research-related expenses such as travel and computing. At universities not in the US, UK, or Canada, the fellowship amount will be adjusted to match local conditions.

Nisan Stiennon is our first Postdoctoral Fellow for this program; Nisan is researching agent foundations at UC Berkeley’s Center for Human-Compatible AI (CHAI).

Find out more about these amazing fellows and their research interests. We intend to run these fellowships again this coming fall. Any questions about the programs can be sent to grants@futureoflife.org.

Outreach

2022 Future of Life Award Announcement Coming Soon

On August 6th, FLI will announce the 2022 winners of the Future of Life Award. Watch our new video to find out what this award is all about, and hear the stories of our previous winners. The award, funded by FLI co-founder Jaan Tallinn, is a $50,000/person prize given to individuals who, without having received much recognition at the time, have helped make today’s world dramatically better than it might otherwise have been. Follow the award countdown so you don’t miss the announcement of the winners next week!

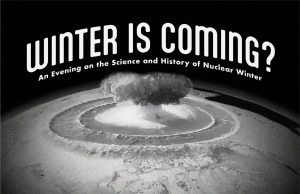

FLI Event in New York on Hiroshima Day

Join us at Pioneer Works in Brooklyn, New York, on 6th August, the 77th anniversary of the bombing of Hiroshima, to explore a chilling but fascinating topic: the latest science of nuclear winter, and how to reduce this risk by improving awareness of it. We’ll be celebrating the heroes who discovered and spread the word about the shocking scientific prediction of nuclear winter: that firestorms set off by a major nuclear war would envelop the earth in soot and smoke, blocking sunlight for years, sending global temperatures plunging, ruining ecosystems and agriculture, and killing billions through famine.

One panel, featuring nuclear winter pioneers Alan Robock, Brian Toon and Richard Turco and moderated by FLI’s Emilia Javorsky, will discuss the most up-to-date findings about nuclear winter. The other, featuring nuclear winter pioneers John Birks and Georgiy Stenchikov as well as Ann Druyan, the award-winning American documentary producer and director who co-wrote Cosmos with her late husband Carl Sagan, will explore the challenges involved in communicating this risk to politicians and the public, from the 1980s to today. The specter of nuclear war still hangs over us, and current geopolitics has made it more threatening than ever. So come along and hear about it from the experts. Get your tickets here.

Policy

Senior Job Opening on the FLI Policy Team

We have another new job opening at the Future of Life Institute, and it’s a big one: We’re looking for a new Director of U.S. Policy, based in Washington, D.C., Sacramento, or San Francisco. FLI has recently grown to about 20 staff and we anticipate continued growth across our activities of outreach, grant making, and advocacy. The Director of U.S. Policy plays a pivotal role in the organisation, and we are looking for a driven individual with substantial experience to fill this position. If you think you’re up to it, or know someone who is, take a look at the full information.

FLI AI Director Richard Mallah Co-Organises AI Safety Workshop

On July 24th-25th, Richard Mallah, Director of AI Projects at FLI, co-organised the ‘AISafety 2022’ workshop at the 31st International Joint Conference on Artificial Intelligence and the 23rd European Conference on Artificial Intelligence (IJCAI-ECAI 2022), in Vienna. The workshop sought ‘to explore new ideas on safety engineering, as well as broader strategic, ethical and policy aspects of safety-critical AI-based systems’. Speakers included scientist and ‘Rebooting AI’ author Gary Marcus; Elizabeth Adams, of the Stanford University Institute for Human-Centered AI, USA; and Prof. Dr. Thomas A. Henzinger, of the Institute of Science and Technology, Austria.