
FLI December 2021 Newsletter

FLI Launches a New Film:

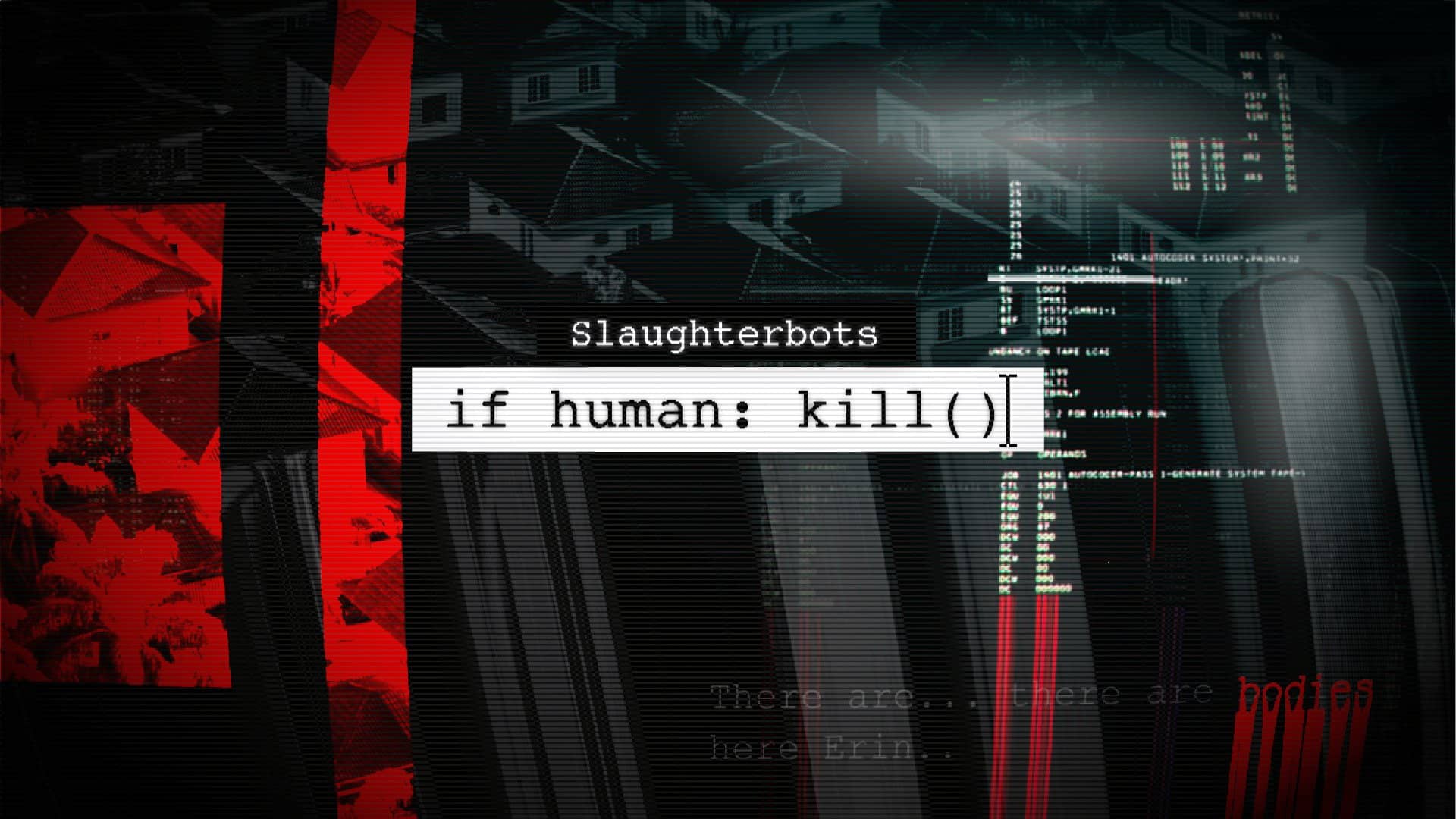

Slaughterbots – if human: kill()

Slaughterbots – if human: kill() follows up on FLI’s award-winning short film Slaughterbots, which went viral in 2017. The new film depicts a dystopian future in which lethal autonomous weapons have been allowed to become the tool of choice not just for militaries but for any group seeking to inflict scalable violence on a specific group, individual, or population.

When FLI first released Slaughterbots in 2017, some criticized the scenario as unrealistic and technically unfeasible. Since then, however, slaughterbots have been used on the battlefield, and similar, easy-to-make weapons are now in development, marking the start of a global arms race that as yet faces no legal restrictions.

The new film also lays out a concrete path to avoiding the outcome it warns against. Its vision for action is based on the real-world policy prescription of the International Committee of the Red Cross (ICRC), an independent, neutral organisation that plays a leading role in developing and promoting the laws that regulate the use of weapons. A central tenet of the ICRC’s position is the need to adopt a new, legally binding prohibition on autonomous weapons that target people. FLI agrees with the ICRC’s most recent recommendation that the time has come to adopt legally binding rules on lethal autonomous weapons through a new international treaty.

FLI’s new film has been watched by over 10 million people across YouTube, Facebook and Twitter, and has received substantial media coverage from outlets such as Axios, Forbes, BBC World Service’s Digital Planet, BBC Click, Popular Mechanics, and Prolific North. Politico later recommended the film as the best way for readers to understand for themselves the dangers posed by slaughterbots and what can be done to prevent them. This puts the film in a strong position to influence United Nations delegates next week, when they meet at the review conference of the Convention on Certain Conventional Weapons (CCW). In the meantime, you can still impress upon your national delegates the importance of choosing humanity over Slaughterbots by signing Amnesty International’s new petition.

Other Policy & Outreach Efforts

Policy Advocacy for Banning Slaughterbots

Help us find our next unsung hero


Nominations for next year’s Future of Life Award are officially open. For those unfamiliar with it, the award is a $50,000-per-person prize given to individuals who, without receiving much recognition at the time, helped make today dramatically better than it might otherwise have been. The award is funded by Skype co-founder Jaan Tallinn and presented by us, the Future of Life Institute. Past winners include Stanislav Petrov, who helped prevent an all-out US-Russian nuclear war; William Foege and Viktor Zhdanov, who played key roles in the eradication of smallpox; and, most recently, Joseph Farman, Susan Solomon and Stephen Andersen, for their work in saving Earth’s ozone layer. To nominate an unsung hero, please follow the link here. If we decide to give the award to your nominee, you will receive a $3,000 prize from us!

New Podcast Episodes

The ideas behind ‘Slaughterbots – if human: kill()’ | A deep dive interview


In support of Slaughterbots – if human: kill(), we’ve produced an in-depth interview exploring the ideas and purpose behind the new film. We interviewed Emilia Javorsky, a physician-scientist who leads FLI’s lethal autonomous weapons policy and advocacy efforts; Max Tegmark, FLI President and Professor of Physics at MIT; and Stuart Russell, Professor of Computer Science at Berkeley, Director of the Center for Intelligent Systems, and a world-leading AI researcher. They share their perspectives on particular scenes from the film and how those scenes fit into wider issues surrounding lethal autonomous weapons. We hope this deep dive into the content of Slaughterbots – if human: kill() helps to spread the film’s message of hope, and the policy solution we see as crucial for allowing AI to benefit the world’s future rather than oppress and harm it.

News & Reading

Recent Developments in Autonomous Weapons

In October, military hardware company Ghost Robotics debuted a robodog with a sniper rifle attached. Ghost Robotics later denied that the robot was fully autonomous, but it remains unclear how far its autonomous capabilities extend. Leading AI researcher Toby Walsh said he hoped the public outcry over the robodog would add ‘urgency to the ongoing discussions at the UN to regulate this space.’

Only weeks later, the Australian Army placed an order for the “Jaeger-C”, a bulletproof robotic attack vehicle with anti-tank and anti-personnel capabilities. Forbes reported that “autonomous operation… means the Jaeger-C will work even if jammed”. It also means that the vehicle can operate fully autonomously, with no human in control of its actions. The field of autonomous weaponry is advancing faster than legislation can hold it to account.

At the beginning of December, the Group of Governmental Experts (GGE) met at the UN in Geneva to discuss appropriate rules for regulating autonomous weapons systems. FLI hoped that the position of the International Committee of the Red Cross (ICRC), which calls, among other things, for a ban on autonomous weapons that target humans, would be adopted. Instead, no consensus of any kind was reached, leaving the issue wide open for the review conference of the Convention on Certain Conventional Weapons (CCW), taking place at the UN this week. Mark Brakel is attending on our behalf.

In the meantime, AI’s ability to identify targets accurately in warlike conditions remains doubtful. Defense One reports on an Air Force targeting algorithm that was thought to have a 90% success rate but turned out to be closer to 25% accurate. A ‘subtle tweak’ in conditions sent the AI’s performance into a ‘dramatic nosedive’. And while the algorithm was right only 25% of the time, ‘it was confident that it was right’ 90% of the time. FLI takes this as further evidence that humans must remain in control: not only do AIs get things wrong, they also never stop to consider whether they might be wrong, a disastrous shortcoming.

The Gathering Swarm

Following the Indian military’s drone swarm demonstration in January and the Israel Defense Forces’ (IDF) use of a swarm to find, select and attack Hamas militants in Gaza over the summer, Russian defence firm Kronshtadt has announced that it will soon debut its own drone swarm. Kronshtadt CEO Sergei Bogatikov said, ‘This is a new stage in drone control, which will make them more autonomous’; in other words, uncontrollable. Swarms represent a particularly large-scale threat because of the number of drones involved, and thus the potentially high number of human fatalities. In addition, Zachary Kallenborn pointed out on the FLI Podcast the danger that a single drone’s error could be communicated across the swarm and amplified by its collective dynamics. That so many military powers are investing in swarms represents a frightening next step in the evolution of autonomous weapon systems.

New Uses of Drones: A Sign of Things to Come

The developments above are confined to high-tech, world-leading military firms. But further afield, uses of piloted drones give a sense of the decentralised damage that autonomous quadcopters and similar devices could soon do. At the beginning of November, an attack by a “small explosive-laden drone” failed to kill Iraqi Prime Minister Mustafa al-Kadhimi. However, like the 2018 drone attack on Venezuelan President Nicolás Maduro, it demonstrated just how easily powerful new technology can fall into criminal hands. With added autonomy, it would have been harder to hold anyone accountable for such an attack, and much harder to defend against it. Al-Kadhimi would likely have died, Baghdad’s tense situation would have erupted, and Iraq’s latest chance at democratic stability might have been over. The Washington Post later, and rightly, identified the attack as emblematic of a ‘growing threat’ of drone terrorism.

Mexican cartels have also embraced aerial drones for reconnaissance, and even for attacking rival gangs and security forces. And in the same week as the Iraqi assassination attempt, WIRED ran a piece on the growing threat of drone attacks disrupting the power grid in the U.S. and elsewhere. We are seeing the emergence of a truly destabilising force, one that the addition of autonomy could make devastating, and, as Brian Barrett writes in the article, ‘not enough is being done to stop it’.

Keep an Ear Out for Stuart Russell’s Reith Lecture Series


Learning the lessons of our times

Lena Sun writes in The Washington Post that “two years into this pandemic, the world is dangerously unprepared for the next one”. A new global health security index, ranking 195 countries by their preparedness for future biological risks, reveals widespread complacency that one might have expected COVID-19 to shake up. Sun quotes epidemiologist Dr. Jennifer Nuzzo on the strange disconnect between scientific risk assessments and national responses: if “the alarms go off and your political leaders tell you, ‘Pay no attention to that alarm…’ that doesn’t mean that the fire alarms don’t work”.

FLI is a 501(c)(3) non-profit organisation, meaning donations are tax-deductible in the United States.

If you need our Employer Identification Number (EIN) for your tax return, it’s 47-1052538.

FLI is registered in the EU Transparency Register. Our ID number is 787064543128-10.