FLI November, 2016 Newsletter

Liability and the Definitional Problem of Autonomous Weapons

If you haven’t already seen it, the FLI website has a page dedicated to the AI safety researchers, their publications, and their many other achievements. We’ve also been featuring their individual projects in articles and a podcast, with special attention to autonomous weapons this month. Peter Asaro and Heather Roff sat down with FLI’s Ariel Conn to discuss some of the biggest problems we currently face with respect to autonomous weapons. Specifically, who’s liable if something goes wrong, and how can we have a real discussion about the risks of weapons that aren’t well defined?

By Ariel Conn

What, exactly, is an autonomous weapon? For the general public, the phrase is often used synonymously with killer robots and triggers images of the Terminator. But for the military, the definition of an autonomous weapons system, or AWS, is rather nebulous.

Research by Heather Roff.

Who Is Responsible for Autonomous Weapons?

By Tucker Davey

As artificial intelligence improves, governments may turn to autonomous weapons — like military robots — in order to gain the upper hand in armed conflict. How do we hold human beings accountable for the actions of autonomous systems? And how is justice served when the killer is essentially a computer?

Research by Peter Asaro.

By Ariel Conn

Heather Roff and Peter Asaro talk about their work to understand and define the role of autonomous weapons, the problems these weapons pose, and why the ethical issues surrounding them are so much more complicated than those raised by other AI systems.

Don’t forget to follow us on SoundCloud and iTunes!

What We’ve Been Up to This Month

Effective Altruism Global x Oxford

Victoria Krakovna participated in EAGx Oxford this month. She was on a panel — which included Demis Hassabis, Nate Soares, Toby Ord and Murray Shanahan — to discuss the long-term situation in AI. She also gave a talk about the story of FLI.

ICYMI: This Month’s Most Popular Articles

Complex AI Systems Explain Their Actions

By Sarah Marquart

Research by Manuela Veloso.

Cybersecurity and Machine Learning

By Tucker Davey

But what if defense systems could learn to anticipate how attackers will try to fool them?

Research by Ben Rubinstein.

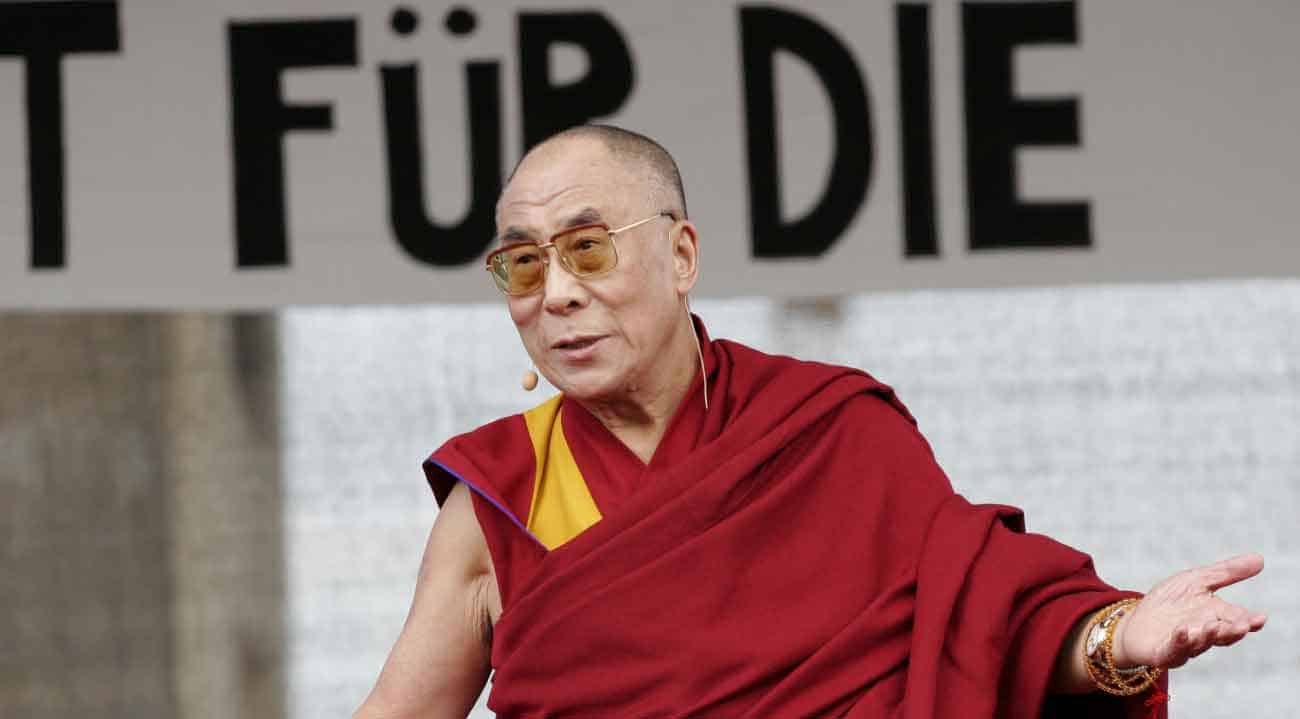

Insight from the Dalai Lama Applied to AI Ethics

By Ariel Conn

One of the primary objectives — if not the primary objective — of artificial intelligence is to improve life for all people. But an equally powerful motivator to create AI is to improve profits. These two goals can occasionally be at odds with each other. A solution may lie in the “need to be needed” expressed by the Dalai Lama in a New York Times Op-ed.

2300 Scientists from All Fifty States Pen Open Letter to Incoming Trump Administration

By The Union of Concerned Scientists

More than 2300 scientists from all fifty states, including 22 Nobel Prize recipients, released an open letter urging the Trump administration and Congress to set a high bar for integrity, transparency and independence in using science to inform federal policies.

Note From FLI:

We’re also looking for more writing volunteers. If you’re interested in writing research news articles for us, please let us know.