New report: “Leó Szilárd and the Danger of Nuclear Weapons”

From MIRI:

Today we release a new report by Katja Grace, “Leó Szilárd and the Danger of Nuclear Weapons: A Case Study in Risk Mitigation” (PDF, 72pp).

Leó Szilárd has been cited as an example of someone who predicted a highly disruptive technology years in advance — nuclear weapons — and successfully acted to reduce the risk. We conducted this investigation to check whether that basic story is true, and to determine whether we can take away any lessons from this episode that bear on highly advanced AI or other potentially disruptive technologies.

To prepare this report, Grace consulted several primary and secondary sources, and also conducted two interviews that are cited in the report and published here:

The basic conclusions of this report, which have not been separately vetted, are:

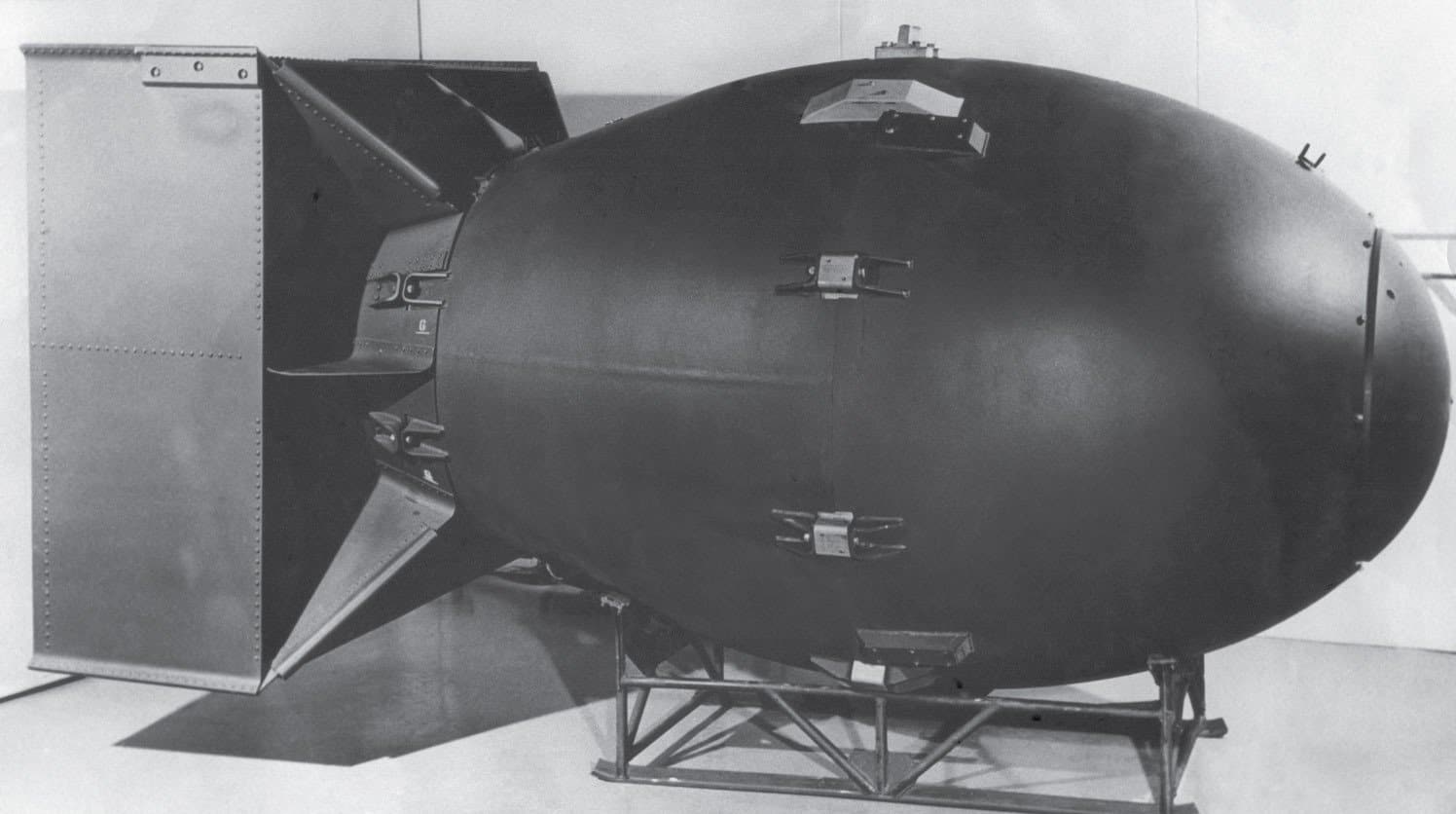

- Szilárd made several successful and important medium-term predictions — for example, that a nuclear chain reaction was possible, that it could produce a bomb thousands of times more powerful than existing bombs, and that such bombs could play a critical role in the ongoing conflict with Germany.

- Szilárd secretly patented the nuclear chain reaction in 1934, 11 years before the creation of the first nuclear weapon. It’s not clear whether Szilárd’s patent was intended to keep nuclear technology secret or bring it to the attention of the military. In any case, it did neither.

- Szilárd’s other secrecy efforts were more successful. Szilárd caused many sensitive results in nuclear science to be withheld from publication, and his efforts seem to have encouraged additional secrecy efforts. This effort largely ended when a French physicist, Frédéric Joliot-Curie, declined to suppress a paper on neutron emission rates in fission. Joliot-Curie’s publication caused multiple world powers to initiate nuclear weapons programs.

- All told, Szilárd’s efforts probably slowed the German nuclear project in expectation. This may not have made much difference, however, because the German program ended up being far behind the US program for a number of unrelated reasons.

- Szilárd and Einstein successfully alerted Roosevelt to the feasibility of nuclear weapons in 1939. This prompted the creation of the Advisory Committee on Uranium (ACU), but the ACU does not appear to have caused the later acceleration of US nuclear weapons development.
