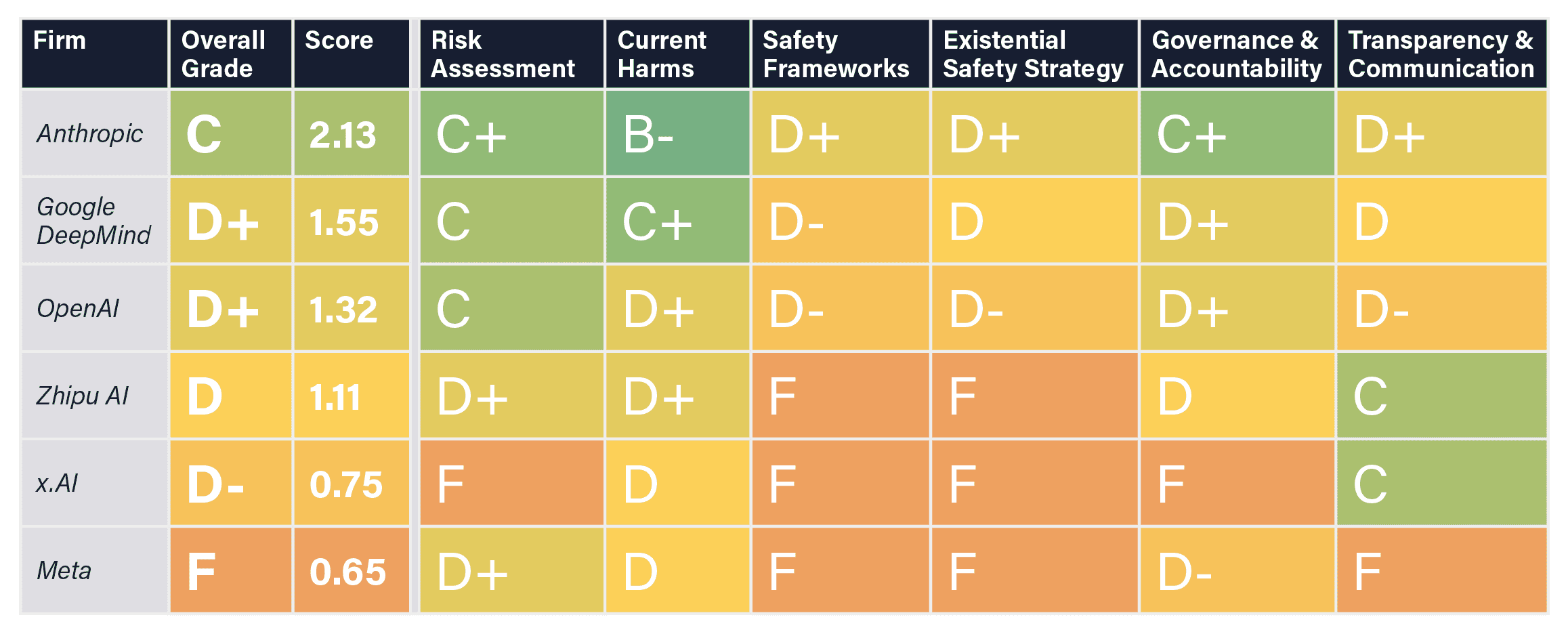

FLI AI Safety Index 2024

Seven AI and governance experts evaluate the safety practices of six leading general-purpose AI companies.

Rapidly improving AI capabilities have increased interest in how companies report, assess and attempt to mitigate associated risks. The 2024 FLI AI Safety Index therefore convened an independent panel of seven distinguished AI and governance experts to evaluate the safety practices of six leading general-purpose AI companies across six critical domains.

Scorecard

Grading: Grade boundaries follow the US GPA system. The letter grades A+, A, A-, B+, […], F correspond to the numerical values 4.3, 4.0, 3.7, 3.3, […], 0.
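As a rough illustration of how the letter-to-number scale could be applied, the sketch below converts letter grades to grade points and averages them. It is not the Index's actual scoring code. The function names `to_points` and `average_points` are hypothetical, and averaging across graders is an assumption, since the text here does not say how individual grades are combined. Only the grade points stated above are included; the values the source elides between B+ and F are left out rather than guessed.

```python
from statistics import mean

# Grade points exactly as listed in the text. The source elides the
# values between B+ and F, so they are intentionally omitted here.
GRADE_POINTS = {"A+": 4.3, "A": 4.0, "A-": 3.7, "B+": 3.3, "F": 0.0}


def to_points(letter: str) -> float:
    """Convert a letter grade to its numerical value (hypothetical helper)."""
    key = letter.strip().upper()
    if key not in GRADE_POINTS:
        raise ValueError(f"no grade point listed in the source for {letter!r}")
    return GRADE_POINTS[key]


def average_points(letters: list[str]) -> float:
    """Average several graders' letter grades (assumed aggregation rule)."""
    return mean([to_points(letter) for letter in letters])


if __name__ == "__main__":
    # Hypothetical grades from three reviewers for one indicator.
    print(average_points(["A", "B+", "F"]))
```

Raising an error for unlisted grades keeps the sketch from silently inventing values the text does not give.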

Panel of graders

Yoshua Bengio

Atoosa Kasirzadeh

David Krueger

Tegan Maharaj

Jessica Newman

Sneha Revanur

Stuart Russell

Key Findings

- Large risk management disparities: While some companies have established initial safety frameworks or conducted some serious risk assessment efforts, others have yet to take even the most basic precautions.

- Jailbreaks: All the flagship models were found to be vulnerable to adversarial attacks.

- Control problem: Despite the companies’ explicit ambitions to develop artificial general intelligence (AGI) capable of rivaling or exceeding human intelligence, the review panel deemed the current strategies of all companies inadequate for ensuring that these systems remain safe and under human control.

- External oversight: Reviewers consistently highlighted how companies were unable to resist profit-driven incentives to cut corners on safety in the absence of independent oversight. While Anthropic’s current and OpenAI’s initial governance structures were highlighted as promising, experts called for third-party validation of risk assessment and safety framework compliance across all companies.

Methodology

The Index aims to foster transparency, promote robust safety practices, highlight areas for improvement and empower the public to discern genuine safety measures from empty claims.

An independent review panel of leading experts on technical and governance aspects of general-purpose AI volunteered to assess the companies’ performance across 42 indicators of responsible conduct, contributing letter grades, brief justifications, and recommendations for improvement. Panelist selection focused on academia rather than industry to reduce potential conflicts of interest.

The evaluation was supported by a comprehensive evidence base with company-specific information sourced from 1) publicly available material, including related research papers, policy documents, news articles, and industry reports, and 2) a tailored industry survey which firms could use to increase transparency around safety-related practices, processes and structures. The full list of indicators and collected evidence is attached to the report.

Independent Review Panel

Yoshua Bengio is a Full Professor in the Department of Computer Science and Operations Research at Université de Montréal, as well as the Founder and Scientific Director of Mila and the Scientific Director of IVADO. He is the recipient of the 2018 A.M. Turing Award.

Atoosa Kasirzadeh is an Assistant Professor at Carnegie Mellon University. Previously, she was a visiting faculty researcher at Google, and a Chancellor’s Fellow and Director of Research at the Centre for Technomoral Futures at the University of Edinburgh.

David Krueger is an Assistant Professor at Université de Montréal and a Core Academic Member at Mila, UC Berkeley’s Center for Human-Compatible AI, and the Center for the Study of Existential Risk.

Tegan Maharaj is an Assistant Professor in the Department of Decision Sciences at HEC Montréal, where she leads the ERRATA lab on Ecological Risk and Responsible AI.

Sneha Revanur is the founder and president of Encode Justice, a global youth-led organization advocating for the ethical regulation of AI. TIME featured her as one of the 100 most influential people in AI.

Jessica Newman is the Director of the AI Security Initiative (AISI), housed at the UC Berkeley Center for Long-Term Cybersecurity. She is also a Co-Director of the UC Berkeley AI Policy Hub.

Stuart Russell is a Professor of Computer Science at UC Berkeley, holder of the Smith-Zadeh Chair in Engineering, and Director of the Center for Human-Compatible AI and the Kavli Center for Ethics, Science, and the Public. He co-authored the standard textbook for AI, which is used in over 1500 universities in 135 countries.

Panelist Comments

“It’s horrifying that the very companies whose leaders predict AI could end humanity have no strategy to avert such a fate,” said panelist David Krueger, Assistant Professor at Université de Montréal and a core member of Mila.

“The findings of the AI Safety Index project suggest that although there is a lot of activity at AI companies that goes under the heading of ‘safety,’ it is not yet very effective,” said panelist Stuart Russell, a Professor of Computer Science at UC Berkeley. “In particular, none of the current activity provides any kind of quantitative guarantee of safety; nor does it seem possible to provide such guarantees given the current approach to AI via giant black boxes trained on unimaginably vast quantities of data. And it’s only going to get harder as these AI systems get bigger. In other words, it’s possible that the current technology direction can never support the necessary safety guarantees, in which case it’s really a dead end.”

“Evaluation initiatives like this Index are very important because they can provide valuable insights into the safety practices of leading AI companies. They are an essential step in holding firms accountable for their safety commitments and can help highlight emerging best practices and encourage competitors to adopt more responsible approaches,” said Professor Yoshua Bengio, Full Professor at Université de Montréal, Founder and Scientific Director of Mila – Quebec AI Institute and 2018 A.M. Turing Award co-winner.

“We launched the Safety Index to give the public a clear picture of where these AI labs stand on safety issues,” said FLI president Max Tegmark, a professor doing AI research at MIT. “The reviewers have decades of combined experience in AI and risk assessment, so when they speak up about AI safety, we should pay close attention to what they say.”

Coverage

- TIME Magazine, 12 Dec 2024 – Which AI Companies Are the Safest—and Least Safe?

- IEEE Spectrum, 13 Dec 2024 – Leading AI Companies Get Lousy Grades on Safety: A new report from the Future of Life Institute gave mostly Ds and Fs

- Tom’s Guide, 16 Dec 2024 – Meta gets an F in first AI safety scorecard — and the others barely pass

- Fortune, 17 Dec 2024 – Top AI labs aren’t doing enough to ensure AI is safe, a flurry of recent datapoints suggest

- CNBC, 17 Dec 2024 – Prominent AI scientist Max Tegmark explains why AI companies should be regulated

- TechCrunch, 18 Dec 2024 – Who wants ‘Her’-like AI that gets stuff wrong?

- France24, 17 Jan 2025 – Top AI companies score badly on risk and safety assessments, ahead of Paris summit

- Sina (Chinese, Simplified), 16 Dec 2024 – The first AI Safety Index Report in which Bengio participated was released, with C being the highest score

- Samsung SDS (Korean), 11 Apr 2025 – AI Risk Management Framework

- Global Views Monthly (Chinese, Traditional), 23 Dec 2024 – OpenAI and Google DeepMind both got low scores! AI Safety Index Report, US Research Institute Reveals Secrets