
FLI April, 2019 Newsletter

The Future of Life Award, Fiction Contest, New Podcasts & More

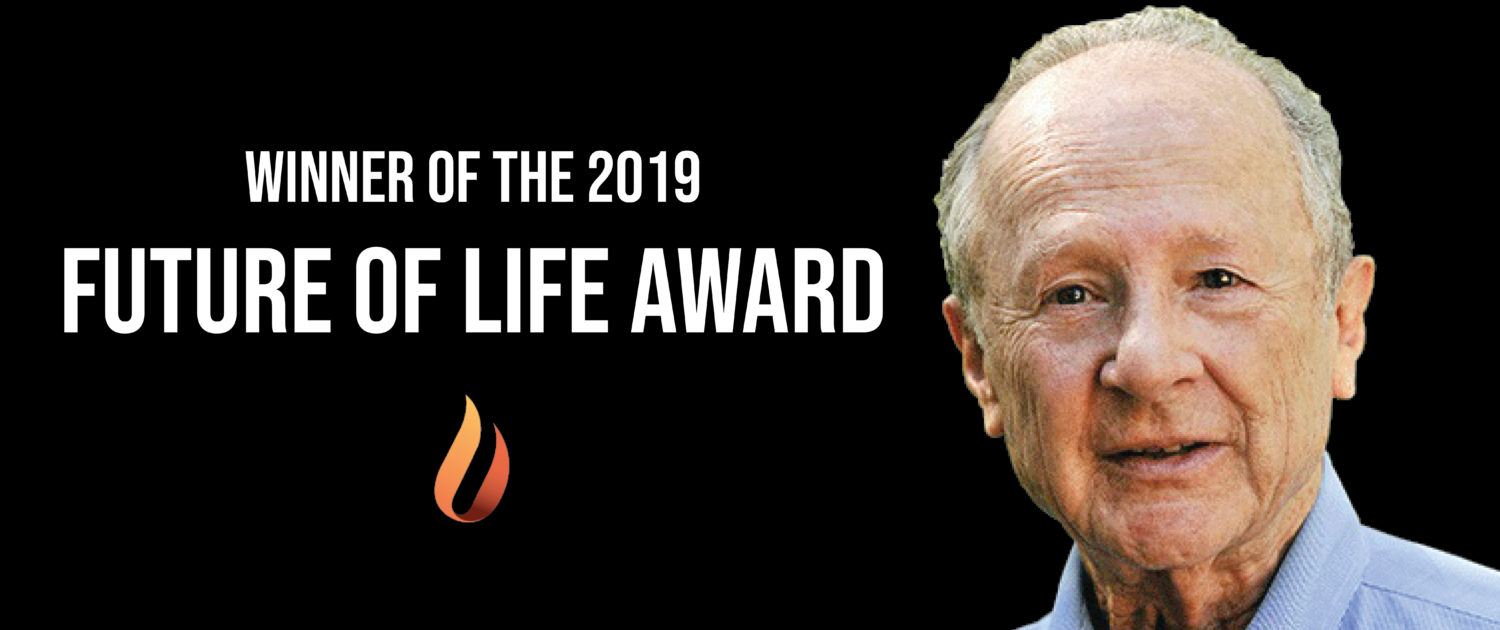

On April 9th, Dr. Matthew Meselson received the $50,000 Future of Life Award at a ceremony at the University of Colorado Boulder’s Conference on World Affairs. Dr. Meselson was a driving force behind the 1972 Biological Weapons Convention, an international ban that has prevented one of the most inhumane forms of warfare known to humanity. April 9th marked the eve of the Convention’s 47th anniversary.

Meselson became interested in biological weapons during the 1960s, while employed by the U.S. Arms Control and Disarmament Agency. It was on a tour of Fort Detrick, where the U.S. was then manufacturing anthrax, that he learned the motivation for developing biological weapons: they were cheaper than nuclear weapons. Meselson was struck, he says, by the illogic of this: it would be an obvious national security risk to decrease the production cost of WMDs.

The use of biological weapons was already prohibited by the 1925 Geneva Protocol, an international treaty that the U.S. had never ratified. So Meselson wrote a paper, “The United States and the Geneva Protocol,” outlining why it should do so. Meselson knew Henry Kissinger, who passed his paper along to President Nixon, and by the end of 1969 Nixon renounced biological weapons.

On Meselson’s advice, Nixon had resubmitted the Geneva Protocol to the Senate for approval. But he also went beyond the terms of the Protocol — which only ban the use of biological weapons — to renounce offensive biological research itself. Stockpiles of offensive biological substances, like the anthrax that Meselson had discovered at Fort Detrick, were destroyed.

Once the U.S. adopted this more stringent policy, Meselson turned his attention to the global stage. He and his peers wanted an international agreement stronger than the Geneva Protocol, one that would ban stockpiling and offensive research in addition to use and would provide for a verification system. From their efforts came the Biological Weapons Convention, which was signed in 1972 and is still in effect today.

Utopian Fiction Contest

In honor of Earth Day and the future of the planet, the Future of Life Institute is co-sponsoring a short fiction writing contest with Sapiens Plurum. We love The Handmaid’s Tale and Black Mirror as much as anyone, but we think the world needs more utopian fiction right now. So we’re asking you to write down your vision for the future and share it with us. Learn more.

Job Openings

Want to get more involved?

Georgetown University’s Center for Security and Emerging Technology is hiring. Find a list of openings here.

New Podcast Episodes

FLI Podcast: The Unexpected Side Effects of Climate Change with Fran Moore and Nick Obradovich

The side effects of climate change remain relatively unknown, but we can expect a warming world to impact every facet of our lives. In honor of Earth Day, this month’s podcast focuses on these side effects and what we can do about them. Ariel spoke with Dr. Nick Obradovich, a research scientist at the MIT Media Lab, and Dr. Fran Moore, an assistant professor in the Department of Environmental Science and Policy at the University of California, Davis. They study the social and economic impacts of climate change, and they shared some of their most remarkable findings.

The space of AI alignment research is highly dynamic, and it’s often difficult to get a bird’s-eye view of the landscape. This two-part podcast attempts to partially remedy this by providing an overview of the organizations participating in technical AI research, their specific research directions, and how these approaches all come together to make up the state of technical AI alignment efforts. Lucas was joined by Rohin Shah, a fifth-year PhD student at UC Berkeley with the Center for Human-Compatible AI.

The second episode of this two-part podcast continues the discussion from Part 1 by going into more depth on the specific approaches to AI alignment.

You can find all the FLI Podcasts here and all the AI Alignment Podcasts here. Or listen on SoundCloud, iTunes, Google Play, and Stitcher.

In Case You Missed It

Why are we so concerned about lethal autonomous weapons? Ariel spoke to four experts (one physician, one lawyer, and two human rights specialists), all of whom offered their most powerful arguments on why the world needs to ensure that algorithms are never allowed to make the decision to take a life. The episode was even recorded at the United Nations Convention on Certain Conventional Weapons, where a ban on lethal autonomous weapons was under discussion.

Featuring experts Yoshua Bengio, Meredith Whittaker, Bart Selman, Carla Gomes, Stuart Russell, Laura Nolan, Toby Walsh, and Meia Chita-Tegmark and narrated by Joseph Gordon-Levitt.

What We’ve Been Up to This Month

Anthony Aguirre gave a statement during the Defense Innovation Board’s third roundtable at Stanford University. Video of the full roundtable, including Anthony’s statement, can be found here.

Ariel Conn moderated two panels at CU Boulder’s Conference on World Affairs, and she participated in the quarterly meeting of the CNAS AI Task Force.

Jessica Cussins Newman gave a talk at the Center for Human-Compatible AI (CHAI) as part of their Interdisciplinary Seminars series. She discussed the role of governments in ensuring safe and beneficial AI development and use, and highlighted the comparative analysis of AI policy and strategy from ten countries around the world as showcased in her report, Toward AI Security.

Richard Mallah gave a keynote fireside chat on Shaping the Future of AI at EAGx Boston and participated in a Partnership on AI workshop on global coordination. He also participated in last month’s Augmented Intelligence Summit.

FLI co-sponsored an AI policy briefing at the California State Capitol, along with the UC Berkeley Center for Long-Term Cybersecurity and the CITRIS Policy Lab. The briefing was co-sponsored by Senator Ling Ling Chang (R), Assemblymember Ed Chau (D), and Assemblymember Kevin Kiley (R). Max Tegmark was a speaker and Jessica Cussins Newman was the moderator. The briefing emphasized California’s unique potential to be a world leader in responsible AI development and use.

FLI in the News

VOX: The man who stopped America’s biological weapons program

THE GUARDIAN: Can we stop AI outsmarting humanity?

FORBES: The Growing Marketplace For AI Ethics

FORBES: Wrestling With AI Governance Around The World