Podcast: Life 3.0 – Being Human in the Age of Artificial Intelligence

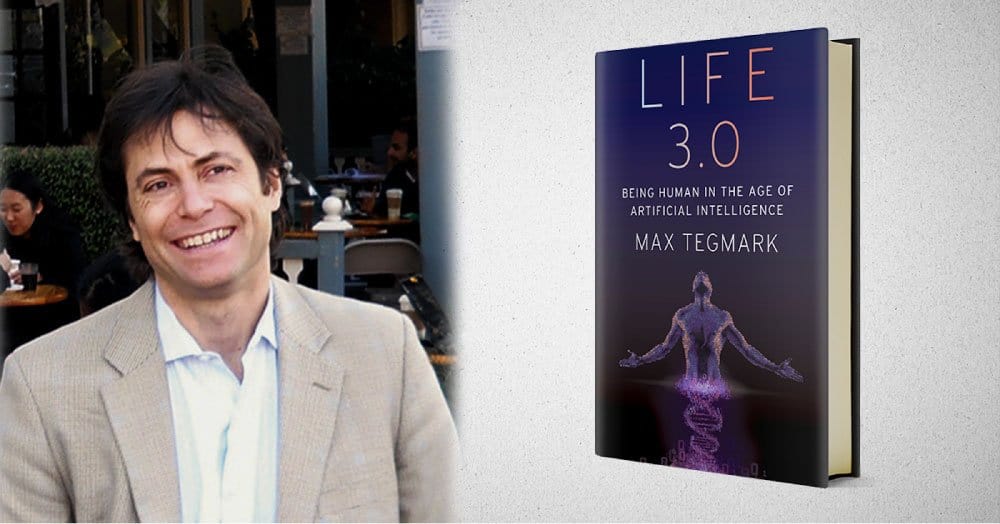

Elon Musk has called it a compelling guide to the challenges and choices in our quest for a great future of life on Earth and beyond, while Stephen Hawking and Ray Kurzweil have referred to it as an introduction and guide to the most important conversation of our time. “It” is Max Tegmark's new book, Life 3.0: Being Human in the Age of Artificial Intelligence.

Tegmark is a physicist and AI researcher at MIT, and he’s also the president of the Future of Life Institute.

The following interview has been heavily edited for brevity, but you can listen to it in its entirety above or read the full transcript here.

Transcript

What makes Life 3.0 an important read for anyone who wants to understand and prepare for our future?

There's been lots of talk about AI disrupting the job market and enabling new weapons, but very few scientists talk seriously about what I think is the elephant in the room: What will happen once machines outsmart us at all tasks?

Will superhuman artificial intelligence arrive in our lifetime? Can and should it be controlled, and if so, by whom? Can humanity survive in the age of AI? And if so, how can we find meaning and purpose if super-intelligent machines provide for all our needs and make all our contributions superfluous?

I'm optimistic that we can create a great future with AI, but it's not going to happen automatically. We have to win this race between the growing power of the technology and the growing wisdom with which we manage it. We don't want to learn from mistakes. We want to get things right the first time because that might be the only time we have.

There are still a lot of AI researchers who are telling us not to worry. What is your response to them?

There are two very basic questions where the world's leading AI researchers totally disagree.

One of them is when, if ever, are we going to get super-human general artificial intelligence? Some people think it's never going to happen or will take hundreds of years. Many others think it's going to happen in decades. The other controversy is what's going to happen if we ever get beyond human-level AI?

Then there are a lot of very serious AI researchers who think that this could be the best thing ever to happen, but it could also lead to huge problems. It's really boring to sit around and quibble about whether we should worry or not. What I'm interested in is asking what, concretely, we can do today to increase the chances of things going well, because that's all that actually matters.

There’s also a lot of debate about whether people should focus on just near-term risks or just long-term risks.

We should obviously focus on both. Take what you're calling the short-term questions: how, for example, do you make computers that are robust, that do what they're supposed to do, and that don't crash or get hacked? That's not only something we absolutely need to solve in the short term as AI gets more and more into society, but it's also a valuable stepping stone toward tougher questions. How are you ever going to build a super-intelligent machine that you're confident is going to do what you want, if you can't even build a laptop that does what you want instead of giving you the blue screen of death or the spinning wheel of doom?

If you want to go far in one direction, first you take one step in that direction.

You mention 12 options for what you think a future world with superintelligence will look like. Could you talk about a couple of the future scenarios? And then what are you hopeful for, and what scares you?

Yeah, I confess, I had a lot of fun brainstorming these different scenarios. When we envision the future, we almost inadvertently obsess about gloomy stuff. Instead, we really need these positive visions to think about what kind of society we would like to have if we have enough intelligence at our disposal to eliminate poverty, disease, and so on. If it turns out that AI can help us solve these challenges, what do we want?

If we have very powerful AI systems, it's crucial that their goals are aligned with our goals. We don't want to create machines that are at first very excited about helping us and then later get as bored with us as kids get with Legos.

Finally, what should the goals be that we want these machines to safeguard? There's obviously no consensus on Earth for that. Should it be Donald Trump's goals? Hillary Clinton's goals? ISIS's goals? Whose goals should it be? How should this be decided? This conversation can't just be left to tech nerds like myself. It has to involve everybody because it's everybody's future that's at stake here.

If we actually create an AI or multiple AI systems that can do this, what do we do then?

That's one of those huge questions that everybody should be discussing. Suppose we get machines that can do all our jobs and produce all our goods and services for us. How do you want to distribute the wealth that's produced? Just because you take care of people materially doesn't mean they're going to be happy. How do you create a society where people can flourish and find meaning and purpose in their lives even if they are not necessary as producers? Even if they don't need to have jobs?

You have a whole chapter dedicated to the cosmic endowment and what happens in the next billion years and beyond. Why should we care about something so far into the future?

It's a beautiful idea if our cosmos can continue to wake up more, and life can flourish here on Earth, not just for the next election cycle, but for billions of years and throughout the cosmos. We have over a billion planets in this galaxy alone, which are very nice and habitable. If we think big together, this can be a powerful way to put our differences aside on Earth and unify around the bigger goal of seizing this great opportunity.

If we were to just blow it by some really poor planning with our technology and go extinct, wouldn't we really have failed in our responsibility?

What do you see as the risks and the benefits of creating an AI that has consciousness?

There is a lot of confusion in this area. If you worry about some machine doing something bad to you, consciousness is a complete red herring. If you're chased by a heat-seeking missile, you don't give a hoot whether it has a subjective experience. You wouldn't say, "Oh I'm not worried about this missile because it's not conscious."

If we create very intelligent machines, say a helper robot that you can have conversations with and that says pretty interesting things, wouldn't you want to know if it feels like something to be that helper robot? If it's conscious, or if it's just a zombie pretending to have these experiences? If you knew that it felt conscious much like you do, presumably that would put it ethically in a very different situation.

It's not our universe giving meaning to us, it's we conscious beings giving meaning to our universe. If there's nobody experiencing anything, our whole cosmos just goes back to being a giant waste of space. It's going to be very important for these various reasons to understand what it is about information processing that gives rise to what we call consciousness.

Why and when should we concern ourselves with outcomes that have low probabilities?

Most of my AI colleagues and I don't think that the probability is very low that we will eventually be able to replicate human intelligence in machines. The question isn't so much “if,” although there are certainly a few detractors out there; the bigger question is “when.”

If we start getting close to human-level AI, there's an enormous Pandora's box, which we want to open very carefully. We want to make sure that if we build these very powerful systems, they already have enough safeguards built into them that some disgruntled ex-boyfriend isn't going to use them for a vendetta, and some ISIS member isn't going to use them for their latest plot.

How can the average concerned citizen get more involved in this conversation, so that we can all have a more active voice in guiding the future of humanity and life?

Everybody can contribute! We set up a website, ageofai.org, where we're encouraging everybody to come and share their ideas for how they would like the future to be. We really need the wisdom of everybody to chart a future worth aiming for. If we don't know what kind of future we want, we're not going to get it.
