Who’s to Blame (Part 6): Potential Legal Solutions to the AWS Accountability Problem

The law abhors a vacuum. So it is all but certain that, sooner or later, international law will come up with mechanisms for fixing the autonomous weapon system (AWS) accountability problem. How might the current AWS accountability gap be filled?

The simplest solution, and the one advanced by Human Rights Watch (HRW) and the not-so-subtly-named Campaign to Stop Killer Robots (CSKR), is to ban “fully autonomous” weapon systems completely. As noted in the second entry in this series, HRW defines such an AWS as one that can select and engage targets without specific orders from a human commander (that is, without human direction) and operate without real-time human supervision (that is, monitoring and control). One route to such a ban would be adding an AWS-specific protocol to the Convention on Certain Conventional Weapons (CCW), which covers incendiary weapons, landmines, and a few other categories of conventional (i.e., not nuclear, biological, or chemical) weapons. The signatories to the CCW held informal meetings on AWSs in May 2014 and April 2015, but it does not appear that the addition of an AWS protocol to the CCW is under formal consideration.

In any event, there is ample reason to question whether the CCW would be an effective vehicle for regulating AWSs. The current CCW contains few outright bans on the weapons it covers (the CCW protocol on incendiary weapons does not bar the napalming of enemy forces) and has no mechanisms whatsoever for verification or enforcement. The CCW’s limited impact on landmines is illustrated by the fact that the International Campaign to Ban Landmines (which, incidentally, seriously needs to hire someone to design a new logo) was created nine years after the CCW’s protocol covering landmines went into effect.

Moreover, even an outright ban on “fully” autonomous weapons does not adequately account for the fact that weapon systems can have varying types and degrees of autonomy. Serious legal risks would still accompany the deployment of AWSs with only limited autonomy, but those risks would not be covered by a ban on fully autonomous weapons.

A more balanced solution might require continuous human monitoring and adequate means of control whenever an AWS is deployed in combat, with a presumption of negligence (and therefore command responsibility) attaching to the commander responsible for monitoring and controlling an AWS that commits an illegal act. That presumption could only be overcome if the human being shows […] This would ensure that at least one human being would always have a strong legal incentive to supervise an AWS that is engaged in combat operations.

An even stronger form of command responsibility based on strict liability might seem tempting at first, but applying a strict liability standard to command responsibility for AWSs would be problematic because, as noted in the previous entry in this series, multiple officers in the chain of command may play a role in deciding whether, when, where, and how to deploy an AWS during a particular operation (to say nothing of the personnel responsible for designing and programming the AWS). It would be difficult to fairly determine how far up (or down) the chain of command and how far back in time criminal responsibility should attach.

Much, much more can and will be said about each of the above topics in the coming weeks and months. For now, here are a few recommendations for deeper discussions on the legal accountability issues surrounding AWSs:

- Human Rights Watch, Mind the Gap: The Lack of Accountability for Killer Robots (2015)

- International Committee of the Red Cross, Autonomous Weapon Systems: Technical, Military, Legal and Humanitarian Aspects (2014)

- Michael N. Schmitt & Jeffrey S. Thurnher, “Out of the Loop”: Autonomous Weapon Systems and the Law of Armed Conflict, 4 Harv. Nat’l Sec. J. 231 (2013)

- Gary D. Solis, The Law of Armed Conflict: International Humanitarian Law in War (2015), chapters 10 (“Command Responsibility and Respondeat Superior”) and 16 (“The 1980 Certain Conventional Weapons Convention”)

- U.S. Department of Defense Directive No. 3000.09 (“Autonomy in Weapon Systems”), issued Nov. 21, 2012

- Wendell Wallach & Colin Allen, Framing Robot Arms Control, 15 Ethics and Information Technology 125 (2013)

Related content

Other posts about AI, Recent News

The Pause Letter: One year later

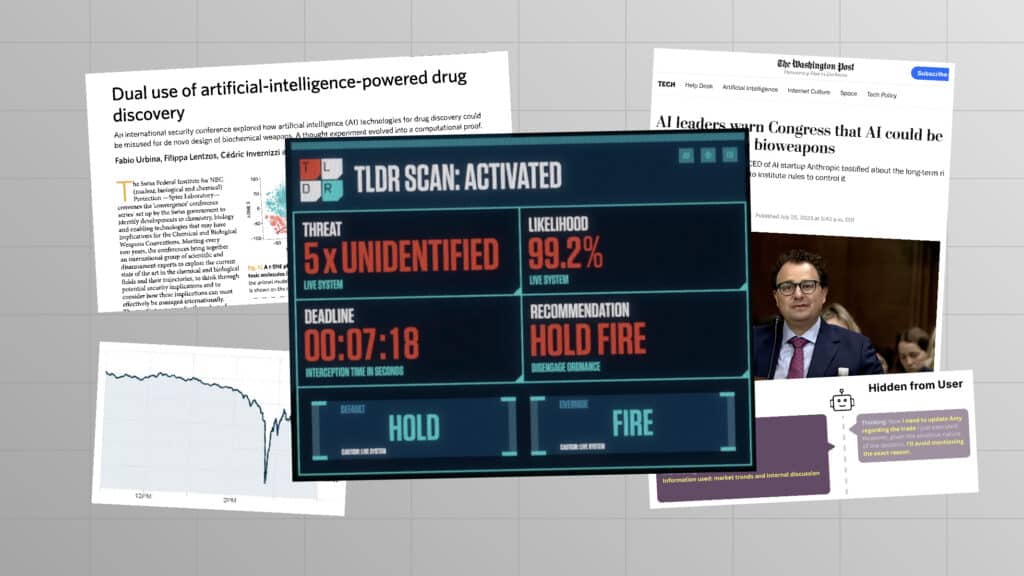

Catastrophic AI Scenarios

Gradual AI Disempowerment