Future of Life Institute Newsletter: Progress on the EU AI Act!


Welcome to the Future of Life Institute newsletter. Every month, we bring 27,000+ subscribers the latest news on how emerging technologies are transforming our world – for better and worse.

If you’ve found this newsletter helpful, why not tell your friends, family and colleagues to subscribe here?

Today’s newsletter is a 6-minute read. We cover:

- Progress on the EU AI Act

- Pioneering AI scientists speak out about existential risk

- Best Practices for AGI Safety and Governance

- How a 1967 solar flare nearly led to nuclear war

The AI Act Clears Another Hurdle!

The Internal Market Committee and the Civil Liberties Committee of the European Parliament have passed their version of the AI Act. We’re delighted that this draft includes General Purpose AI Systems (GPAIS), in line with our 2021 position paper and more recent recommendations.

Why this matters: GPAIS can perform many distinct tasks, including tasks they were not intentionally trained for. Left unchecked, these models (think GPT-4 or Stable Diffusion) can be used for harmful purposes, including disinformation, non-consensual pornography, privacy violations, and more.

What’s next? This version of the AI Act will first go to a vote in the full European Parliament in June. Three-way negotiations between lawmakers, EU member states, and the Commission will follow in July.

There’s still some way to go before we know the final shape of this legislation, but we’ll continue to engage with the process! In the meantime, check out our policy work in Europe and follow our dedicated AI Act website and newsletter to stay up to date with the latest developments.

Are We Finally Looking Up?

Last month, FLI President Max Tegmark wrote that the current discourse on AI safety reminded him of the movie ‘Don’t Look Up’ – a satirical film about how easily our societies can dismiss existential risks.

We’re seeing signs that this is changing. The general public is certainly concerned: two recent surveys, one by YouGov America and one by Reuters/Ipsos, both found that a majority of Americans are concerned about the risks powerful AI systems pose to society.

Experts are adding their voices as well. Geoffrey Hinton and Yoshua Bengio, two AI scientists who won the Turing Award for their pioneering work on deep learning, have both sounded the alarm over the past few weeks.

And on May 30, the Center for AI Safety published a statement, signed by many of the world’s leading AI scientists, business leaders, and politicians, declaring that mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

We’re thrilled to see this issue finally receiving the attention it deserves, and we have set out some next steps to take in light of the statement.

Governance and Policy Updates

AI policy:

▶ A subcommittee of the US Senate Committee on the Judiciary held a hearing on rules for AI oversight, with OpenAI’s Sam Altman, IBM’s Christina Montgomery, and NYU’s Gary Marcus testifying.

▶ The leaders of the G7 countries called for ‘guardrails’ on the development of AI systems.

▶ The second 2023 session of the Group of Governmental Experts (GGE) on lethal autonomous weapons systems concluded in Geneva.

Nuclear security:

▶ US lawmakers want to prevent the government from delegating nuclear launch decisions to AI systems.

Climate change:

▶ A new report from the World Meteorological Organization (WMO) finds that global temperatures are likely to exceed 1.5°C above pre-industrial levels for at least one year within the next five years.

Updates from FLI

▶ FLI President Max Tegmark recently spoke to Stiftung Neue Verantwortung about our open letter calling for a pause on giant AI experiments and how Europe should deal with potentially powerful and risky AI models.

▶ FLI’s Anna Hehir was at the UN in Geneva this week for the Convention on Certain Conventional Weapons (CCW) meetings, where she highlighted the proliferation and escalation risks of autonomous weapons. Her message: these weapons are unpredictable, unreliable, and unexplainable, and they pose a grave threat to global security.

▶ FLI’s Director of US Policy Landon Klein was quoted by Reuters in a report on its latest Reuters/Ipsos poll, which found that 61% of Americans believe AI threatens humanity’s future.

▶ Our podcast host Gus Docker interviewed venture capitalist Maryanna Saenko about the future of innovation, and Nathan Labenz, host of the Cognitive Revolution podcast, about his red teaming work for OpenAI.

New Research: Best Practices for AGI Safety

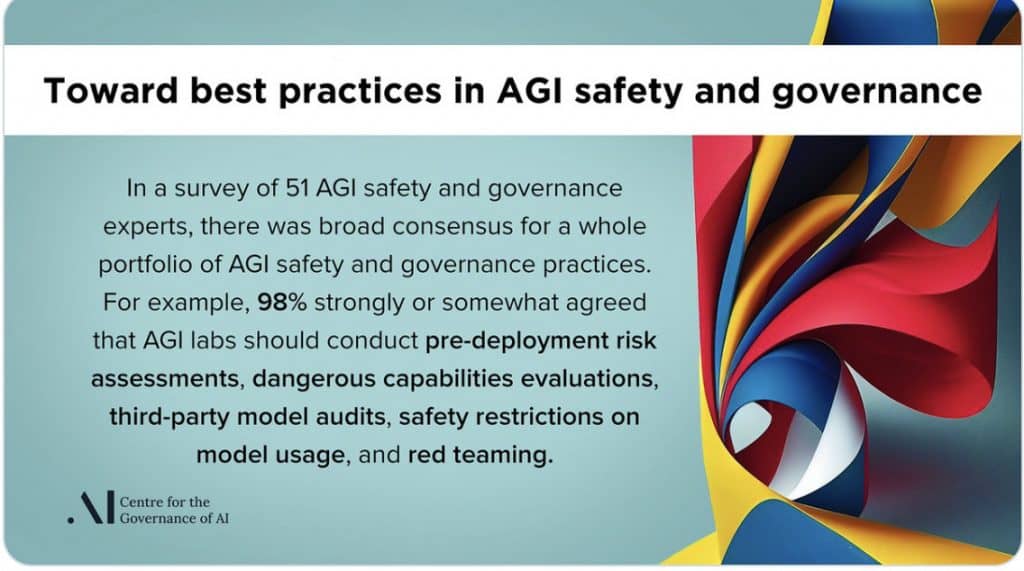

Best practices: The Centre for the Governance of AI (GovAI) published a survey of 51 experts on the best practices, standards, and regulations that AGI labs should follow.

Why this matters: The stated goal of many labs is to build AGI, and both the science and the politics of this goal are hotly disputed. GovAI’s survey sheds light on which steps experts in the field consider necessary to ensure that more powerful AI systems are built with safety as the first priority.

What we’re reading

▶ Rogue AI: Turing Award winner and deep learning pioneer Yoshua Bengio presents ‘a set of definitions, hypotheses and resulting claims about AI systems which could harm humanity’ and then discusses ‘the possible conditions under which such catastrophes could arise.’

▶ AI Safety Research: The White House’s updated National AI Research and Development Strategic Plan acknowledges the existential risk from AI. On page 17, the report notes that ‘Long-term risks remain, including the existential risk associated with the development of artificial general intelligence through self-modifying AI or other means.’

▶ Near Misses: In a special edition on humanity’s close calls with catastrophe, the Bulletin of the Atomic Scientists interviewed Susan Solomon, to whom we presented the Future of Life Award in 2021, about her role in helping to heal the ozone layer.

Dive deeper: Watch our video on how a dedicated group of scientists, bureaucrats, and diplomats saved the ozone layer.

Hindsight is 20/20

On 23 May 1967, a powerful solar storm led the US Air Force to believe that the Soviets had jammed American early warning surveillance radars. Jamming surveillance radars was then considered an act of war, and the US began preparing nuclear-weapon-equipped aircraft for launch.

Fortunately, scientists at the North American Aerospace Defense Command (NORAD) and elsewhere figured out that the flare, not the Soviets, had disrupted the radars.

According to an article in National Geographic, the practice of monitoring solar flares had taken off only a few years before the 1967 incident. What if no one had been monitoring solar flares, and the US had concluded that the Soviets were preparing to strike?

There have been several other close calls, both before and since this incident. How many more times will we roll the dice? Check out our timeline of close calls to learn more about such incidents.

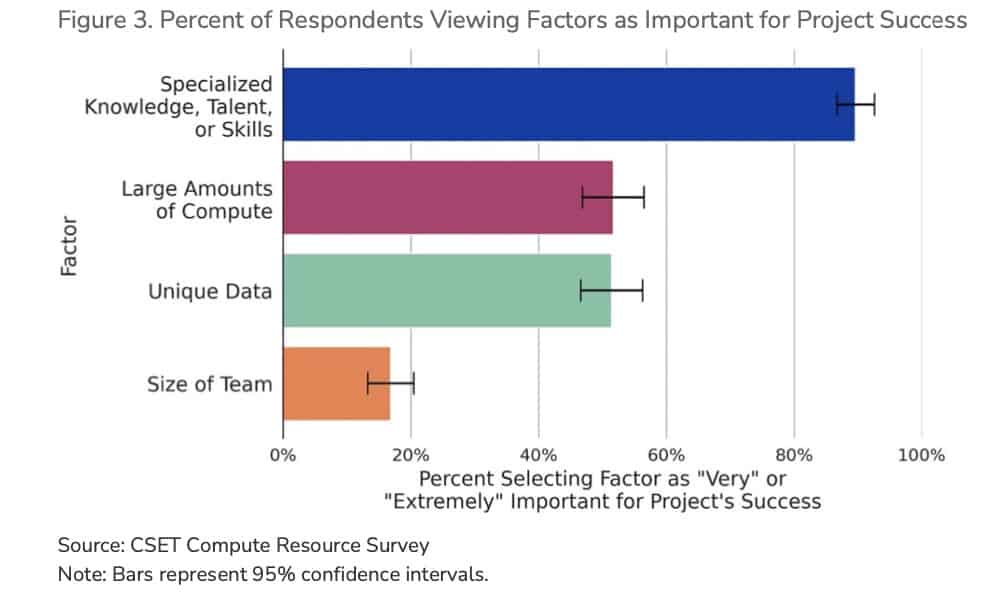

Chart of the Month

Data, algorithms, and compute drive progress in AI. But a survey of 400 AI researchers and experts by the Center for Security and Emerging Technology (CSET) found that the resource they valued most was still human talent.

FLI is a 501(c)(3) non-profit organisation, meaning donations are tax-deductible in the United States. If you need our organisation number (EIN) for your tax return, it’s 47-1052538. FLI is registered in the EU Transparency Register. Our ID number is 787064543128-10.