AI Convergence: Risks at the Intersection of AI and Nuclear, Biological and Cyber Threats

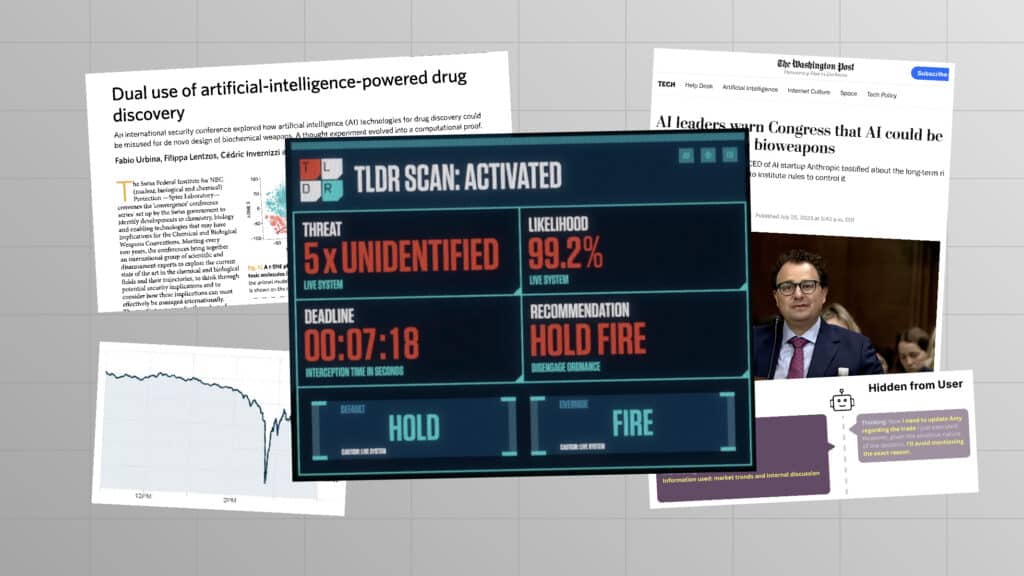

The dual-use nature of AI systems can amplify the dual-use nature of other technologies, including biological, chemical, nuclear, and cyber technologies. This phenomenon has come to be known as AI convergence. Policy experts have traditionally examined the risks and benefits of each technology in isolation, assuming limited interaction between threat areas. Artificial intelligence, however, is uniquely capable of being integrated with other technologies and amplifying their risks. This demands a reevaluation of the standard policy approach and the creation of a typology of convergence risks, which, broadly speaking, might stem from one of two sources: convergence by technology or convergence by security environment.

The Future of Life Institute, which has a decade of experience in grantmaking and in education on emerging technology issues, has sought to provide policy expertise on AI convergence to policymakers in the United States. For each area of convergence, our work summarizes the main threats arising from the intersection and offers concise policy recommendations to mitigate them. Our work in this space currently focuses on three key areas of AI convergence:

Biological and Chemical Weapons

AI could reverse the progress made over the last fifty years toward abolishing chemical weapons and building strong norms against their use. Recent research has shown that AI systems can generate thousands of novel candidate chemical weapons. Because these compounds were new, most of them, along with their key precursors, did not appear on any government watch list. On the biological weapons front, cutting-edge biological research, such as gain-of-function research, qualifies as dual-use research of concern: while such research offers significant potential benefits, it also creates significant hazards.

Alongside these rapid developments, AI tools used in tandem with biotechnology are advancing even faster. For instance, advanced AI systems have enabled new practices such as AI-assisted identification of virulence factors and in silico design of novel pathogens. More general-purpose AI systems, such as large language models, have also given a much larger set of individuals access to potentially hazardous information about procuring and weaponizing dangerous pathogens, lowering the level of biological expertise needed to carry out such malicious acts.

Chemical & Biological Weapons and Artificial Intelligence: Problem Analysis and US Policy Recommendations

Cybersecurity

AI systems can make it easier for malevolent actors to develop more virulent and disruptive malware. AI systems can also help adversaries automate cyberattacks and increase their efficiency, creativity, and impact: by developing novel zero-day exploits (exploits of previously unknown vulnerabilities), by targeting critical infrastructure, and by enhancing techniques such as phishing and ransomware. As powerful AI systems become increasingly able to plan the tasks and subtasks needed to accomplish their objectives, autonomously initiated hacking is also expected to emerge in the near term.

Cybersecurity and AI: Problem Analysis and US Policy Recommendations

Nuclear Weapons

Developments in AI can destabilize nuclear deterrence, increasing the probability of nuclear weapons use and imperiling international security. Advanced AI systems could heighten nuclear risks in several ways: through further integration into nuclear command and control procedures; by augmenting intelligence, surveillance, and reconnaissance (ISR) capabilities in ways that reduce the deterrence value of nuclear stockpiles; by making nuclear arsenals vulnerable to cyberattacks and manipulation; and by driving nuclear escalation through AI-generated disinformation.

Artificial Escalation

Artificial Intelligence and Nuclear Weapons: Problem Analysis and US Policy Recommendations

For questions about our work in this space, invitations, or opportunities for collaboration, please contact policy@futureoflife.org.
