AI Researcher Vincent Conitzer

AI Safety Research

Vincent Conitzer

Kimberly J. Jenkins University Professor of New Technologies

Professor of Computer Science, Professor of Economics, and Professor of Philosophy

Project: How to Build Ethics into Robust Artificial Intelligence

Amount Recommended: $200,000

Project Summary

Humans take great pride in being the only creatures who make moral judgments, even though their moral judgments often suffer from serious flaws. Some AI systems do generate decisions based on their consequences, but consequences are not all there is to morality. Moral judgments are also affected by rights (such as privacy), roles (such as in families), past actions (such as promises), motives and intentions, and other morally relevant features. These diverse factors have not yet been built into AI systems. Our goal is to do just that. Our team plans to combine methods from computer science, philosophy, and psychology in order to construct an AI system that is capable of making plausible moral judgments and decisions in realistic scenarios. We hope that this work will provide a basis that leads to future highly-advanced AI systems acting ethically and thereby being more robust and beneficial. Humans, by comparing their own moral judgments to the output of the resulting system, will be able to understand their own moral judgments and avoid common mistakes (such as partiality and overlooking relevant factors). In these ways and more, moral AI might also make humans more moral.

Technical Abstract

Most contemporary AI systems base their decisions solely on consequences, whereas humans also consider other morally relevant factors, including rights (such as privacy), roles (such as in families), past actions (such as promises), motives and intentions, and so on. Our goal is to build these additional morally relevant features into an AI system. We will identify morally relevant features by reviewing theories in moral philosophy, conducting surveys in moral psychology, and using machine learning to locate factors that affect human moral judgments. We will use and extend game theory and social choice theory to determine how to make these features more precise, how to weigh conflicting features against each other, and how to build these features into an AI system. We hope that eventually this work will lead to highly advanced AI systems that are capable of making moral judgments and acting on them. Humans will then be able to compare these outputs to their own moral judgments in order to learn which of these judgments are distorted by biases, partiality, or lack of attention to relevant factors. In such ways, moral AI can also contribute to our own understanding of morality and our moral lives.

The Evolution of AI: Can Morality be Programmed?

At first glance, the goal seems simple enough: make an AI that behaves in an ethically responsible way. However, it’s far more complicated than it initially seems, as a great many factors come into play. As Conitzer’s project outlines, “moral judgments are affected by rights (such as privacy), roles (such as in families), past actions (such as promises), motives and intentions, and other morally relevant features. These diverse factors have not yet been built into AI systems.”

That’s what this team is trying to do now.

In a recent interview with Futurism, Conitzer clarified that, while the public may be concerned about ensuring that rogue AI don’t decide to wipe out humanity, such a thing really isn’t a viable threat at the present time (and it won’t be for a long, long time). As a result, his team isn’t concerned with preventing a global robotic apocalypse by making selfless AI that adore humanity. Rather, on a much more basic level, they are focused on ensuring that our artificial intelligence systems are able to make the hard, moral choices that humans make on a daily basis.

So, how do you make an AI that is able to make a difficult moral decision?

Conitzer explains that, to reach their goal, the team is following a two-step process: having people make ethical choices in order to find patterns, and then figuring out how those patterns can be translated into an artificial intelligence. He clarifies, “what we’re working on right now is actually having people make ethical decisions, or state what decision they would make in a given situation, and then we use machine learning to try to identify what the general pattern is and determine the extent that we could reproduce those kind of decisions.”

In short, the team is trying to find the patterns in our moral choices and translate these patterns into AI systems. Conitzer notes that, on a basic level, it’s all about making predictions regarding what a human would do in a given situation: “if we can become very good at predicting what kind of decisions people make in these kind of ethical circumstances, well then, we could make those decisions ourselves in the form of the computer program.”

However, one major problem with this is, of course, that morality is not objective — it’s neither timeless nor universal.

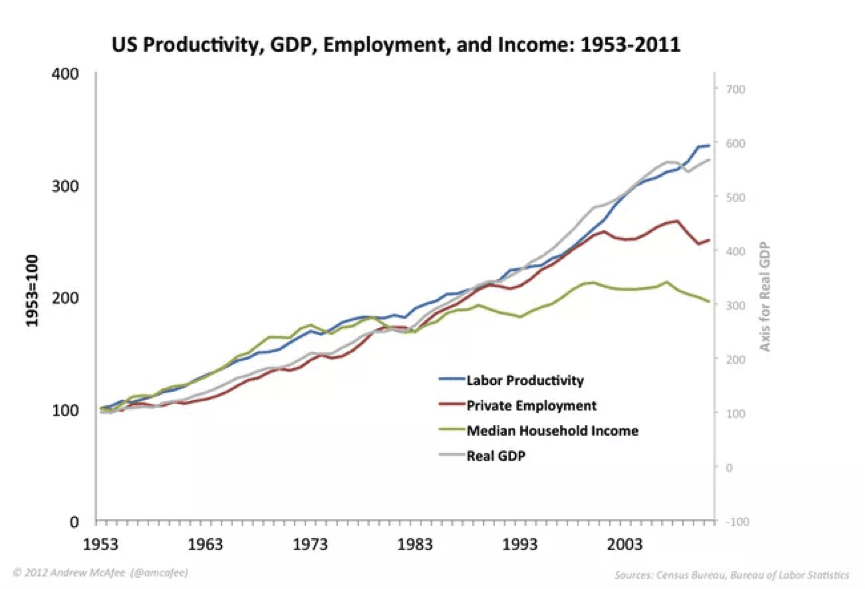

Conitzer articulates the problem by looking to previous decades: “if we did the same ethical tests a hundred years ago, the decisions that we would get from people would be much more racist, sexist, and all kinds of other things that we wouldn’t see as ‘good’ now. Similarly, right now, maybe our moral development hasn’t come to its apex, and a hundred years from now people might feel that some of the things we do right now, like how we treat animals, are completely immoral. So there’s kind of a risk of bias and with getting stuck at whatever our current level of moral development is.”

And of course, there is the aforementioned problem regarding how complex morality is. As Conitzer puts it, “Pure altruism, that’s very easy to address in game theory, but maybe you feel like you owe me something based on previous actions. That’s missing from the game theory literature, and so that’s something that we’re also thinking about a lot: how can you make this, what game theory calls a ‘solution concept,’ sensible? How can you compute these things?”

To solve these problems, and to help figure out exactly how morality functions and can (hopefully) be programmed into an AI, the team is combining methods from computer science, philosophy, and psychology. “That’s, in a nutshell, what our project is about,” Conitzer asserts.

But what about those sentient AI? When will we need to start worrying about them and discussing how they should be regulated?

The Human-Like AI

According to Conitzer, human-like artificial intelligence won’t be around for some time yet (so yay! No Terminator-style apocalypse… at least for the next few years).

“Recently, there have been a number of steps towards such a system, and I think there have been a lot of surprising advances… but I think having something like a ‘true AI,’ one that’s really as flexible, able to abstract, and do all these things that humans do so easily, I think we’re still quite far away from that,” Conitzer asserts.

True, we can program systems to do a lot of things that humans do well, but there are some things that are exceedingly complex and hard to translate into a pattern that computers can recognize and learn from (which is ultimately the basis of all AI).

“What came out of early AI research, the first couple decades of AI research, was the fact that certain things that we had thought of as being real benchmarks for intelligence, like being able to play chess well, were actually quite accessible to computers. It was not easy to write and create a chess-playing program, but it was doable.”

Indeed, today, we have computers that are able to beat the best players in the world in a host of games, such as chess and Go.

But Conitzer clarifies that, as it turns out, playing games isn’t exactly a good measure of human-like intelligence. Or at least, there is a lot more to the human mind. “Meanwhile, we learned that other problems that were very simple for people were actually quite hard for computers, or to program computers to do. For example, recognizing your grandmother in a crowd. You could do that quite easily, but it’s actually very difficult to program a computer to recognize things that well.”

Since the early days of AI research, we have made computers that are able to recognize and identify specific images. However, to sum up the main point, it is remarkably difficult to program a system that is able to do all of the things that humans can do, which is why it will be some time before we have a ‘true AI.’

Yet, Conitzer asserts that now is the time to start considering what rules we will use to govern such intelligences. “It may be quite a bit further out, but to computer scientists, that means maybe just on the order of decades, and it definitely makes sense to try to think about these things a little bit ahead.” And he notes that, even though we don’t have any human-like robots just yet, our intelligence systems are already making moral choices and could, potentially, save or end lives.

“Very often, many of these decisions that they make do impact people, and they may need to make decisions that would typically be considered morally loaded. And a standard example is a self-driving car that has to decide to either go straight and crash into the car ahead of it or veer off and maybe hurt some pedestrian. How do you make those trade-offs? And that I think is something we can really make some progress on. This doesn’t require superintelligent AI, this can just be programs that make these kind of trade-offs in various ways.”

But of course, knowing what decision to make will first require knowing exactly how our morality operates (or at least having a fairly good idea). From there, we can begin to program it, and that’s what Conitzer and his team are hoping to do.

This article is part of a Future of Life series on the AI safety research grants, which were funded by generous donations from Elon Musk and the Open Philanthropy Project.

Publications

- Vincent Conitzer, et al. Moral Decision Making Frameworks for Artificial Intelligence. (Preliminary version.) To appear in Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence (AAAI-17) Senior Member / Blue Sky Track, San Francisco, CA, USA, 2017. https://users.cs.duke.edu/~conitzer/moralAAAI17.pdf

Other Articles

- Artificial Intelligence: Where’s the philosophical scrutiny? Published in the magazine Prospect.

Workshops

- Moral AI Projects:

- Each part of this workshop included an in-depth discussion of an innovative model for moral artificial intelligence. The first group of speakers explained and defended the bottom-up approach that they are developing with support from FLI. The second session was led by a guest speaker who presented a dialogic theory of moral reasoning that has the potential to be programmed into artificial intelligence systems. In the end, both groups found that their perspectives were complementary rather than competing. The audience consisted of around 20 students and faculty from a wide variety of fields.

- Conitzer and his team participated in the ethics workshop at AAAI, describing their work on this project in a session and also serving on a panel on research directions for keeping AI beneficial.

Ongoing Projects/Recent Progress

- Machine Learning, Predictions, and Morally Relevant Features: Conitzer and his team have been applying techniques from machine learning (specifically, recommender systems) to determine how well they can predict subjects’ morality ratings. For this project, they are using a pre-existing data set gathered by some members of their group (Clifford et al., 2015). They have implemented several machine learning algorithms, including collaborative filtering and user/item based recommender systems, the best of which so far predicts moral judgments with a 55% success rate (the rating is on a scale from 1 to 5, so random guessing gives 20%). They plan to implement other approaches in the coming months. Besides optimizing performance, they are particularly interested in interpretability: Can machine learning uncover new principles governing what makes people judge something as morally wrong? One key aspect of this project is characterizing and interpreting the features that the learning algorithm generates to make its predictions. They are still in the process of interpreting the features identified by the current algorithm, and will be testing other approaches that might be better tuned for identifying interpretable moral features in the coming months.

- Surveys of Moral Views about Artificial Intelligence in Various Domains: This project collects data from human subjects on preferences for or against computer agents making decisions in morally-laden contexts, such as military drone guidance and assignment of donated kidneys. The results will help Conitzer and his team understand in which domains decisions by AI are generally accepted and which factors contribute to this acceptance. (Again, given an algorithm that predicts such judgments accurately, an AI agent could use this to decide whether it should be the one making a given decision.) Thus far in their experiments, it appears that these preferences are primarily guided by perceived capability of both humans and computers to perform complicated tasks, rather than moral factors like concerns about fairness or harm. Specifically, the belief in the ability of computers to handle complex tasks predicts the degree to which an individual is likely to prefer computer agents to human agents in the scenarios tested. At the same time, they found that scenarios that may be more familiar to participants (e.g., setting a vehicle’s following distance, calculating insurance premiums) were those for which a majority of participants preferred a computer to make the decision. When studying this more closely, they found that the amount of exposure subjects reported to have had to the idea of computer agents making a decision in a particular domain may mediate subjects’ acceptance of computer agents making decisions in that domain. Conitzer and his team will shortly begin investigating whether they can modulate participants’ acceptance of computer agents by modulating their exposure to what computer agents are able to do.

- Ethical Game-theoretic Solution Concepts: Game theory provides a rich language for modeling scenarios with multiple agents, as well as solution concepts that specify what it means to act strategically in such scenarios. These form the basis of much AI research. Conitzer and his team are defining new concepts that specify what it means to act ethically. In their work, they have defined such a concept, outlined an axiomatic proof justifying it, and designed a heuristic algorithm that appears to be highly effective at computing these solutions. They are also planning experiments with human subjects on variants of the “trust game” (a standard example where people deviate from the traditional solutions of game theory, presumably because the latter are perceived to be morally wrong) to evaluate these concepts. They have begun building the infrastructure needed to allow participants in different geographical locations to engage in these games simultaneously through online interfaces, and will use the data they collect from these interfaces to understand people’s moral reasoning in these scenarios and refine their ethical game-theoretic concepts.
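To make the rating-prediction task from the first item in the list above more concrete, here is a minimal sketch of user-based collaborative filtering on a 1-to-5 scale. It is not the team's implementation: the toy `RATINGS` table, the scenario names, and the helper functions `similarity`, `predict`, `leave_one_out_accuracy`, and `random_baseline` are all hypothetical, and the similarity measure (mean-centered cosine) is just one common choice. It also shows where the 20% chance level comes from: with five equally likely ratings, a random guess matches one time in five.

```python
import math

# Hypothetical toy data: RATINGS[user][scenario] is a 1-5 "how wrong is this?" rating.
RATINGS = {
    "u1": {"s1": 5, "s2": 4, "s3": 1, "s4": 2},
    "u2": {"s1": 5, "s2": 5, "s3": 1},
    "u3": {"s1": 1, "s2": 2, "s3": 5, "s4": 4},
    "u4": {"s1": 4, "s2": 4, "s4": 1},
}


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def similarity(a, b):
    """Mean-centered cosine similarity over the scenarios both users rated."""
    shared = sorted(set(a) & set(b))
    if len(shared) < 2:
        return 0.0
    mean_a, mean_b = mean(a.values()), mean(b.values())
    dev_a = [a[s] - mean_a for s in shared]
    dev_b = [b[s] - mean_b for s in shared]
    denom = math.sqrt(sum(x * x for x in dev_a)) * math.sqrt(sum(y * y for y in dev_b))
    if denom == 0:
        return 0.0
    return sum(x * y for x, y in zip(dev_a, dev_b)) / denom


def predict(ratings, user, scenario):
    """Predict a rating from similar users' deviations, rounded and clipped to 1-5."""
    target = ratings[user]
    base = mean(target.values())
    num = den = 0.0
    for other, theirs in ratings.items():
        if other == user or scenario not in theirs:
            continue
        sim = similarity(target, theirs)
        num += sim * (theirs[scenario] - mean(theirs.values()))
        den += abs(sim)
    raw = base if den == 0 else base + num / den
    return min(5, max(1, round(raw)))


def leave_one_out_accuracy(ratings):
    """Hide each known rating in turn and count exact matches of the prediction."""
    hits = total = 0
    for user, rated in ratings.items():
        for scenario, actual in rated.items():
            held_out = {u: dict(r) for u, r in ratings.items()}
            del held_out[user][scenario]
            hits += int(predict(held_out, user, scenario) == actual)
            total += 1
    return hits / total


def random_baseline(num_levels):
    """Expected exact-match rate when guessing uniformly among the rating levels."""
    return 1 / num_levels


if __name__ == "__main__":
    print("predicted rating for u2 on s4:", predict(RATINGS, "u2", "s4"))
    print("leave-one-out accuracy:", leave_one_out_accuracy(RATINGS))
    print("random baseline:", random_baseline(5))
```

Exact-match accuracy is a strict measure for ordinal ratings; a prediction of 4 when the true rating is 5 counts as a miss, which is worth keeping in mind when reading a figure such as 55%.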
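The trust game mentioned in the last item above can be sketched in a few lines. This is the common two-player investment form, not the team's experimental design: the `TrustGame` class, the endowment of 10, and the multiplier of 3 are illustrative assumptions. The sketch shows why the traditional solution (backward induction with purely self-interested players) predicts that nothing is sent, even though both players would be better off if trust were extended and honored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrustGame:
    endowment: int = 10   # assumed starting amount held by the investor
    multiplier: int = 3   # assumed factor applied to whatever the investor sends

    def payoffs(self, sent, returned):
        """Return (investor payoff, trustee payoff) for one play of the game."""
        if not 0 <= sent <= self.endowment:
            raise ValueError("sent must be between 0 and the endowment")
        pot = sent * self.multiplier
        if not 0 <= returned <= pot:
            raise ValueError("returned must be between 0 and the multiplied amount")
        return self.endowment - sent + returned, pot - returned

    def backward_induction(self):
        """Traditional solution: a selfish trustee returns 0, so the investor
        picks the amount that maximizes their own payoff given that response."""
        selfish_return = 0
        best_sent = max(
            range(self.endowment + 1),
            key=lambda sent: self.payoffs(sent, selfish_return)[0],
        )
        return best_sent, selfish_return


if __name__ == "__main__":
    game = TrustGame()
    sent, returned = game.backward_induction()
    print("traditional prediction:", game.payoffs(sent, returned))
    # One of many outcomes where trust is extended and reciprocated.
    print("trusting outcome:", game.payoffs(10, 15))
```

Human subjects often send and return positive amounts in games of this kind, which is the deviation from the traditional solution that the planned experiments are meant to probe.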

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014.
