Real-Life Technologies that Prove Autonomous Weapons are Already Here

Lethal autonomous weapons have been in development for years. In fact, we warned the world about this back in 2017.

Unfortunately, Slaughterbots are now here.

In this article, we have collected 4 examples of real-life autonomous weapons which exist today, and have either already been deployed in military operations or will be deployed in the near future.

1 – STM Kargu-2

In the spring of 2020, it was reported that an autonomous drone strike had taken place in Libya. As far as we know, this was the first documented case of an autonomous weapon being used in a real military operation.

According to a recent UN report, a drone airstrike in Libya from the spring of 2020—made against Libyan National Army forces by Turkish-made STM Kargu-2 drones on behalf of Libya’s Government of National Accord—was conducted by weapons systems with no known humans “in the loop.”

One of the Kargu-2 drones used in the attack was downed and recovered for inspection, which allowed us to learn the following details about its functionality:

The STM Kargu-2 is a flying quadcopter that weighs a mere 7 kg, is being mass-produced, is capable of fully autonomous targeting, can form swarms, remains fully operational when GPS and radio links are jammed, and is equipped with facial recognition software to target humans. In other words, it’s a Slaughterbot.

2 – Jaeger-C

In November 2021, a new form of autonomous weapon got its first military contract:

Australian robot vehicle maker GaardTech announced a contract Thursday to supply its Jaeger-C uncrewed combat vehicle to the Australian Army for demonstrations in 2022.

The wheeled Jaeger-C is a small machine with a low profile designed to attack from ambush. In some ways, it might be seen as a mobile robotic mine. This is especially true because the makers note it can be remote-controlled or operate “autonomously with image analysis and trained models linked to robotic actions,” according to a report in Overt Defense.

The weapon has two modes of operation: Chariot mode, for engaging human targets, and Goliath mode, for engaging vehicles. Here is how the two modes work:

In Chariot mode, the robot engages targets with an undisclosed weapon, which is likely to be a 7.62 mm medium machine gun. It might also be something like the 6.5mm sniper rifle in a Special Purpose Unmanned Rifle pod recently seen mounted on a quadruped robot. In Goliath mode, the robot carries out a kamikaze attack on a vehicle. This is named after the Goliath Tracked Mine deployed by German forces in WWII. Known as the ‘beetle tank,’ these little tracked vehicles were less than five feet long but carried a hundred-pound explosive charge and were used against tanks and fortifications.

When it identifies an armoured vehicle target, such as a tank, the Jaeger-C will enter ‘Goliath mode’ and roll at up to 50 mph towards the vehicle. When it is close enough, the on-board explosive will automatically detonate, causing critical damage to the target.

It may be possible for a tank to react in time and destroy a fast-approaching Jaeger-C, but this would become very difficult if multiple attack robots were swarming the target vehicle at once.

The Jaeger-C carries an armor-piercing shaped charge; the size is unspecified, but it’s likely to be at least comparable to the 20-pound warhead on the FGM-148 Javelin, and it has the huge advantage of attacking the belly of the tank, where the armor is thinnest.

No human operator needs to be present – the Jaeger-C is able to perform all of these functions, including recognising a target and deciding whether or not to detonate, by itself.

3 – US Air Force MQ-9 Reaper

In December 2020, Forbes ran this headline:

U.S. To Equip MQ-9 Reaper Drones With Artificial Intelligence

This caused a bit of a stir in the existential risk community.

The Pentagon’s Joint Artificial Intelligence Center has awarded a $93.3 million contract to General Atomics Aeronautical Systems Inc (GA-ASI), makers of the MQ-9 Reaper, to equip the drone with new AI technology. The aim is for the Reaper to be able to carry out autonomous flight, decide where to direct its battery of sensors, and to recognize objects on the ground.

In September, the Air Force announced that General Atomics had flown a Reaper fitted with a new device known as an Agile Condor pod under its wing for the first time. Agile Condor, which has been in development by the Air Force Research Laboratory for some years, is effectively a flying supercomputer (‘high-performance embedded computing’) optimized for artificial intelligence applications. Built by SRC Inc, it packs the maximum computing capacity into the minimum space, with the lowest possible power requirements. Its modular architecture is built around machine learning (suggesting a lot of GPUs or other processors optimized for parallel processing), and the makers anticipate upgrades to neuromorphic computing hardware which mimics the human brain.

In short, this new ‘smart’ drone promises to reduce the number of human-hours required to review incoming data and make critical decisions.

“Instead of taking hours, sometimes days or even weeks – decisions can now be made in near real-time. If the system detects an anomaly on the ground, warfighters are alerted within minutes, allowing them to investigate and act while it’s still relevant,” according to SRC’s page on Agile Condor.

However, the same technology also makes it possible for the drone to act autonomously and make decisions by itself.

It also opens up the possibility of the Reaper operating on its own. An Air Force slide of the Agile Condor concept of operations shows the drone losing both its communications link and GPS navigation at the start of its mission. An existing Reaper would circle in place or fly back to try and re-establish communications; the AI-boosted version uses its AI to navigate using landmarks and find the target area – as well as spotting threats on the ground and changing its flight path to avoid them.

Though it may seem harmless for an autonomous drone to use its new-found intelligence to avoid obstacles, it will be extremely tempting for military personnel to rely more and more on their drones’ autonomous capabilities, especially when there is a communications or GPS disconnection at critical moments. Eventually, they might even delegate the task of deciding whether or not to strike to the drones’ onboard system.

Unfortunately, the Pentagon’s policy on ‘human judgement’ is sufficiently vague to allow it to mean whatever they decide is in their best interests at the time:

When it comes to autonomous weapons, the Pentagon’s stated policy is that a human operator will always make the firing decision. However, this policy has some flexibility: it simply demands “appropriate levels of human judgment,” whatever that means.

It seems that more and more critical decisions will be made ‘on the fly’ from now on.

Should algorithms decide who lives and who dies?

We believe that algorithms should not be empowered to decide who lives and who dies. If you agree, we need your help to demonstrate widespread support for this cause. Will you help us to #BanSlaughterbots, and take action against these weapons?

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.

Related content

Other posts about Autonomous Weapons

Future of Life Institute Statement on the Pope’s G7 AI Speech

An introduction to the issue of Autonomous Weapons

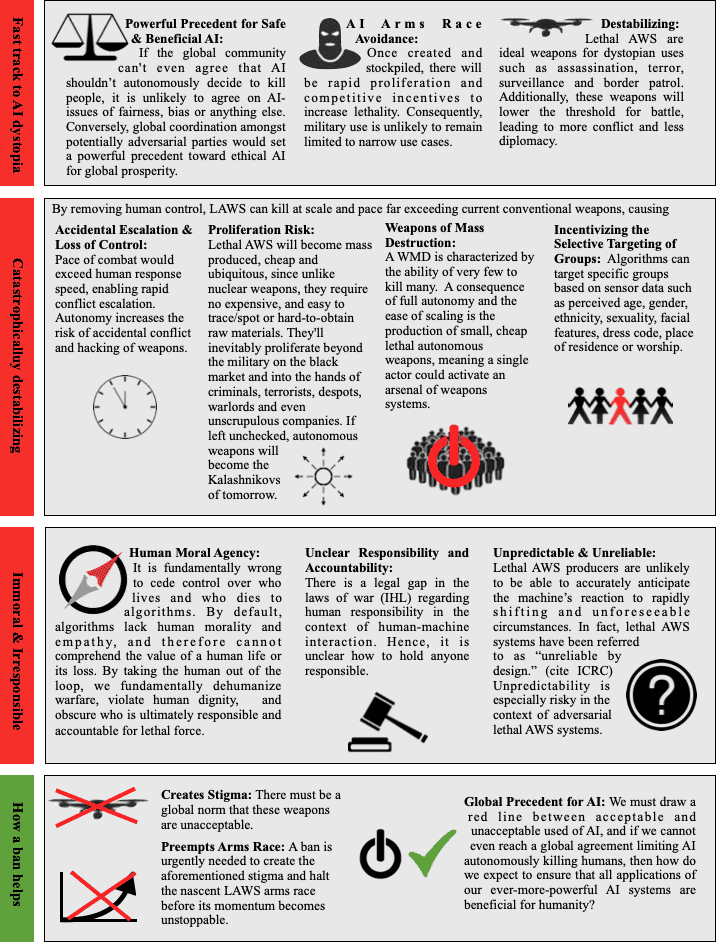

10 Reasons Why Autonomous Weapons Must be Stopped