Iason Gabriel on Foundational Philosophical Questions in AI Alignment

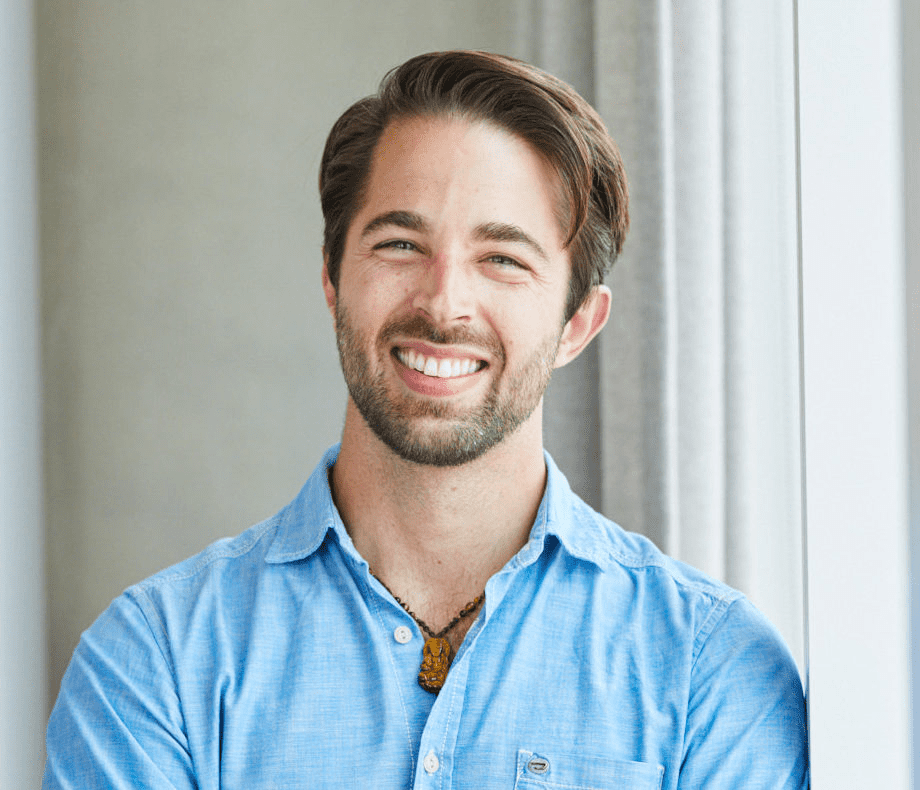

In the contemporary practice of many scientific disciplines, questions of values, norms, and political thought rarely explicitly enter the picture. In the realm of AI alignment, however, the normative and technical come together in an important and inseparable way. How do we decide on an appropriate procedure for aligning AI systems to human values when there is disagreement over what constitutes a moral alignment procedure? Choosing any procedure or set of values with which to align AI brings its own normative and metaethical beliefs that will require close examination and reflection if we hope to succeed at alignment. Iason Gabriel, Senior Research Scientist at DeepMind, joins us on this episode of the AI Alignment Podcast to explore the interdependence of the normative and technical in AI alignment and to discuss his recent paper Artificial Intelligence, Values and Alignment.

Topics discussed in this episode include:

- How moral philosophy and political theory are deeply related to AI alignment

- The problem of dealing with a plurality of preferences and philosophical views in AI alignment

- How the is-ought problem and metaethics fit into alignment

- What we should be aligning AI systems to

- The importance of democratic solutions to questions of AI alignment

- The long reflection

Timestamps:

0:00 Intro

2:10 Why Iason wrote Artificial Intelligence, Values and Alignment

3:12 What AI alignment is

6:07 The technical and normative aspects of AI alignment

9:11 The normative being dependent on the technical

14:30 Coming up with an appropriate alignment procedure given the is-ought problem

31:15 What systems are subject to an alignment procedure?

39:55 What is it that we're trying to align AI systems to?

01:02:30 Single agent and multi agent alignment scenarios

01:27:00 What is the procedure for choosing which evaluative model(s) will be used to judge different alignment proposals

01:30:28 The long reflection

01:53:55 Where to follow and contact Iason

Citations:

Artificial Intelligence, Values and Alignment

Iason Gabriel's Google Scholar

We hope that you will continue to join in the conversations by following us or subscribing to our podcasts on Youtube, Spotify, SoundCloud, iTunes, Google Play, Stitcher, iHeartRadio, or your preferred podcast site/application. You can find all the AI Alignment Podcasts here.

Transcript

Lucas Perry: Welcome to the AI Alignment Podcast. I’m Lucas Perry. Today, we have a conversation with Iason Gabriel about a recent paper that he wrote titled Artificial Intelligence, Values and Alignment. This episode primarily explores how moral and political theory are deeply interconnected with the technical side of the AI alignment problem, and important questions related to that interconnection. We get into the problem of dealing with a plurality of preferences and philosophical views, the is-ought problem, metaethics, how political theory can be helpful for resolving disagreements, what it is that we’re trying to align AIs to, the importance of establishing a broadly endorsed procedure and set of principles for alignment, and we end on exploring the long reflection.

This was a very fun and informative episode. Iason has succeeded in bringing new ideas and thought to the space of moral and political thought in AI alignment, and I think you’ll find this episode enjoyable and valuable. If you don’t already follow us, you can subscribe to this podcast on your preferred podcasting platform by searching for The Future of Life or following the links on the page for this podcast.

Iason Gabriel is a Senior Research Scientist at DeepMind where he works in the Ethics Research Team. His research focuses on the applied ethics of artificial intelligence, human rights, and the question of how to align technology with human values. Before joining DeepMind, Iason was a Fellow in Politics at St John’s College, Oxford. He holds a doctorate in Political Theory from the University of Oxford and spent a number of years working for the United Nations in post-conflict environments.

And with that, let’s get into our conversation with Iason Gabriel.

So we're here today to discuss your paper, Artificial Intelligence, Values and Alignment. To start things off here, I'm interested to know what you found so compelling about the problem of AI values and alignment, and generally, just what this paper is all about.

Iason Gabriel: Yeah. Thank you so much for inviting me, Lucas. So this paper is in broad brush strokes about how we might think about aligning AI systems with human values. And I wrote this paper because I wanted to bring different communities together. So on the one hand, I wanted to show machine learning researchers that there were some interesting normative questions about the value configuration we align AI with that deserve further attention. At the same time, I was keen to show political and moral philosophers that AI was a subject that provoked real philosophical reflection, and that this is an enterprise that is worthy of their time as well.

Lucas Perry: Let's pivot into what the problem is then that technical researchers and people interested in normative questions and philosophy can both contribute to. So what is your view then on what the AI problem is, and on the two parts you believe it to be composed of?

Iason Gabriel: In broad brush strokes, I understand the challenge of value alignment in a way that's similar to Stuart Russell. He says that the ultimate aim is to ensure that powerful AI is properly aligned with human values. I think that when we reflect upon this in more detail, it becomes clear that the problem decomposes into two separate parts. The first is the technical challenge of trying to align powerful AI systems with human values. And the second is the normative question of what or whose values we try to align AI systems with.

Lucas Perry: Oftentimes, I also see a lot of reflection on AI policy and AI governance as being a core issue to also consider here, given that people are concerned about things like race dynamics and unipolar versus multipolar scenarios with regards to something like AGI, what are your thoughts on this? And I'm curious to know why you break it down into technical and normative without introducing political or governance issues.

Iason Gabriel: Yeah. So this is a really interesting question, and I think it's one that we'll probably discuss at some length later, about the role of politics in creating aligned AI systems. Of course, in the paper, I suggest that an important challenge for people who are thinking about value alignment is how to reconcile the different views and opinions of people, given that we live in a pluralistic world, and how to come up with a system for aligning AI systems that treats people fairly despite that difference. In terms of practicalities, I think that people envisage alignment in different ways. Some people imagine that there will be a human parliament or a kind of centralized body that can give very coherent and sound value advice to AI systems. And essentially, that the human element will take care of this problem with pluralism and just give AI very, very robust guidance about the things that we've all agreed are the best thing to do.

At the same time, there's many other visions for AI or versions of AI that don't depend upon that human parliament being able to offer such cogent advice. So we might think that there are worlds in which there's multiple AIs, each of which has a human interlocutor, or we might imagine AIs as working in the world to achieve constructive ends and that it needs to actually be able to perform these value calculations or this value synthesis as part of its kind of default operating procedure. And I think it's an open question what kind of AI system we're discussing and that probably the political element understood in terms of real world political institutions will need to be tailored to the vision of AI that we have in question.

Lucas Perry: All right. So can you expand then a bit on the relationship between the technical and normative aspects of AI alignment?

Iason Gabriel: A lot of the focus is on the normative part of the value alignment question, trying to work out which values to align AI systems with, whether it is values that really matter and how this can be decided. I think this is also relevant when we think about the technical design of AI systems, because I think that most technologies are not value agnostic. So sometimes, when we think about AI systems, we assume that they'll have this general capability and that it will almost be trivially easy for them to align with different moral perspectives or theories. Yet when we take a ground level view and we look at the way in which AI systems are being built, there's various path dependencies that are setting in and there's different design architectures that will make it easier to follow one moral trajectory rather than the other.

So for example, if we take a reinforcement learning paradigm, which focuses on teaching agents tasks by enabling them to maximize reward in the face of uncertainty over time, a number of commentators have suggested that that model fits particularly well with a kind of utilitarian decision theory, which aims to promote happiness over time in the face of uncertainty, and that it would actually struggle to accommodate a moral theory that embodies something like rights or hard constraints. And so I think that if what we do want is a rights based vision of artificial intelligence, it's important that we get that ideal clear in our minds and that we design with that purpose in mind.

This challenge becomes even clearer when we think about moral philosophies, such as a Kantian theory, which would ask an agent to reflect on the reasons that it has for acting, and then ask whether they universalize to good states of affairs. And this idea of using the currency of a reason to conduct moral deliberation would require some advances in terms of how we think about AI, and it's not something that is very easy to get a handle on from a technical point of view.
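The contrast drawn above between reward maximization and rights or hard constraints can be illustrated with a small Python sketch. It is only a toy under stated assumptions: the `Action` class, the `violates_right` flag, and the reward numbers are hypothetical and do not come from the paper or the conversation. The sketch shows that a pure maximizer trades everything off against expected reward, while a constrained chooser treats a right as a filter that no amount of reward can override.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Action:
    name: str
    expected_reward: float  # hypothetical stand-in for an aggregate welfare estimate
    violates_right: bool    # hypothetical flag: does the action break a hard constraint?


def choose_by_reward(actions: List[Action]) -> Action:
    """Pure maximizer: picks the highest expected reward and ignores constraints."""
    return max(actions, key=lambda a: a.expected_reward)


def choose_with_constraints(actions: List[Action]) -> Optional[Action]:
    """Constrained chooser: rights filter the options before any maximization."""
    permitted = [a for a in actions if not a.violates_right]
    if not permitted:
        return None  # no permissible action exists, so the agent declines to act
    return max(permitted, key=lambda a: a.expected_reward)


# Illustrative values only.
ACTIONS = [
    Action("coerce", expected_reward=10.0, violates_right=True),
    Action("persuade", expected_reward=7.0, violates_right=False),
    Action("do_nothing", expected_reward=0.0, violates_right=False),
]

if __name__ == "__main__":
    print(choose_by_reward(ACTIONS).name)
    print(choose_with_constraints(ACTIONS).name)
```

In this toy, the constraint lives outside the reward signal. Folding it into the reward as a large penalty would turn it back into something that can be outweighed, which is one way to read the worry that a reward-driven design struggles to represent rights.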

Lucas Perry: So the key takeaway here is that what is going to be possible in terms of the normative and in terms of moral learning and moral reasoning in AI systems will supervene upon technical pathways that we take, and so it is important to be mindful of the relationship between what is possible normatively, given what is technically known, and to try and navigate that with mindfulness about that relationship?

Iason Gabriel: I think that's precisely right. I see at least two relationships here. So the first is that if we design without a conception of value in mind, it's likely that the technology that we build will not be able to accommodate any value constellation. And then the mirror side of that is if we have a clear value constellation in mind, we may be able to develop technologies that can actually implement or realize that ideal more directly and more effectively.

Lucas Perry: Can you make a bit more clear the ways in which, for example, path dependency of current technical research makes certain normative ethical theories more plausible to be instantiated in AI systems than others?

Iason Gabriel: Yeah. So, I should say that obviously, there's a wide variety of different methodologies that are being tried at the present moment, and that intuitively, they seem to match up well with different kinds of theory. Of course, the reality is a lot of effort has been spent trying to ensure that AI systems are safe and that they are aligned with human intentions. When it comes to richer goals, so trying to evidence a specific moral theory, a lot of this is conjecture because we haven't really tried to build utilitarian or Kantian agents in full. But I think in terms of the details, so with regards to reinforcement learning, we obviously have an optimization driven process, and there is that whole corpus of moral theories that basically use that decision process to achieve good states of affairs. And we can imagine roughly equating the reward that we use to train an RL agent with some metric of subjective happiness, or something like that.

Now, if we were to take a completely different approach, so say, virtue ethics, virtue ethics is radically contextual, obviously. And it says that the right thing to do in any situation is the action that evidences certain qualities of character and that these qualities can't be expressed through a simple formula that we can maximize for, but actually require a kind of context dependence. So I think that if that's what we want, if we want to build agents that have a virtuous character, we would really need to think about the fundamental architecture potentially in a different way. And I think that that kind of insight has actually been speculatively adopted by people who consider forms of machine learning, like inverse reinforcement learning, and who imagine that we could present an agent with examples of good behavior and that the agent would then learn them in a very nuanced way without us ever having to describe in full what the action was or give it appropriate guidance for every situation.

So, as I said, these really are quite tentative thoughts, but it doesn't seem at present possible to build an AI system that adapts equally well to whatever moral theory or perspective we believe ought to be promoted or endorsed.

Lucas Perry: Yeah. So, that does make sense to me that different techniques would be more or less skillful for more readily and fully adopting certain normative perspectives and capacities in ethics. I guess the part that I was just getting a little bit tripped up on is that I was imagining that if you have an optimizer being trained off something like maximize happiness, then, given the massive epistemic difficulties of running an actual utilitarian optimization process that is only thinking at the level of happiness, and how impossibly difficult that would be, it would, like human beings who are consequentialists, through gradient descent or being pushed and nudged from the outside or something, find virtue ethics and deontological ethics, and those could then be run as a part of its world model, such that it makes the task of happiness optimization much easier. But I see how intuitively it more obviously lines up with utilitarianism and then how it would be more difficult to get it to find other things that we care about, like virtue ethics or deontological ethics. Does that make sense?

Iason Gabriel: Yeah. I mean, it's a very interesting conjecture that if you set an agent off with the learned goal of trying to maximize human happiness, it would almost, by necessity, learn to accommodate other moral theories and perspectives. It kind of suggests that there is a core driver, which animates moral inquiry, which is this idea of collective welfare being realized in a sustainable way. And that might be plausible from an evolutionary point of view, but there's also other aspects of morality that don't seem to be built so clearly on what we might even call the pleasure principle. And so I'm not entirely sure that you would actually get to a rights based morality if you started out from those premises.

Lucas Perry: What are some of these things that don't line up with this pleasure principle, for example?

Iason Gabriel: I mean, of course, utilitarians have many sophisticated theories about how endeavors to improve total aggregate happiness involve treating people fairly, placing robust side constraints on what you can do to people and potentially, even encompassing other goods, such as animal welfare and the wellbeing of future generations. But I believe that the consensus or the preponderance of opinion is that actually, unless we can say that certain things matter fundamentally, for example, human dignity or the wellbeing of future generations or the value of animal welfare, it is quite hard to build a moral edifice that adequately takes these things into account just through instrumental relationships with human wellbeing or human happiness so understood.

Lucas Perry: So then we have this technical problem of how to build machines that have the capacity to do what we want them to do and to help us figure out what we would want to get the machines to do. An important problem that comes in here is the is-ought distinction by Hume. On one hand, we have is statements, facts about the world; we can even have is statements about people's preferences and meta-preferences and the collective state of all normative and meta-ethical views on the planet at a given time. On the other hand, we have ought, which is a normative claim synonymous with should and is kind of the basis of morality. There is a tension there between what assumptions we might need to get morality off of the ground and how we should interact with a world of facts and a world of norms, and how they may or may not relate to each other for creating a science of wellbeing, or not even doing that. So how do you think of coming up with an appropriate alignment procedure that is dependent on the answer to this distinction?

Iason Gabriel: Yeah, so that's a fascinating question. So I think that the is-ought distinction is quite fundamental and it helps us answer one important query, which is whether it's possible to solve the value alignment question simply through an empirical investigation of people's existing beliefs and practices. And if you take the is-ought distinction seriously, it suggests that no matter what we can infer from studies of what is already the case, so what people happen to prefer or happen to be doing, we still have a further question, which is should that perspective be endorsed? Is it actually the right thing to do? And so there's always this critical gap. It's a space for moral reflection and moral introspection and a place in which error can arise. So we might even think that if we studied all the global beliefs of different people and found that they agreed upon certain axioms or moral properties, we could still ask, are they correct about those things? And if we look at historical beliefs, we might think that there was actually a global consensus on moral beliefs or values that turned out to be mistaken.

So I think that these endeavors to kind of synthesize moral beliefs to understand them properly are very, very valuable resources for moral theorizing. It's hard to think where else we would begin, but ultimately, we do need to ask these questions about value more directly and ask whether we think that the final elucidation of an idea is something that ought to be promoted.

So in sum, it has a number of consequences, but I think one of them is that we do need to maintain a space for normative inquiry and value alignment can't just be addressed through an empirical social scientific perspective.

Lucas Perry: Right, because one's own perspective on the is-ought distinction and whether and how it is valid will change how one goes about learning and evolving normative and meta-ethical thinking.

Iason Gabriel: Yeah. Perhaps at this point, an example will be helpful. So, suppose we're trying to train a virtuous agent that has these characteristics of treating people fairly, demonstrating humility, wisdom, and things of that nature. Suppose we can't specify these upfront and we do need a training set, so we need to present the agent with examples of what people believe evidences these characteristics. We still have the normative question of what goes into that data set and how we decide. So, the evaluative questions get passed on to that. Of course, we've seen many examples of data sets being poorly curated and containing bias that then transmutes onto the AI system. We either need to have data that's curated so that it meets independent moral standards and the AI learns from that data, or we need to have a moral ideal that is freestanding in some sense and that AI can be built to align with.

Lucas Perry: Let's try and make that even more concrete because I think this is a really interesting and important problem about why the technical aspect is deeply related with philosophical thinking about this is-ought problem. So at the highest level of abstraction, like starting with axioms around here, if we have is statements about datasets, and so data sets are just information about the world, the data sets are the is statements, we can put whatever is statements into a machine and the machine can take the shape of those values already embedded and codified in the world in people's minds or in our artifacts and culture. And then the ought question, as you said, is what information in the world should we use? And to understand what information we should use requires some initial principle, some set of axioms that bridges the is-ought gap.

So for example, the kind of move that I think Sam Harris tries to lay out is this axiom: we should avoid the worst possible misery for everyone. You may or may not agree with that axiom, but that is the starting point for how one might bridge the is-ought gap to be able to select for which data is better than other data or which data we should load onto AI systems. So I'm curious to know, how is it that you think about this very fundamental level of initial axiom or axioms that are meant to bridge this distinction?

Iason Gabriel: I think that when it comes to these questions of value, we could try and build up from these kinds of very, very minimalist assumptions of the kind that it sounds like Sam Harris is defending. We could also start with richer conceptions of value that seem to have some measure of widespread assent and reflective endorsement. So I think, for example, the idea that human life matters or that sentient life matters, that it has value and hence, that suffering is bad is a really important component of that. I think that conceptions of fairness, of what people deserve in light of that equal moral standing, are also an important part of the moral content of building an aligned AI system. And I would tend to try and be inclusive in terms of the values that we canvass.

So I don't think that we actually need to take this very defensive posture. I think we can think expansively about the conception and nature of the good that we want to promote and that we can actually have meaningful discussions and debate about that so we can put forward reasons for defending one set of propositions in comparison with another.

Lucas Perry: We can have epistemic humility here, given the history of moral catastrophes and how morality continues to improve and change over time and that surely, we do not sit at a peak of moral enlightenment in 2020. So given our epistemic humility, we can cast a wide net around many different principles so that we don't lock ourselves into anything and can endorse a broad notion of good, which seems safer, but perhaps has some costs in itself for allowing and being more permissive of a wide range of moral views that may not be correct.

Iason Gabriel: I think that's, broadly speaking, correct. We definitely shouldn't tether artificial intelligence too narrowly to the morality of the present moment, given that we may be, and probably are, making moral mistakes of one kind or another. And I think that this thing that you spoke about, a kind of global conversation about value, is exactly right. I mean, if we take insights from political theory seriously, then the philosopher, John Rawls, suggests that a fundamental element of the present human condition is what he calls the fact of reasonable pluralism, which means that when people are not coerced and when they're able to deliberate freely, they will come to different conclusions about what ultimately has moral value and how we should characterize ought statements, at least when they apply to our own personal lives.

So if we start from that premise, we can then think about AI as a shared project and ask this question: given that we do need values in the equation, and that we can't just do some kind of descriptive enterprise and expect that to tell us what kind of system to build, what kind of arrangement adequately factors in people's different views and perspectives, and seems like a solution to value alignment built upon the relevant kind of consensus, one that then allows us to realize a system that can reconcile these different moral perspectives and takes a variety of different values and synthesizes them in a scheme that we would all like?

Lucas Perry: I just feel broadly interested in just introducing a little bit more of the debate and conceptions around the is-ought problem, right? Because there are some people who take it very seriously and other people who try to minimize it or are skeptical of it doing the kind of philosophical work that many people think that it's doing. For example, Sam Harris is a big skeptic of the kind of work that the is-ought problem is doing. And in this podcast, we've had people on who are, for example, realists about consciousness, and there's just a very interesting broad range of views about value that inform the is-ought problem. If one's a realist about consciousness and thinks that suffering is the intrinsic valence carrier of disvalue in the universe, and that joy is the intrinsic valence carrier of wellbeing, one can have different views on how that even translates to normative ethics and morality and how one does that, given one's view on the is-ought problem.

So, for example, if we take that kind of metaphysical view about consciousness seriously, and if we take the is-ought problem seriously, then even though there are actually bad things in the world, like suffering, it would still require some kind of axiom to bridge the is-ought distinction. So because pain is bad, we ought to avoid it. And that's interesting and important and a question that is at the core of unifying ethics and all of our endeavors in life. And if you don't take the is-ought problem seriously, then you can just be like, because I understand the way that the world is, by the very nature of being a sentient being and understanding the nature of suffering, there's no question about the kind of navigation problem that I have. Even in the very long-term, the answer to how one might resolve the is-ought problem would potentially be a way of unifying all of knowledge and endeavor. All the empirical sciences would be unified conceptually with the normative, right? And then there are no more conceptual issues.

So, I think I'm just trying to illustrate the power of this problem and distinction, it seems.

Iason Gabriel: It's a very interesting set of ideas. To my mind, these kinds of arguments about the intrinsic badness of pain, or kind of naturalistic moral arguments, are very strong ways of arguing against, say, a moral relativist or moral nihilist, but they don't necessarily circumvent the is-ought distinction. Because, for example, the claim that pain is bad is referring to a normative property. So you can say pain is bad and therefore it shouldn't be promoted, but that's completely compatible with believing that we can't deduce moral arguments from purely descriptive premises. So I don't really believe that the is-ought distinction is a problem. I think that it's always possible to make arguments about values and that that's precisely what we should be doing. And the fact that that needs to be conjoined with empirical data in order to then arrive at sensible judgments and practical reason about what ought to be done is a really satisfactory state of affairs.

I think one kind of interesting aspect of the vision you put forward was this idea of a kind of unified moral theory that everyone agrees with. And I guess it does touch upon a number of arguments that I make in the paper, where I juxtapose two slightly stylized descriptions of solutions to the value alignment challenge. The first one is, of course, the approach that I term the true moral theory approach, which holds that we do need a period of prolonged reflection and we reflect fundamentally on these questions about pain and perhaps other very deep normative questions. And the idea is that by using tools from moral philosophy, eventually, although we haven't done it yet, we may identify a true moral theory. And then it's a relatively simple... well, not simple from a technical point of view, but simple from a normative point of view task, of aligning AI, maybe even AGI, with that theory, and we've basically solved the value alignment problem.

So in the paper, I argue against that view quite strongly for a number of reasons. The first is that I'm not sure how we would ever know that we'd identified this true moral theory. Of course, many people throughout history have thought that they've discovered this thing and often gone on to do profoundly unethical things to other people. And I'm not sure how, even after a prolonged period of time, we would actually have confidence that we had arrived at the really true thing and that we couldn't still ask the question, am I right?

But even putting that to one side, suppose that I had not just confidence, but justified confidence that I really had stumbled upon the true moral theory and perhaps with the help of AI, I could look at how it plays out in a number of different circumstances, and I realize that it doesn't lead to these kind of weird, anomalous situations that most existing moral theories point towards, and so I really am confident that it's a good one, we still have this question of what happens when we need to persuade other people that we've found the true moral theory and whether that is a further condition on an acceptable solution to the value alignment problem. And in the paper, I say that it is a further condition that needs to be satisfied because just knowing, well, supposedly having access to justified belief in a true moral theory, doesn't necessarily give you the right to impose that view upon other people, particularly if you're building a very powerful technology that has world shaping properties.

And if we return to this idea of reasonable pluralism that I spoke about earlier, essentially, the core claim is that unless we coerce people, we can't get to a situation where everyone agrees on matters of morality. We could flip it around. It might be that someone already has the true moral theory out there in the world today and that we're the people who refuse to accept it for different reasons. I think the question then is how do we believe other people should be treated by the possessor of the theory, or how do we believe that person should treat us?

Now, one view, which I guess in political philosophy is often attributed to Jean-Jacques Rousseau, is that if you have this really good theory, you're justified in coercing other people to live by it. He says that people should be forced to be free when they're not willing to accept the truth of the moral theory. Of course, it's something that has come in for fierce criticism. I mean, my own perspective is that actually, we need to try and minimize this challenge of value imposition for powerful technologies because it becomes a form of domination. So the question is how can we solve the value alignment problem in a way that avoids this challenge of domination? And in that regard, we really do need tools from political philosophy, which, particularly within the liberal tradition, has tried to answer this question of how can we all live together on reasonable terms that preserve everyone's capacity to flourish, despite the fact that we have variation in what we ultimately believe to be just, true and right.

Lucas Perry: So to bring things a bit back to where we're at today and how things are actually going to start changing in the real world as we move forward. What do you view as the kinds of systems that would be, and are subject to something like an alignment procedure? Does this start with systems that we currently have today? Does it start with systems soon in the future? Should it have been done with systems that we already have today, but we failed to do so? What is your perspective on that?

Iason Gabriel: To my mind, the challenge of value alignment is one that exists for the vast majority, if not all technologies. And it's one that's becoming more pronounced as these technologies demonstrate higher levels of complexity and autonomy. So for example, I believe that many existing machine learning systems encounter this challenge quite forcefully, and that we can ask meaningful questions about it. So I think in previous discussion, we may have had this example of a recommendation system come to light. And even if we think of something that seems really quite prosaic, so say a recommendation system for what films to watch or what content to be provided to you, I think the value alignment question actually looms large because it could be designed to do very different things. On the one hand, we might have a recommendation system that's geared around your current first order preferences. So it might continuously give you really stimulating, really fun, low quality content that kind of keeps you hooked to the system and with a high level of subjective wellbeing, but perhaps something that isn't optimum in other regards. Then we can think about other possible goals for alignment.

So we might say that actually these systems should be built to serve your second order desires. Those are desires that in philosophy, we would say that people reflectively endorse, they're desires about the person you want to be. So if we were to build a recommendation system with that goal in mind, it might be that instead of watching this kind of cheap and cheerful content, I decided that I'd actually like to be quite a high brow person. So it starts kind of tacitly providing me with more art house recommendations. But even that doesn't exhaust the options. It might be that the system shouldn't really be just trying to satisfy my preferences, that it should actually be trying to steer me in the direction of knowledge and things that are in my interest to know. So it might try and give me new skills that I need to acquire, might try and recommend, I don't know, cooking or self improvement programs.

That would be a system that was, I guess, geared toward my own interest. But even that again, doesn't give us a complete portfolio of options. Maybe what we want is a morally aligned system that actually enhances our capacity for moral decision making. And then perhaps that would lead us somewhere completely different. So instead of giving us this content that we want, it might lead us to content that leads us to engage with challenging moral questions, such as factory farming or climate change. So, value alignment kind of arises quite early on. This is of course, with the assumption that the recommendation system is geared to promote your interest or wellbeing or preference or moral sensibility. There's also the question of whether it's really promoting your goals and aspirations or someone else's. And in science and technology studies there's a big area of value sensitive design, which essentially says that we need to consult people and have these almost democratic discussions early on about the kind of values we want to embody in systems.

And then we design with that goal in mind. So, recommendation systems are one thing. Of course, if we look at public institutions, say a criminal justice system, there, we have a lot of public uproar and discussion about the values that would make a system like that fair. And the challenge then is to work out whether there is a technical approximation of these values that satisfactorily realizes them in a way that conduces to some vision of the public good. So in sum, I think that value alignment challenges exist everywhere, and then they become more pronounced when these technologies become more autonomous and more powerful. So as they have more profound effects on our lives, the burden of justification in terms of the moral standards that are being met becomes more exacting. And the kind of justification we can give for the design of a technology becomes more important.

Lucas Perry: I guess, to bring this back to things that exist today. Something like YouTube or Facebook is a very rudimentary, very basic kind of first order preference satisfier. I mean, imagine all of the human life years that have been wasted, mindlessly consuming content that's not actually good for us. Whereas imagine, I guess some kind of enlightened version of YouTube where it knows enough about what is good and about yourself and what you would reflectively and ideally endorse and the kind of person that you wish you could be and that you would be only if you knew better and how to get there. So, there's the difference between that second kind of system and the first system, where one is just giving you all the best cat videos in the world, and the second one is turning you into the person that you always wish you could have been. I think this clearly demonstrates that even systems that seem mundane could be serving us in much deeper ways and at much deeper levels. And that even when they superficially serve us they may be doing harm.

Iason Gabriel: Yeah, I think that's a really profound observation. I mean, when we really look at the full scope of value or the full picture of the kinds of values we could seek to realize when designing technologies and incorporating them into our lives, often there's a radically expansive picture that emerges. And this touches upon a kind of taxonomic distinction that I introduce in the paper between minimalist and maximalist conceptions of value alignment. So when we think about AI alignment questions, the minimalist says we have to avoid very bad outcomes. So it's important to build safe systems. And then we just need them to reside within some space of value that isn't extremely negative and could take a number of different constellations. Whereas the maximalist says, "Well, let's actually try and design the very best version of these technologies from a moral point of view, from a human point of view."

And they say that even if we design safe technologies, we could still be leaving a lot of value out there on the table. So a technology could be safe, but still not that good for you or that good for the world. And let's aim to populate that space with more positive and richer visions of the future. And then try to realize those through the technologies that we're building. As we want to realize richer visions of human flourishing, it becomes more important that it isn't just a personal goal or vision, but it's one that is collectively endorsed, has been reflected upon and is justifiable from a variety of different points of view.

Lucas Perry: Right. And I guess it's just also interesting and valuable to reflect briefly on how there is already in each society, a place where we draw the line at value imposition, and we have these principles, which we've agreed upon broadly, but we're not going to let Ted Bundy do what Ted Bundy wants to do.

Iason Gabriel: That's exactly right. So we have hard constraints, some of which are kind of set in law. And clearly those are constraints that, if these are just laws, the AI systems need to respect. There's also a huge possible space of better outcomes that are left open once we look at where moral constraints are placed and where they reside. I think that the Ted Bundy example is interesting because it also shows that we need to discount the preferences and desires of certain people.

One vision of AI alignment says that it's basically a global preference aggregation system that we need, but in reality, there's a lot of preferences that just shouldn't be counted in the first place because they're unethical or they're misinformed. So again, that kind of to my mind pushes us in this direction of a conversation about value itself. And once we know what the principled basis for alignment is, we can then properly adjudicate cases like that and work out what a kind of valid input for an aligned system is and what things we need to discount if we want to realize good moral outcomes.

Lucas Perry: I'm not going to try and pin you down too hard on that because there's the tension here, of course, between the importance of liberalism, not coercing value judgments on anyone, but then also being like, "Well, we actually have to do it in some places." And that line is a scary one to move in either direction. So, I want to explore more now the different understandings of what it is that we're trying to align AI systems to. So broadly people and I use a lot of different words here without perhaps being super specific about what we mean, people talk about values and intentions and idealized preferences and things of this nature. So can you be a little bit more specific here about what you take to be the goal of AI alignment, the goal of it being, what is it that we're trying to align systems to?

Iason Gabriel: Yeah, absolutely. So we've touched upon some of these questions already tacitly in the preceding discussion. Of course, in the paper, I argue that when we talk about value alignment, this idea of value is often a placeholder for quite different ideas, as you said. And I actually present a taxonomy of options that I can take us through in a fairly thrifty way. So, I think the starting point for creating aligned AI systems is this idea that we want AI that's able to follow our instructions, but that has a number of shortcomings, which Stuart Russell and others have documented, which tend to center around this challenge of excessive literalism. So if an AI system literally does what we ask it to, without an understanding of context, side constraints and nuance, often this will lead to problematic outcomes, with the story of King Midas being the classic cautionary tale. He wishes that everything he touches turns to gold, everything turns to gold, and then you have a disaster of one kind or another.

So of course, instructions are not sufficient. What you really want is AI that's aligned with the underlying intention. So, I think that often in the podcast, people have talked about intention alignment as an important goal of AI systems. And I think that is precisely right to dedicate a lot of technical effort to close the gap between a kind of idiot savant AI that perceives just instructions in this dumb way and the kind of more nuanced, intelligent AI that can follow an intention. But we might wonder whether aligning AI with an individual or collective intention is actually sufficient to get us to the really good outcomes, the kind of maximalist outcomes that I'm talking about. And I think that there's a number of reasons why that might not be the case. So of course, to start with, just because an AI can follow an intention, doesn't say anything about the quality of the intention that's being followed.

We can form intentions on an individual or collective basis to do all kinds of things, some of which might be incredibly foolish or malicious, some of which might be self-harming, some of which might be unethical. And we've got to ask this question of whether we want AI to follow us down that path when we come up with schemes of that kind, and there's various ways we might try to address that bundle of problems. I think intentions are also problematic from a kind of technical and phenomenological perspective because they tend to be incomplete. So if we look at what an intention is, it's roughly speaking a kind of partially filled out plan of action that commits us to some end. And if we imagine the AI systems are very powerful, they may encounter situations or dilemmas or option sets that are in this space of uncertainty, where it's just not clear what the original intention was, and they might need to make the right kind of decision by default.

So they might need some intuitive understanding of what the right thing to do is. So my intuition is that we do want AI systems that have some kind of richer understanding of the goals that we would want to realize in whole. So I think that we do need to look at other options. It is also possible that we had formed the intention for the AI to do something that explicitly requires an understanding of morality. So we may ask it to do things like promote the greatest good in a way that is fundamentally ethical. Then it needs to step into this other terrain of understanding preferences, interests, and values. I think we need to explore that terrain for one reason or another. Of course, one thing that people talk about is this kind of learning from revealed preferences. So perhaps in addition to the things that we directly communicate, the AI could observe our behavior and make inferences about what we want that help fill in the gaps.

So maybe it could watch you in your public life, hopefully not private life, and make these inferences that actually it should create this very good thing. So that is in the domain of trying to learn from things that it observes. But I think that preferences are also quite a worrying data point for AI alignment, at least revealed preferences, because they contain many of the same weaknesses and shortcomings that we can ascribe to individual intentions.

Lucas Perry: What is a revealed intention again?

Iason Gabriel: Sorry, revealed preferences are preferences that are revealed through your behavior. So I observe you doing A or B. And from that choice, I conclude that you have a deeper preference for the thing that you choose. And the question is, if we just watch people, can we learn all the background information we need to create ethical outcomes?

Lucas Perry: Yeah. Absolutely not.

Iason Gabriel: Yeah. Exactly. As your Ted Bundy example, nicely illustrated, not only is it very hard to actually get useful information from observing people about what they want, but what they want can often be the wrong kind of thing for them or for other people.

Lucas Perry: Yeah. I have to hire people to spend some hours with me every week to tell me from the outside, how I may be acting in ways that are misinformed or self-harming. So instead of revealed preferences, we need something like rational or informed preferences, which is something you get through therapy or counseling or something like that.

Iason Gabriel: Well, that's an interesting perspective. I guess there's a lot of different theories about how we get to ideal preferences, but the idea is that we don't want to just respond to what people are in practice doing. We want to give them the sort of thing that they would aspire to if they were rational and informed at the very least. So not things that are just a result of mistaken reasoning or poor quality information. And then there's this very interesting philosophical and psychological question about what the content of those ideal preferences is, and particularly what happens when you think about people being properly rational. So, to return to David Hume, to whom the is-ought distinction is often attributed, he has the conjecture that someone can be fully informed and rational and still desire pretty much anything at the end of the day, that they could want something hugely destructive for themselves or other people.

Of course, Kantians, and in fact a lot of moral philosophers, believe that rationality is not just a process of joining up beliefs and value statements in a certain fashion, but that it also encompasses a substantive capacity to evaluate ends. So, obviously Kantians have a theory about rationality ultimately requiring you to reflect on your ends and ask if they universalize in a positive way. But the thing is that's highly, highly contested. So I think ultimately if we say we want to align AI with people's ideal and rational preferences, it leads us into this question of what rationality really means. And we don't necessarily get the kind of answers that we want to get to.

Lucas Perry: Yeah, that's a really interesting and important thing. I've never actually considered that. For example, someone who might be a moral anti-realist would probably be more partial to the view that rationality is just about linking up beliefs and epistemics and decision theory with goals, and goals are something that you're just given and embedded with. And that there isn't some correct evaluative procedure for analyzing goals beyond whatever meta preferences you've already inherited. Whereas a realist might say something like the other view, where rationality is about beliefs and ends, but also about perhaps a more concrete standard method for evaluating which ends are good ends. Is that the way you view it?

Iason Gabriel: Yeah, I think that's a very nice summary. The people who believe in substantive rationality tend to be people with a more realist moral disposition. If you're profoundly anti-realist, you basically think that you have to stop talking in the currency of reasons. So you can't tell people they have a reason not to act in a kind of unpleasant way to each other, or even to do really heinous things. You have to say to them something different, like, "Wouldn't it be nice if we could realize this positive state of affairs?" And I think ultimately we can get to views about value alignment that satisfy these two different groups. We can create aspirations that are well-reasoned from different points of view and also create scenarios that meet the kind of "Wouldn't it be nice" criteria. But I think it isn't going to happen if we just double down on this question of whether rationality ultimately leads to a single set of ends or a plurality of ends, or no consensus whatsoever.

Lucas Perry: All right. That's quite interesting. Not only do we have difficult and interesting philosophical ground in ethics, but also in rationality and how these are interrelated.

Iason Gabriel: Absolutely. I think they're very closely related. So actually the problems we encounter in one domain, we also encounter in the other, and I'd say in my kind of lexicon, they all fall within this question of practical rationality and practical reason. So that's deliberating about what we ought to do either because of explicitly moral considerations or a variety of other things that we factor up in judgements of that kind.

Lucas Perry: All right. Two more on our list here to hit are interests and values.

Iason Gabriel: So, I think there are one or two more things we could say about that. So if we think that one of the challenges with ideal preferences is that they lead us into this heavily contested space about what rationality truly requires, we might think that a conception of human interests does significantly better. So if we think about AI being designed to promote human interests or wellbeing or flourishing, I would suggest that as a matter of empirical fact, there's significantly less disagreement about what that entails. So if we look at say the capability based approach that Amartya Sen and Martha Nussbaum have developed, it essentially says that there's a number of key goods and aspects of human flourishing that the vast majority of people believe conduce to a good life. And that actually has some intercultural value and affirmation.

So if we designed AI that bore in mind this goal of enhancing general human capabilities, so human freedom, physical security, emotional security, capacity, that looks like an AI that is both, roughly speaking, getting us into the space of something that looks like it's unlocking real value, and also isn't bogged down in a huge amount of metaphysical contention. I suggest that aligning AI with human interests or wellbeing is a good proximate goal when it comes to value alignment. But even then I think that there's some important things that are missing and that can only actually be captured if we return to the idea of value itself.

So by this point, it looks like we have almost arrived at a kind of utilitarian AI via the backdoor. I mean, of course utility, as a subjective mental state, isn't necessarily the same as someone's interest or their capacity to lead a flourishing life. But it looks like we have an AI that's geared around optimizing some notion of human wellbeing. And the question is what might be missing there or what might go wrong. And I think there are some things that that view of value alignment still struggles to factor in. The welfare of nonhuman animals is something that's missing from this wellbeing centered perspective on alignment.

Lucas Perry: That's why we might just want to make it wellbeing for sentient creatures.

Iason Gabriel: Exactly, and I believe that this is a valuable enterprise, so we can expand the circle. So we say it's the wellbeing of sentient creatures. And then we have the question about, what about future generations? Does their wellbeing count? And we might think that it does if we follow Toby Ord or in fact, most conventional thinking, we do think that the welfare of future generations has intrinsic value. So we might say, "Well, we want to promote wellbeing of sentient creatures over time with some appropriate weighting to account for time."

And that's actually starting to take us into a richer space of value. So we have wellbeing, but we also have a theory about how to do intertemporal comparisons. We might also think that it matters how wellbeing or welfare is distributed. That it isn't just a maximization question, but that we also have to be interested in equity or distribution because we think it is intrinsically important. So we might think it has to be done in a manner that's fair. Additionally, we might think that things like the natural world have intrinsic value that we want to factor in. And so the point, which will almost be familiar now from our earlier discussion, is you actually have to get to that question of what values do we want to align the system with, because values and the principles that derive from them can capture everything that is seemingly important.

Lucas Perry: Right. And so, for example, within the effective altruism community and within moral philosophy recently, the way in which moral progress has been made is by debiasing human moral thought and ethics from spatial and temporal bias. So Peter Singer has the child drowning in a shallow pond argument. It just illustrates how there are people dying and children dying all over the world in situations in which we could cheaply intervene to save them, as if they were drowning in a shallow pond. And you only need to take a couple of steps and just pull them out, except we don't. And we don't because they're far away. And I would like to say, essentially, everyone finds this compelling, that where you are in space doesn't change how much your suffering matters. That if you are suffering, then all else being equal, we should intervene to alleviate that suffering when it's reasonable to do so.

So space doesn't matter for ethics. Likewise, I hope, and I think that we're moving in the right direction, that time also doesn't matter, while also being mindful that we have to introduce things like uncertainty. We don't know what the future will be like, but this principle about caring about the wellbeing of sentient creatures in general, I think is essential and core to whatever list of principles we'll want for bridging the is-ought distinction, because it takes away spatial bias. Where you are in space doesn't matter, it just matters that you're a sentient being. It doesn't matter when you are as a sentient being. It also doesn't matter what kind of sentient being you are, because the thing we care about is sentience. So then the moral circle has expanded across species. It's expanded across time. It's expanded across space. It includes aliens and all possible minds that we could encounter now or in the future. We have to get that one in, I think, for making a good future with AI.

Iason Gabriel: That's a picture that I strongly identify with on a personal level, this idea of the expanding moral circle of sensibilities. And I think from a substantive point of view, you're probably right. That that is a lot of the content that we would want to put into an aligned AI system. I think that one interesting thing to note is that a lot of these views are actually empirically fairly controversial. So if we look at the interesting study, the moral machine experiment, where I believe several million people ultimately played this experiment online, where they decided which trade offs an AV, an autonomous vehicle, should make in different situations. So whether it should crash into one person or five people, a rich person or a poor person, pretty much everyone agreed that it should kill fewer people when that was on the table. But I believe that in many parts of the world, there was also belief that the lives of affluent people mattered more than the lives of those in poverty.

And so if you were just to reason from their first-order moral beliefs, you would bake that bias into an AI system, and that seems deeply problematic. And I think it actually puts pressure on this question, which is: we've already said we don't want to just align AI with existing moral preferences. We've also said that we can't just declare a moral theory to be true and impose it on other people. So are there other options which move us in the direction of these kinds of moral beliefs that seem to be deeply justified, but also avoid the challenge of value imposition? And how far do they get if we try to move forward, not just as individuals examining the kind of expanding moral circle, but as a community that's trying to progressively endogenize these ideas and come up with moral principles that we can all live by?

We might not get as far as if we were going at it alone, but I think that there are some solutions that are kind of in that space. And those are the ones I'm interested in exploring. I mean, common sense morality, understood as the conventional morality that most people endorse, I would say is deeply flawed in a number of regards, including with regards to global poverty and things of that nature. And that's really unfortunate given that we probably also don't want to force people to live by more enlightened beliefs, which they don't endorse or can't understand. So I think that the interesting question is how do we meet this demand for a respect for pluralism, and also avoid getting stuck in the morass of common sense morality, which has these prejudicial beliefs that will probably, with the passage of time, come to be regarded quite unfavorably by future generations.

And I think that taking this demand for non domination or democratic support seriously means not just running far into the future or in a way that we believe represents the future, but also doing a lot of other things, trying to have a democratic discourse where we use these reasons to justify certain policies that then other people reflectively endorse, and we move the project forwards in a way that meets both desiderata. And in this paper, I try to map out different solutions that both meet this criterion of respecting people's pluralistic beliefs while also moving us towards more genuinely morally aligned outcomes.

Lucas Perry: So now the last question that I want to ask you here then on the goal of AI alignment is do you view a needs based conception of human wellbeing as a sub-category of interest based value alignment? People have come up with different conceptions of human needs. People are generally familiar with Maslow's hierarchy of needs. And I mean, as you go up the hierarchy, it will become more and more contentious, but everyone needs food and shelter and safety, and then you need community and meaning and spirituality and things of that nature. So how do you view or fit in a needs based conception, given that some needs are obviously undeniable relative to others?

Iason Gabriel: Broadly speaking, a needs based conception of wellbeing is in that space we already touched upon. So the capabilities based approach and the needs based approach are quite similar. But I think that what you're saying about needs potentially points to a solution to this kind of dilemma that we've been talking about. If we're going to ask this question of what does it mean to create principles for AI alignment that treat people fairly, despite their different views, one approach we might take is to look for commonalities that also seem to have moral robustness or substance to them. So within the parlance of political philosophy, we'd call this an overlapping consensus approach to the problem of political and moral decision making. I think that that's a project that's well worth countenancing. So we might say there's a plurality of global beliefs and cultures. What is it that these cultures coalesce around? And I think that it's likely to be something along the lines of the argument that you just put forward: that people are vulnerable in virtue of how we're constituted, that we have a kind of fragility and that we need protection, both against the environment and against certain forms of harm, particularly state-based violence. And that this is a kind of moral bedrock or what the philosopher Henry Shue calls "a moral minimum" that receives intercultural endorsement. So actually the idea of human needs is very, very closely tied to the idea of human rights. So the idea is that the need is fundamental, and in virtue of your moral standing, the normative claim, and your need, the empirical claim, you have a right to enjoy a certain good and to be secure in the knowledge that you'll enjoy that thing.

So I think the idea of building a kind of human rights based AI that's based upon this intercultural consensus is pretty promising. In some regards human rights, as they've been historically thought about, are not super easy to turn into a theory of AI alignment, because they are historically thought of as guarantees that states have to give their citizens in order to be legitimate. And it isn't entirely clear what it means to have a human rights based technology, but I think that this is a really productive area to work in, and I would definitely like to try and populate that ground.

You might also think that the consensus or the emerging consensus around values that need to be built into AI systems, such as fairness and explainability, potentially portends the emergence of this kind of intercultural consensus. Although I guess at that point, we have to be really mindful of the voices that are at the table and who's had an opportunity to speak. So although there does appear to be some convergence around principles of beneficence and things like that, it's also true that this isn't a global conversation in which everyone is represented, and it would be easy to prematurely rush to the conclusion that we know what values to pursue, when we're really just reiterating some kind of very heavily Western centric, affluent view of ethics that doesn't have real intercultural democratic viability.

Lucas Perry: All right, now it's also interesting and important to consider here the differences and importance of single agent and multi-agent alignment scenarios. For example, you can imagine entertaining the question of, "How is it that I would build a system that would be able to align with my values? One agent being the AI system, and one person, and how is it that I get the system to do what I want it to do?" And then the multi-agent alignment scenario considers, "How do I get one agent to align and serve to many different people's interests and wellbeing and desires, and preferences, and needs? And then also, how do we get systems to act and behave when there are many other systems trying to serve and align to many other different people's needs? And how is it that all of these systems may or may not collaborate with all of the other AI systems, and may or may not collaborate with all of the other human beings, when all the human beings may have conflicting preferences and needs?" How is it that we do for example, intertheoretic comparisons of value and needs? So what's the difference, and importance between single agent and multi-agent alignment scenarios?

Iason Gabriel: I think that the difference is best understood in terms of how expansive the goal of alignment has to be. So if we're just thinking about a single person and a single agent, it's okay to approach the value alignment challenge through a slightly solipsistic lens. In fact, you know, if it was just one person and one agent, it's not clear that morality really enters the picture, unless there are other people or other sentient creatures whom our actions can affect. So with one person, one agent, the challenge is primarily correlation with the person's desires, aims, intentions. Potentially, there's still a question of whether the AI serves their interest rather than, you know, these more volitional states that come to mind. When we think about situations in which many people are affected, then it becomes kind of remiss not to think about interpersonal comparisons, and the kind of richer conceptions that we've been talking about.

Now, I mentioned earlier that there is a view that there will always be a human body that synthesizes preferences and provides moral instructions for AI. We can imagine democratic approaches to value alignment, where human beings assemble, maybe in national parliaments, maybe in global fora, and legislate principles that AI is then designed in accordance with. I think that's actually a very promising approach. You know, you would want it to be informed by moral reflection and people offering different kinds of moral reasons that support one approach rather than the other, but that seems to be important for multi-person situations and is probably actually a necessary condition for powerful forms of AI. Because, when AI has a profound effect on people's lives, these questions of legitimacy also start to emerge. So not only is it doing the right thing, but is it doing the sort of thing that people would consent to, and is it doing the sort of thing that people actually have consented to? And I think that when AI is used in certain fora, then these questions of legitimacy come to the top. There's a bundle of different things in that space.

Lucas Perry: Yeah. I mean, it seems like a really, really hard problem. When you talk about creating some kind of national body, and I think you said international fora, do you wonder that some of these vehicles might be overly idealistic given what may happen in the world where there's national actors competing and capitalism driving things forward relentlessly, and this problem of multi-agent alignment seems very important and difficult, and that there are forces pushing things such that it's less likely that it happens.

Iason Gabriel: When you talk about multi-agent alignment, are you talking about the alignment of an ecosystem that contains multiple AI agents, or are you talking about how we align an AI agent with the interests and ideas of multiple parties? So many humans, for example?

Lucas Perry: I'm interested and curious about both.

Iason Gabriel: I think there's different considerations that arise for both sets of questions, but there are also some things that we can speak to that pertain to both of them.

Lucas Perry: Do they both count as multi-agent alignment scenarios in your understanding of the definition?

Iason Gabriel: From a technical point of view, it makes perfect sense to describe them both in that way. I guess when I've been thinking about it, curiously, I've been thinking of multi-agent alignment as an agent that has multiple parties that it wants to satisfy. But when we look at machine learning research, "multi-agent" usually means many AI agents running around in a single environment. So I don't see any kind of language-based reason to opt for one, rather than the other. With regards to this question of idealization and real world practice, I think it's an extremely interesting area. And the thing I would say is this is almost one of those occasions where potentially the is-ought distinction comes to our rescue. So the question is, "Does the fact that the real world is a difficult place, affected by divergent interests, mean that we should level down our ideals and conceptions about what really good and valuable AI would look like?"

And there are some people who have what we term "practice dependent" views of ethics who say, "Absolutely we should do. We should adjust our conception of what the ideal is." But as you'll probably be able to tell by now, I hold a kind of different perspective in general. I don't think it is problematic to have big ideals and rich visions of how value can be unlocked, and that partly ties into the reasons that we spoke about for thinking that the technical and the normative are interconnected. So if we preemptively level down, we'll probably design systems that are less good than they could be. And when we think about a design process spanning decades, we really want that kind of ultimate goal, the shining star of alignment, to be something that's quite bright and can steer our efforts towards it. If anything, I would be slightly worried that because these human parliaments and international institutions are so driven by real world politics, that they might not give us the kind of most fully actualized set of ideal aspirations to aim for.

And that's why philosophers like, of course, John Rawls actually propose that we need to think about these questions from a hypothetical point of view. So we need to ask, "What would we choose if we weren't living in a world where we knew how to leverage our own interests?" And that's how we identify the real ideal that is acceptable to people, regardless of where they're located, and that can then be used to steer non-ideal theory, or the kind of actual practice, in the right direction.

Lucas Perry: So if we have an organization that is trying its best to create aligned and beneficial AGI systems, reasoning about what principles we should embed in it from behind Rawls' Veil of Ignorance, you're saying, would have hopefully the same practical implications as if we had a functioning international body for coming up with those principles in the first place.

Iason Gabriel: Possibly. I mean, I'd like to think that ideal deliberation would lead them in the direction of impartial principles for AI. It's not clear whether that is the case. I mean, it seems that at its very best, international politics has led us in the direction of a kind of human rights doctrine that both accords individuals protection, regardless of where they live, and defends the strong claim that they have a right to subsistence and other forms of flourishing. If we use the Veil of Ignorance experiment, I think for AI it might even give us more than that, even if a real world parliament never got there. For those of you who are not familiar with this, the philosopher John Rawls says that when it comes to choosing principles for a just society, what we need to do is create a situation in which people don't know where they are in that society, or what their particular interest is.

So they have to imagine that they're from behind the Veil of Ignorance. They select principles for that society that they think will be fair regardless of where they end up, and then having done that process and identified principles of justice for the society, he actually holds out the aspiration that people will reflectively endorse them even once the veil has been removed. So they'll say, "Yes, in that situation, I was reasoning in a fair way that was nonprejudicial. And these are principles that I identified there that continue to have value in the real world." And we can say what would happen if people are asked to choose principles for artificial intelligence from behind a veil of ignorance where they didn't know whether they were going to be rich or poor, Christian, utilitarian, Kantian, or something else.

And I think there, some of the kind of common sense material would be surfaced; so people would obviously want to build safe AI systems. I imagine that this idea of preserving human autonomy and control would also register, but for some forms of AI, I think distributive considerations would come into play. So they might start to think about how the benefits and burdens of these technologies are distributed and how those questions play out on a global basis. They might say that ultimately, a value aligned AI is one that has fair distributive impacts on a global basis, and, if you follow Rawls, that it works to the advantage of the least well off people.

That's a very substantive conception of value alignment, which may or may not be the final outcome of ideal international deliberation. Maybe the international community will get to global justice eventually, or maybe it's just too thoroughly affected by nationalist interests and other kinds of what are, to my mind, distortionary effects that mean that it doesn't quite get there. But I think that this is definitely the space that we want the debate to be taking place in. And actually, there has been real progress in identifying collectively endorsed principles for AI, which gives me hope for the future. Not only that we'll get good ideals, but that people might agree to them, and that they might get democratic endorsement, and that they might be actionable and the sort of thing that can guide real world AI design.

Lucas Perry: Can you add a little bit more clarity on the philosophical questions and issues which single and multi-agent alignment scenarios supervene on? How do you do intertheoretic comparisons of value if people disagree on normative or meta-ethical beliefs, or people disagree on foundational axiomatic principles for bridging the is-ought gap? How is it that systems deal with that kind of disagreement?

Iason Gabriel: I'm hopeful that the three pictures that I outlined so far, of the overlapping consensus between different moral beliefs, of democratic debate over a constitution for AI, and of selection of principles from behind the Veil of Ignorance, are all approaches that carry some traction in that regard. So they try to take seriously the fact of real world pluralism, but they also, through different processes, tend to tap towards principles that are compatible with a variety of different perspectives. Although I would say, I do feel like there's a question about this multi-agent thing that may still not be completely clear in my mind, and it may come back to those earlier questions about definition. So in a one person, one agent scenario, you don't have this question of what to do with pluralism, and you can probably go for a simpler one-shot solution, which is to align it with the person's interest, beliefs, moral beliefs, intentions, or something like that. But if you're interested in this question of real world politics for real world AI systems where a plurality of people are affected, we definitely need these other kinds of principles that have a much richer set of properties and endorsements.

Lucas Perry: All right, there's Rawls' Veil of Ignorance. There's the principle of non-domination, and then there's the democratic process?

Iason Gabriel: Non-domination is a criterion that any scheme for multi-agent value alignment needs to meet. And then we can ask the question, "What sort of scheme would meet this requirement of non-domination?" And there we have the overlapping consensus with human rights, we have a scheme of democratic debate leading to principles for an AI constitution, and we have the Veil of Ignorance, as all ideas that we basically find within political theory that could help us meet that condition.

Lucas Perry: All right, so we have spoken at some length then about principles and identifying principles. This goes back to our conversation about the is-ought distinction, and these are principles that we need to identify for setting up an ethical alignment procedure. You mentioned this earlier, when we were talking about this distinction between the one true moral theory approach to AI alignment, in contrast to coming up with a procedure for AI alignment that would be broadly endorsed by many people, and would respect the principle of non-domination, and would take into account pluralism. Can you unpack this distinction more, and the importance of it?

Iason Gabriel: Yeah, absolutely. So I think that the kind of one true moral theory approach, although it is a kind of stylized idea of what an approach to value alignment might look like, is the sort of thing that could be undertaken just by a single person who is designing the technology or a small group of people, perhaps moral philosophers who think that they have really great expertise in this area. And then they identify the chosen principle and run with it.

The big claim is that that isn't really a satisfactory way to think about design and values in a pluralistic world where many people will be affected. And of course, many people who've gone off on that kind of enterprise have made serious mistakes that were very costly for humanity and for people who are affected by their actions. So the political approach to value alignment paints a fundamentally different perspective and says it isn't really about one person, or one group, running ahead and thinking that they've done all the hard work; it's about working out what we can all agree upon that looks like a reasonable set of moral principles or coordinates to build powerful technologies around. And then, once we have this process in place that outputs the right kind of agreement, then the task is given back to technologists: these are the kind of parameters that our fair process of deliberation has outputted, and this is what we have the authority to encode in machines, whether it's, say, human rights or a conception of justice, or some other widely agreed upon values.

Lucas Perry: There are principles that you're really interested in satisfying, like respecting pluralism, and respecting a principle of non-domination, and the One True Moral Theory approach risks violating those other principles. Are you not taking a stance on whether there is a One True Moral Theory, you're just willing to set that question aside and say, "Because it's so essential to a thriving civilization that we don't do moral imposition on one another, coming up with a broadly endorsed theory is just absolutely the way to go, whether or not there is such a thing as a One True Moral Theory"? Does that capture your view?