Members of the U.S. House of Representatives call for legal liability on deepfakes

The spread of AI-generated videos and images ahead of the U.S. election has reemphasized the urgent threat deepfakes present to our society, and the recent bipartisan push to amend Section 230 reveals the growing desire for legal accountability across the supply chain for deepfake sexual abuse material.

Echoing this, members of the U.S. House of Representatives recently made a bipartisan call for regulations that place legal liability on the developers of the AI systems used to produce deepfakes. In the House, Rep. LaTurner (R-KS) shared,

“Anyone with a laptop can create realistic images, videos, and audio clips depicting events that did not occur. This poses a threat to our security and could harm our election systems, and developers should be held liable for the harms they cause.”

This was further emphasized by Rep. Morelle (D-NY):

“Deepfakes are abhorrent, and the developers of these systems should be held liable.”

The public wants regulation on deepfakes

Polling shows that such measures are supported by the general public:

- 84% of respondents say companies that create AI models used to generate fake political content should be held liable, compared to 4% who say they should not be held liable.

- 70% support and just 13% oppose legislation that would make artificial intelligence companies liable if their models were used to create deepfake political content. That includes 71% of Republicans, 74% of Democrats, and 63% of independents.

- 75% support holding the developers of AI models liable when AI-generated content is used for scams and deceptive political tactics. 66% support holding ElevenLabs liable for the deceptive use of its model in the New Hampshire primary, compared to just 14% who oppose holding the company liable.

Response from the Campaign to Ban Deepfakes

The Campaign to Ban Deepfakes is leading this effort, and counts among its members the National Organization for Women (NOW), SAG-AFTRA, Plan International, Future of Life Institute (FLI), and many more. On July 30, NOW hosted a virtual media roundtable on the topic (recording; password: H223.6&&).

One of its supporters, the actor, author and activist Ashley Judd, made the following statement:

“Deepfakes, and the AI tools that are used to create them, are causing incredible harm 24 hours a day – and with alarming speed they’re becoming faster, cheaper, and easier to produce. With nonconsensual pornography making up 95% of deepfakes, and 99% of those sexually explicit deepfakes violating the likenesses of girls and women, the deepfakes problem reflects the ease with which society sexualizes girls and women, discarding our humanity and impedes the goal of sex and gender equality.

“The only effective way to tackle this rampant abuse is to deliver meaningful liability for the AI companies that develop and deploy these technologies – in addition to deepfake creators and distributors. We need to pass laws that guarantee accountability now. It is encouraging to see U.S. lawmakers from across the political spectrum and stakeholders from different backgrounds come together for discussions about the welfare of girls and women. As an activist working towards women’s equality, an actor, and a victim of nonconsensual deepfakes myself, I’m all in to support the Ban Deepfakes campaign and its efforts to disrupt this enormously damaging supply chain.”

Max Tegmark, President of FLI, one of the founding members of the campaign, released the following statement:

“This bipartisan discussion on deepfakes is an important step in halting their growing threat to society. I was pleased to see that several members of the House agree that any legislation addressing deepfakes must criminalize and create liability at each stage of production and dissemination. The only way to combat this issue is for governments to hold the whole supply chain responsible. This includes requiring AI developers and deployers to show they have taken steps to prevent deepfakes in their systems, and allowing people harmed by deepfakes to sue those responsible for damages.

“There is broad bipartisan support to regulate deepfakes in the U.S. and we need to pass legislation urgently. Deepfakes that spread non-consensual sexual imagery, commit fraud, and circulate misinformation continue to proliferate. This issue is made worse as AI capabilities grow and make the creation and distribution of deepfakes increasingly fast, cheap, and easy. I’m encouraged by these members’ floor speeches and look forward to the House passing legislation that will stem this rapidly accelerating threat to our society.”

Please get in touch if you have any questions or would like to speak with Max Tegmark, National Organization for Women President Christian Nunes, Encode Justice President Sneha Revanur, or the offices of the Reps.

Ban Deepfakes is a diverse coalition of organizations and individuals committed to disrupting the deepfake supply chain at every stage. Supporters include preeminent figures like author Steven Pinker and actor Ashley Judd, and members include the National Organization for Women, Equality Now, SAG-AFTRA, Plan International, FLI, and many more. They are calling on U.S. lawmakers to deliver meaningful accountability and liability for everyone involved in the production and distribution of deepfakes, including AI system developers and deployers.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.

Related content

Michael Kleinman reacts to breakthrough AI safety legislation

Context and Agenda for the 2025 AI Action Summit

Paris AI Safety Breakfast #4: Rumman Chowdhury

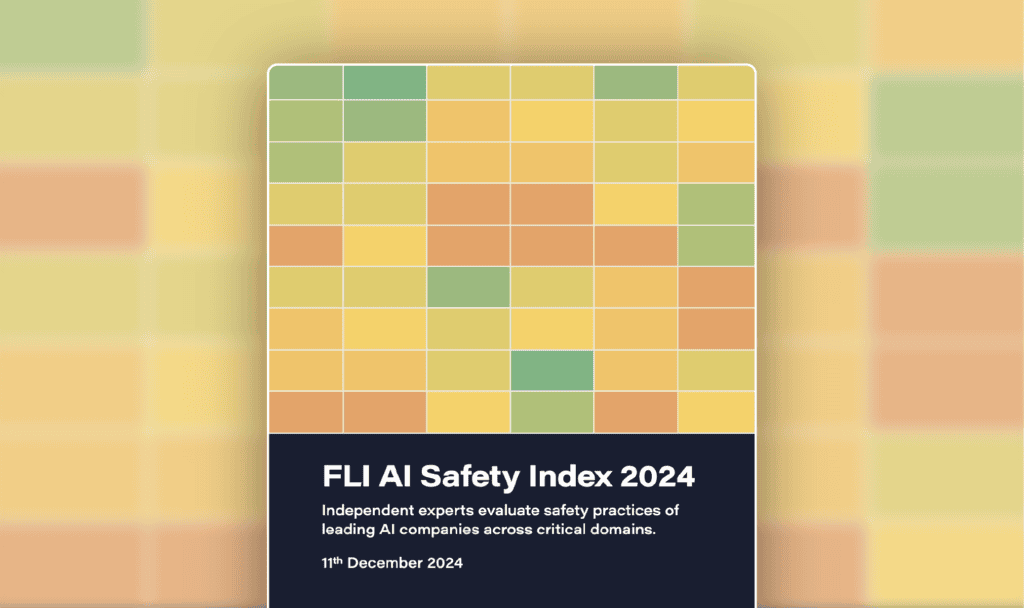

AI Safety Index Released

Some of our Policy & Research projects

Control Inversion