The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI


A new national survey of 2,000 U.S. adults (29 Sept – 5 Oct 2025) uncovers where Americans stand on AI and the prospect of expert‑level and superhuman systems.

The findings show that a majority of those surveyed are concerned about the way that AI is being developed today, and that they do not support the development of human-level AI systems until they can be proven safe and controllable.

⭐ Highlights

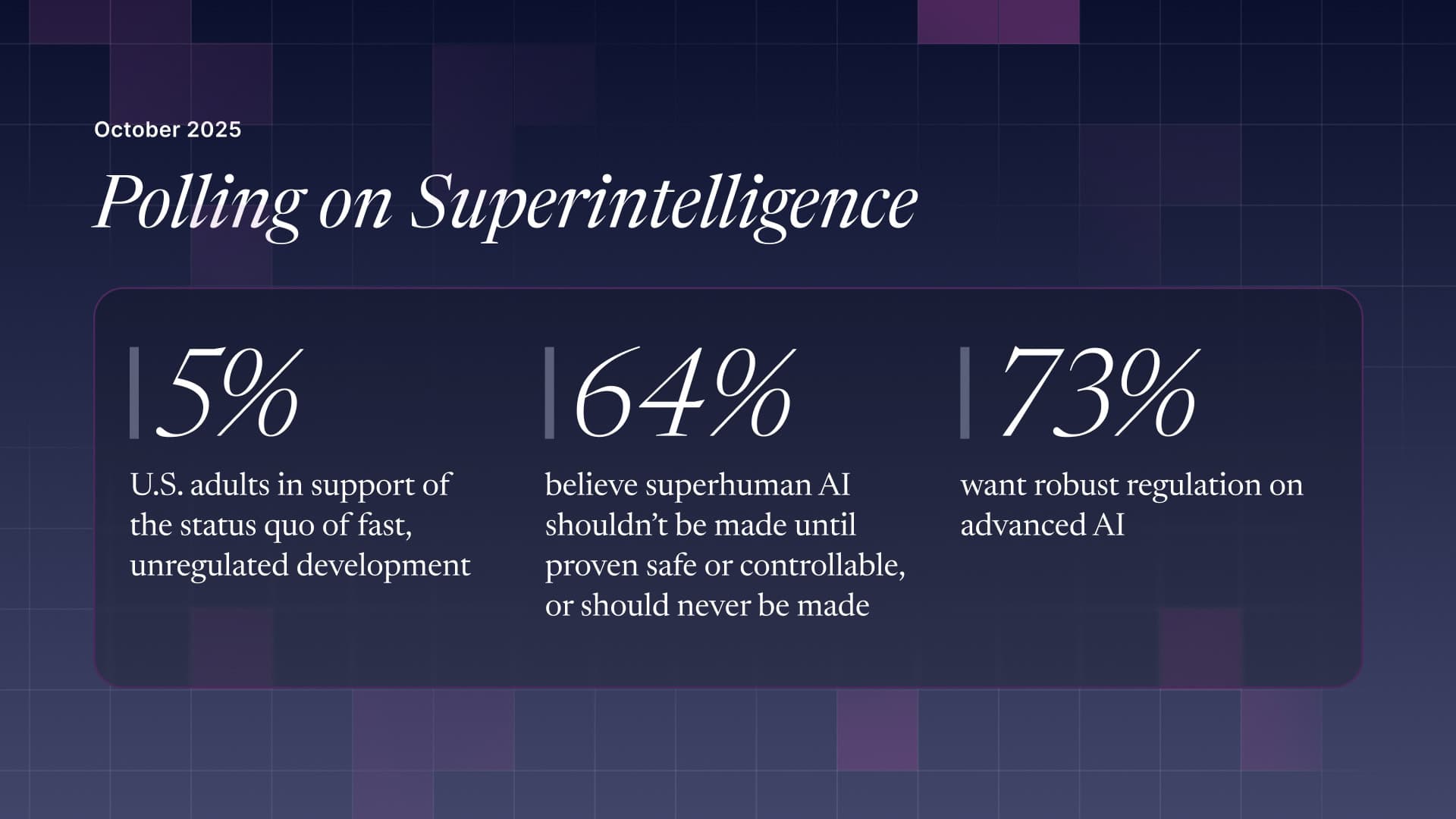

- There is widespread discontent with the current trajectory of advanced AI development: only 5% support the status quo of fast, unregulated development;

- Almost two-thirds (64%) feel that superhuman AI should not be developed until it is proven safe and controllable, or should never be developed;

- There is overwhelming support (73%) for robust regulation of AI. Only 12% oppose strong regulation.

Participants were given a brief description of key terms, including ‘expert-level AI’ and ‘superhuman AI’, and were asked about both the benefits and the potential risks of these technologies. Their understanding of and engagement with these topics were measured so that results could be compared across cohorts.

Below we summarize the key findings of this report. Click here to view the full report.

Overall: American adults are not ‘bought in’ to the current trajectory

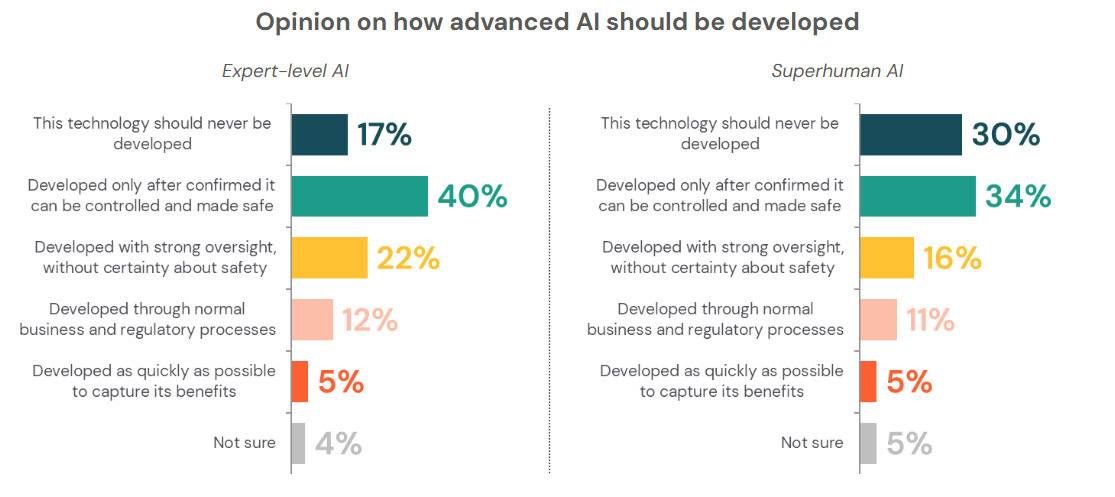

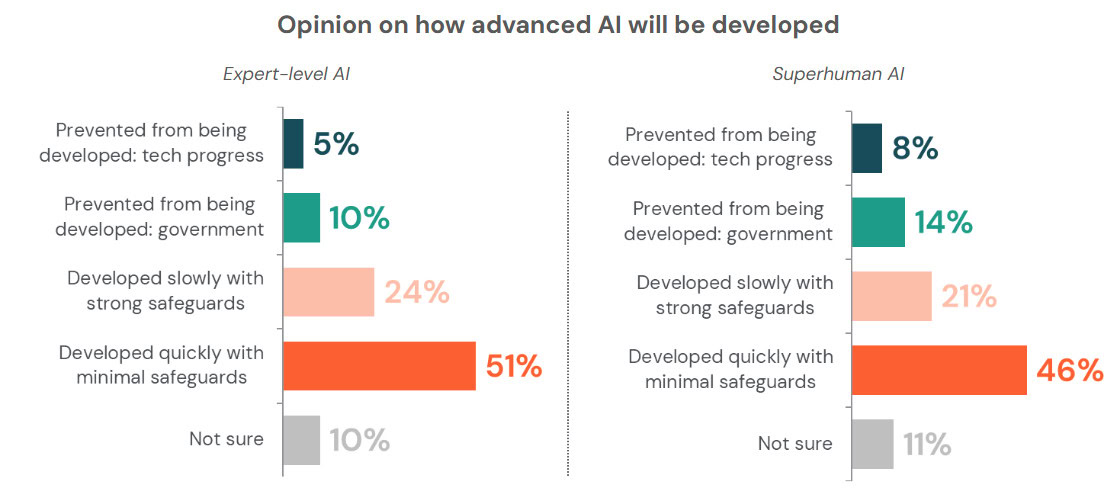

The poll reveals a strong consensus: 64% say that superhuman AI should either not be developed until there is scientific agreement that it is safe and controllable, or should never be developed at all.

A majority of adults (57%) are not bought in to the development of ‘expert-level AI’ under current conditions; they feel that it should never be developed (17%), or at least halted until proven controllable and safe (40%).

Only 5% feel that either technology should be developed “as quickly as possible.” This sits in stark contrast to the operating paradigm of the leading AI corporations today, which openly declare they are “racing” to human-level systems and beyond.

Despite this, around half of respondents expect that advanced AI will be developed “quickly with minimal safeguards”.

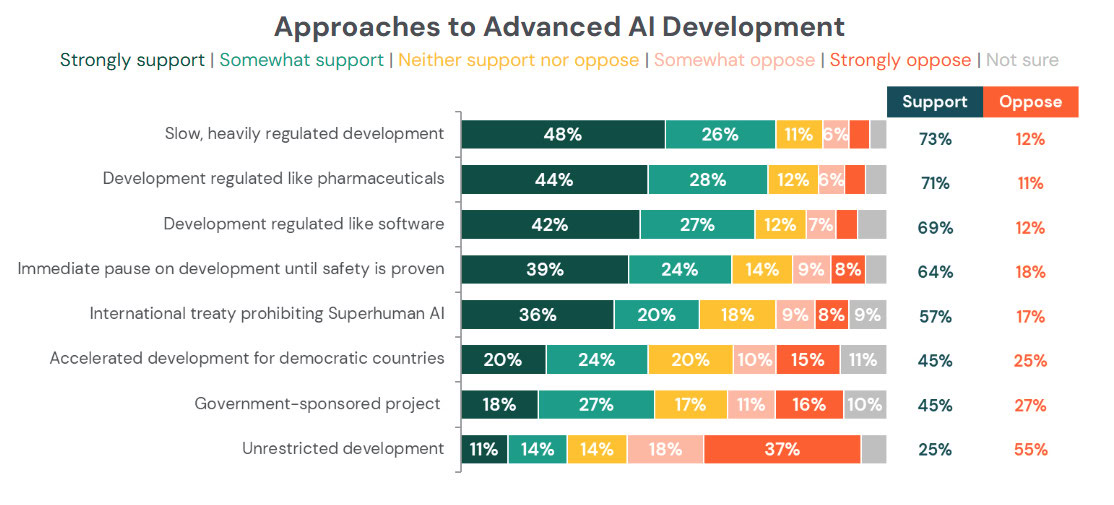

Approaches to AI development: Heavy regulation favored by most

There is overwhelming support for “slow, heavily regulated” development of AI (73%), with about the same proportion wishing for regulation “like pharmaceuticals with extensive testing before deployment”.

Notably, two-thirds (64%) of adults would like to see an “immediate pause” on the development of advanced AI until the technology is proven safe.

A related poll on Americans’ attitudes toward AI found that 69% think the government is not doing enough to regulate the use of AI (Quinnipiac University, April 2025).

Respondents cite “replacement” and “extinction” as top reasons to not develop advanced AI

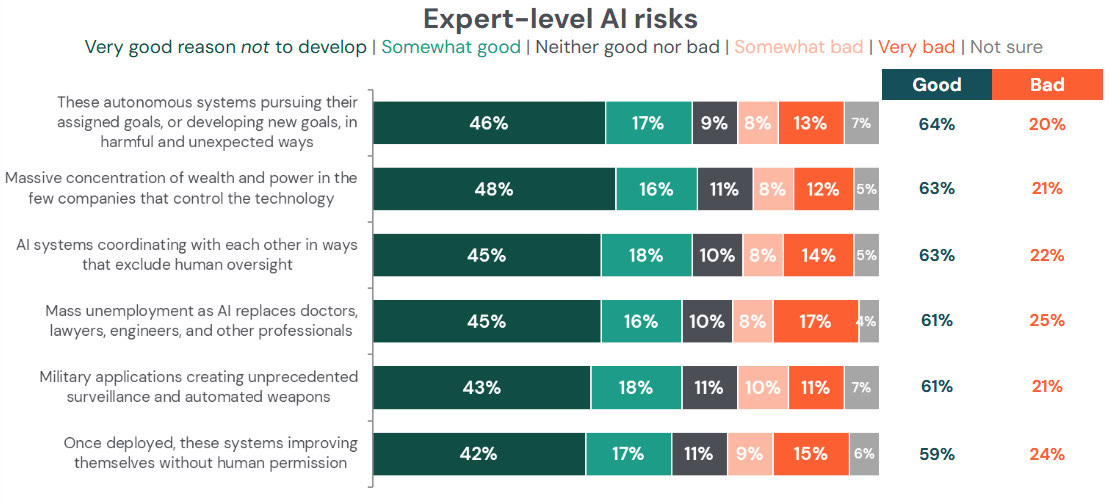

When asked to rate reasons not to develop advanced AI, respondents most strongly endorsed those concerning human extinction or enfeeblement, and the concentration of power.

Another poll on Americans’ attitudes (YouGov, March 2025) had similar findings:

- Three-quarters (74%) of adults are concerned about the possibility of AI resulting in the concentration of power in technology companies;

- More than one-third (37%) of adults are concerned that AI could cause the end of the human race on Earth.

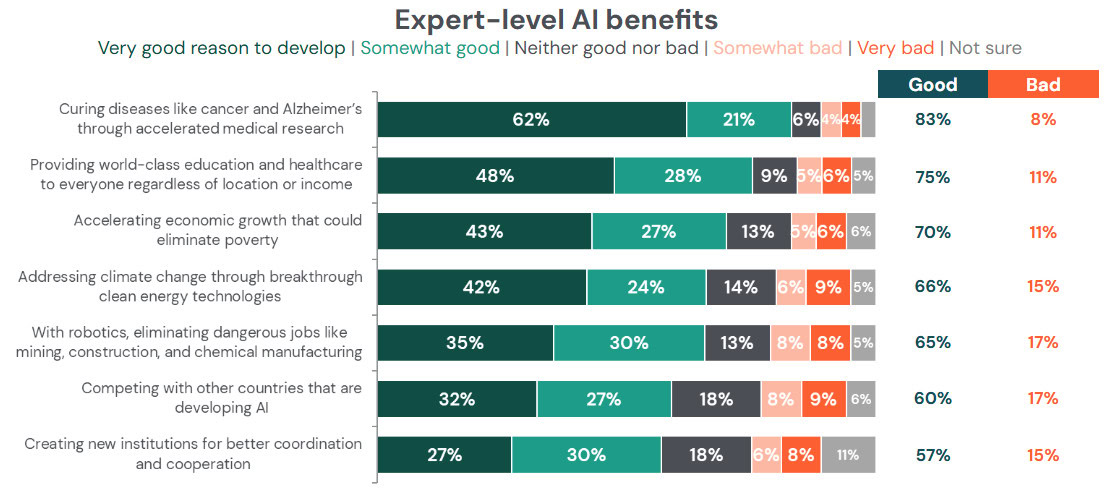

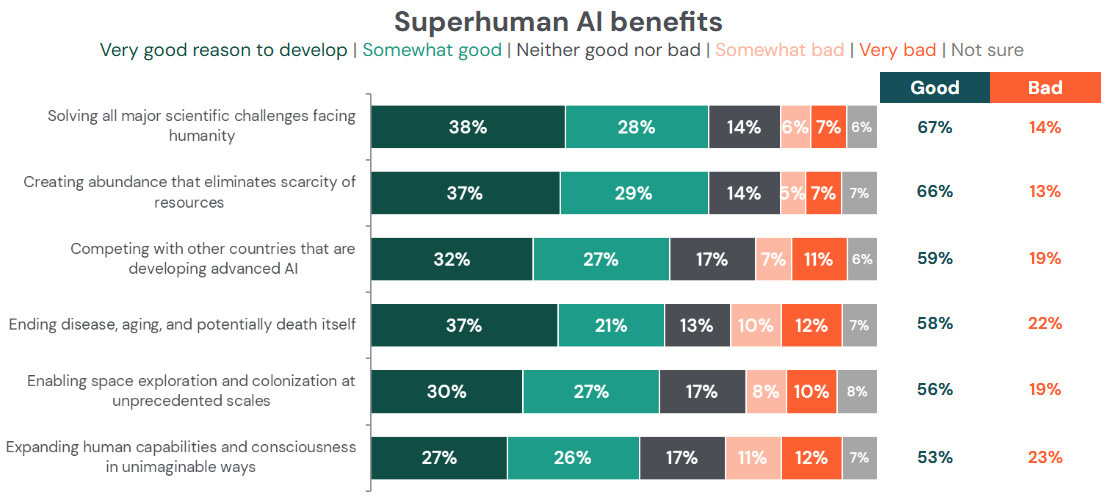

Among reasons to develop advanced AI, the most supported involved solving major social and scientific challenges, and creating abundance.

Respondents with greater knowledge of AI tend to appreciate both the upsides and the downsides, and to demand tighter safeguards.

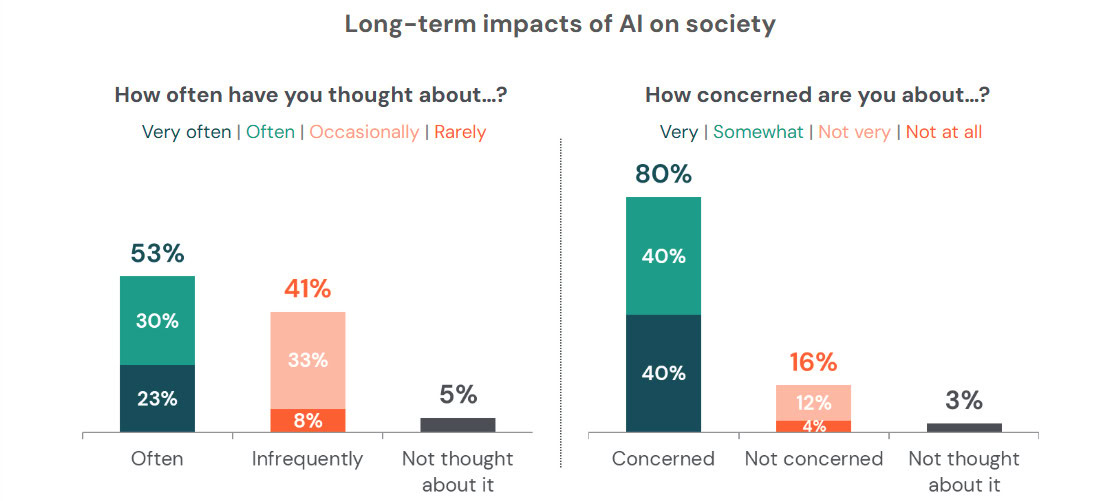

Overall, U.S. adults feel overwhelmingly concerned about the long-term impacts of AI on society.

Two other polls of U.S. adults demonstrate an overall pessimism about the impacts of AI on society:

- Almost half (44%) think AI will do more harm than good, while only 38% think the opposite (Quinnipiac University, April 2025);

- 40% think AI will have a negative impact on society, and only 29% expect it to have a positive impact (YouGov, March 2025).

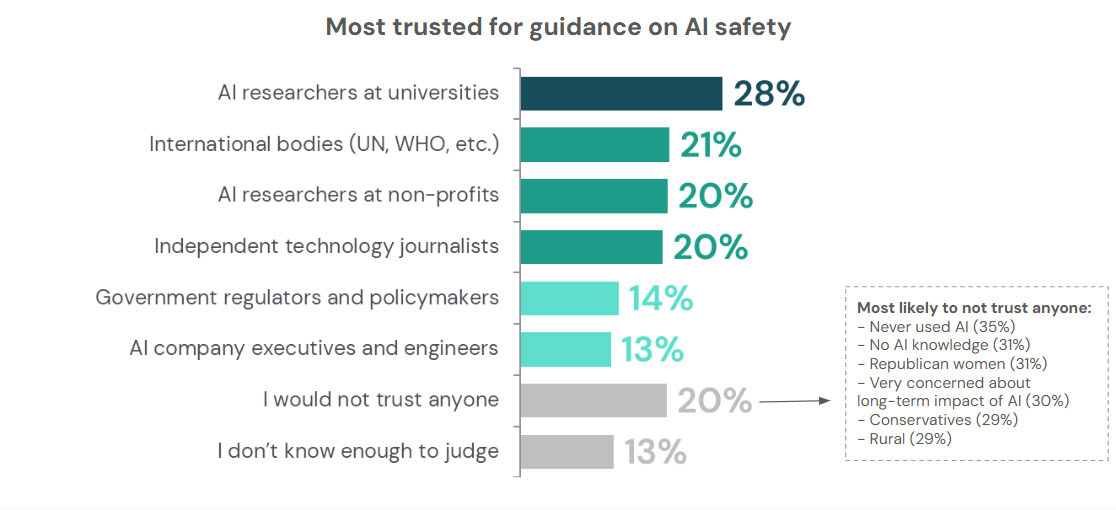

The public looks to scientific organizations on AI

When asked who they believed should determine the trajectory of advanced AI, “International scientific organizations” ranked at the top, with “National government agencies” a very close second.

Combined, international and independent scientific organizations are favored by around half of U.S. adults.

More than two-thirds (69%) said the sources of information they would trust most for guidance on AI safety include “AI researchers” (at both universities and non-profits) and “International bodies”.

The companies developing AI technologies, and their leadership, score significantly lower on public trust. A related study (Pew Research, April 2025) found that a majority of adults (59%) have little or no confidence in U.S. companies to develop and use AI responsibly.

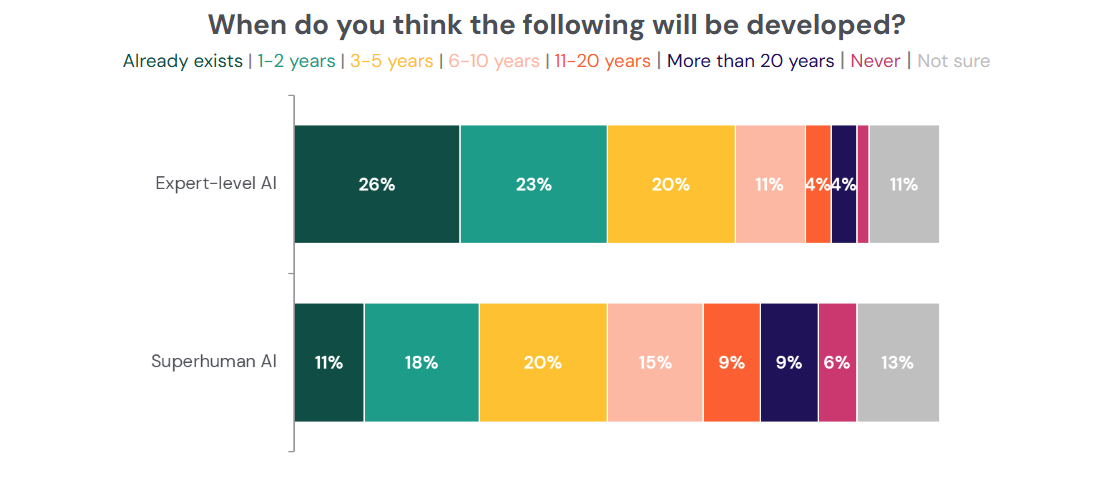

The public expects transformative AI to be here soon

Nearly half of those surveyed expect expert-level AI to arrive very soon: 49% think it will be developed within 2 years.

Superhuman AI is seen as slightly farther off, but still quite soon; the same proportion (49%) expect it to be developed within 5 years.

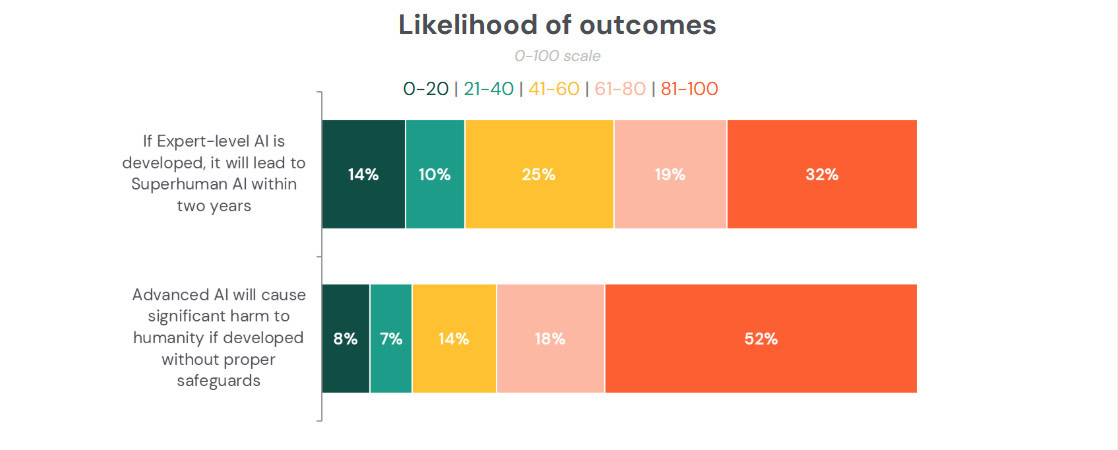

Two-thirds of adults estimate that advanced AI developed without proper safeguards is likely (a 61% or greater chance) to cause “significant harm to humanity”.

Conclusion

To our knowledge, this is the first large-scale public survey that specifically addresses U.S. public attitudes toward the development of advanced AI systems of the type often referred to as “AGI” and “superintelligence.” We encourage more such studies by independent researchers.

In the results above, we see a clear disconnect between the stated mission of leading AI companies and the wishes of the American public.

OpenAI, Anthropic, xAI, Google, Meta, and others have openly declared they are “racing” to expert-level or superhuman AI, while many of them actively hamper efforts to create any meaningful guardrails.

The survey paints a nuanced picture: Americans recognize AI’s general promise but are wary of unchecked advancement of certain systems that aim to compete with or exceed top human experts. The public’s preference for strong, independent oversight signals a clear mandate for policymakers and industry leaders: advance cautiously and responsibly, educate broadly, and involve trusted scientific institutions in governance.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.
