2019 Statement to the United Nations in Support of a Ban on LAWS

The following statement was read on the floor of the United Nations during the March 2019 CCW meeting, in which delegates discussed a possible ban on lethal autonomous weapons.

Thank you, Chair, for your leadership.

The Future of Life Institute (FLI) is a research and outreach organization that works with scientists to mitigate existential risks facing humanity. FLI is deeply worried about an imprudent application of technology in warfare, especially with regard to emerging technologies in the field of artificial intelligence.

Let me give you an example: In just the last few months, researchers from various universities have shown how easy it is to trick image recognition software. For example, researchers at Auburn University found that if objects like a school bus or a firetruck were simply shifted into unnatural positions, so that they were upended or turned on their sides in an image, the image classifier would not recognize them. And this is just one of many, many examples of image recognition software failing because it does not understand the context within the image.

This is the same technology that would analyze and interpret data picked up by the sensors of an autonomous weapons system. It’s not hard to see how quickly image recognition software could misinterpret situations on the battlefield if it has to quickly assess everyday objects that have been upended or destroyed.

And challenges with image recognition are only one of many reasons why an increasing number of people in AI research and in the tech field – that is, an increasing number of the people who are most familiar with how the technology works, and how it can go wrong – are saying that this technology cannot be used safely or fairly to select and engage a target. In the last few years, over 4500 artificial intelligence and robotics researchers have called for a ban on lethal autonomous weapons, over 100 CEOs of prominent AI companies have called for a ban on lethal autonomous weapons, and over 240 companies and nearly 4000 people have pledged to never develop lethal autonomous weapons.

But as we turn our attention to human-machine teaming, we must also carefully consider research coming from the field of psychology and recognize the limitations there as well. I’m sure everyone in this room has had a beneficial personal experience working with artificial intelligence. But when under extreme pressure, as in life-and-death situations, psychologists find that humans become overly reliant on technology. In one study at Georgia Tech, students were taking a test alone in a room when a fire alarm went off. The students had the choice of leaving through a clearly marked exit that was right by them, or following a robot that was guiding them away from the exit. Almost every student followed the robot, away from the safe exit. In fact, even when the students had been warned in advance that the robot couldn’t be trusted, they still followed it away from the exit.

As the delegate from Costa Rica mentioned yesterday, the New York Times has reported that pilots on the Boeing 737 Max had only 40 seconds to fix the malfunctioning automated software on the plane. These accidents represent tragic examples of how difficult it can be for a human to correct an autonomous system at the last minute if something has gone wrong.

Meaningful human control is something we must strive for, but as our colleagues from ICRAC said yesterday, “If states wanted genuine meaningful human control of weapons systems, they would not be using autonomous weapons systems.”

I want to be clear. Artificial intelligence will be incredibly helpful for militaries, and militaries should move to adopt systems that can be implemented safely in areas such as improving the situational awareness of the military personnel who would be in the loop, logistics, and defense. But we cannot allow algorithms to make the decision to harm a human – they simply cannot be trusted, and we have no reason to believe they will be trustworthy anytime soon. Given the incredible pace at which the technology is advancing, thousands of AI researchers from around the world call with great urgency for a ban on lethal autonomous weapons.

There is a strong sense in the science and technology community that only a binding legal instrument can ensure continued research and development of beneficial civilian applications without the endeavor being tainted by the spectre of lethal algorithms. We thus call on states to take real leadership on this issue! We must move to negotiate a legally binding instrument that will ensure algorithms are not allowed to make the decision – or to unduly influence the decision – to harm or kill a human.

Thank you.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014.

Related content

Other posts about Open Letters

Written Statement of Dr. Max Tegmark to the AI Insight Forum

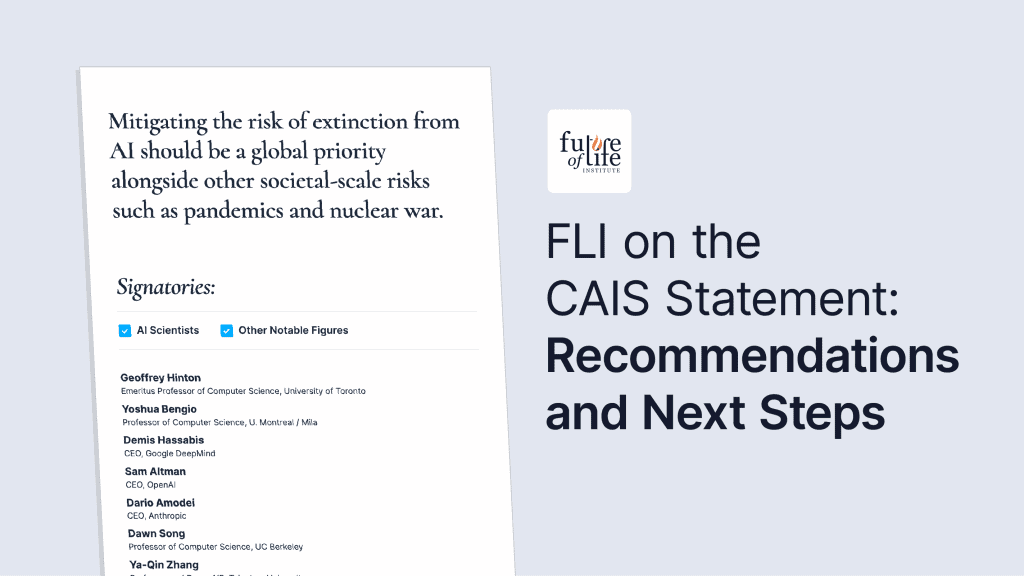

FLI on “A Statement on AI Risk” and Next Steps

Open Letter Against Reckless Nuclear Escalation and Use