Contents

Welcome to the Future of Life Institute newsletter. Every month, we bring 25,000+ subscribers the latest news on how emerging technologies are transforming our world – for better and worse.

If you’ve found this newsletter helpful, why not tell your friends, family, and colleagues to subscribe here?

Today’s newsletter is a 5-minute read. We cover:

- Our new open letter advocating for a pause on training large models

- An interview with professional poker player Liv Boeree

- The GPT-4 system card

- Why the Franck Report and the Szilárd petition are relevant today

- The IPCC’s latest synthesis report

Open Letter: Pause Giant AI Experiments!

📢 We’re calling on AI labs to temporarily pause training powerful models!

Join FLI’s call alongside Yoshua Bengio, Steve Wozniak, Yuval Noah Harari, Elon Musk, Stuart Russell, and over 30,000 other concerned individuals who’ve signed!

🛑 Why do we think this is necessary?

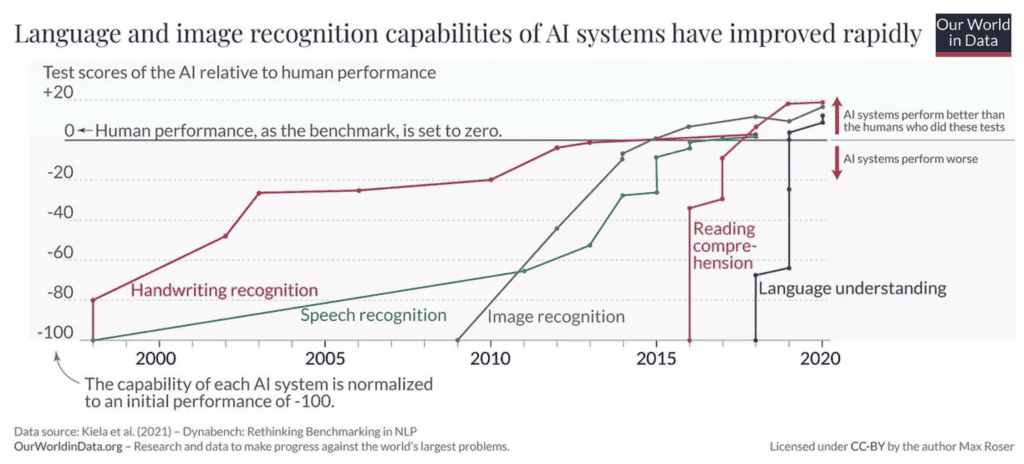

AI systems are scaling rapidly – The largest models are increasingly capable of surpassing human performance across many domains.

We believe in the importance of scientific progress and innovation, but the use of these technologies creates many harms that require more thought, better governance, and stronger democratic institutions.

These include bias and discrimination, the spread of mis- and disinformation, the development of autonomous weapons, IP theft and adverse impacts on labour markets, and the impact on our environment.

❗We are now training and deploying even more powerful AI models that can leverage more data, more sophisticated algorithms, and more compute.

In fact, the stated goal of many commercial AI labs is to build Artificial General Intelligence. Worryingly, they are doing so even as they cut ethics and safety staff.

We cannot know with certainty how close humanity is to achieving this goal, but it is obvious that no single company can forecast what the development of extremely powerful AI systems means for our societies.

💡 What do we want?

We believe that powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable.

We share this view with over 5000 leading scientists who signed the 2017 Asilomar AI Principles – many of whom now work at the largest AI labs.

💬 The key message is this: Our societies need time to adapt.

That’s why we’re calling on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.

This pause will give us time to ideate new governance institutions, better monitor hardware capabilities, create capacity to audit groundbreaking algorithms, design safety standards, and mitigate democratic and economic harms.

🫵🏽 How can you help?

Read our letter and share it with your friends, family, and colleagues. If you agree with us, consider adding your name to the list of signatories! Sign the Open Letter!

Governance and Policy Updates

AI policy:

▶ The First Session of the 2023 CCW Group of Governmental Experts on Lethal Autonomous Weapons Systems concluded on 10 March.

▶ Spain, Portugal, and Andorra have joined the 33 states from Latin America and the Caribbean that called for legally binding rules on autonomous weapons in Costa Rica in February.

▶ The United Kingdom published its AI White Paper.

Biotechnology:

▶ The US White House published new priorities for US biotechnology manufacturing, including reports from the Office of Science and Technology Policy, the Department of Defense, and the Department of Commerce.

Climate change:

▶ The Intergovernmental Panel on Climate Change published its synthesis report – the last in the IPCC’s sixth assessment cycle.

Updates from FLI

▶ Our call to pause the training of AI models more powerful than GPT-4 has been extensively covered in the media, including by outlets such as the Financial Times, Wired, The New York Times and a dozen others.

▶ Politico interviewed FLI’s Director of Policy Mark Brakel about his views on autonomous weapons, AI hype, climate futures, race dynamics, and the EU AI Act. Read the full interview:

▶ Our podcast host Gus Docker interviewed former professional poker player Liv Boeree about GPT-4, the AI arms race, and why this might just be the most important century.

New Research: GPT-4 System Card

Alongside the release of GPT-4, OpenAI published two documents: a technical report on GPT-4’s capabilities, and a system card, which details many of the risks that GPT-4 will present alongside the company’s efforts to mitigate these harms.

Here’s a quick summary of some of the concerns that feature in the system card:

▶ Large models will proliferate: Meta’s language model – LLaMA – was promptly leaked over BitTorrent days after it was released. You can now run it on a computer if you have the technical chops.

▶ Some harms are predictable: If everybody has powerful AI systems in their pockets, somebody is going to do bad things, like flood the internet with synthetic media. OpenAI, Stanford, and the Center for Security and Emerging Technology have already published a report outlining disinformation risks.

▶ Powerful models can be used in unexpected ways: It turns out you can use a commercially available AI model meant to discover new drugs to design toxic molecules instead. Such a model can generate over 40,000 candidate toxic molecules – including VX, one of the deadliest nerve agents known – in roughly six hours.

▶ Safety and ethics will be the victims of an AI race: Given how capable these systems are, it isn’t surprising that both states and other AI labs will accelerate their efforts to develop their own large models. The rush to deploy these systems will likely compel them to ignore important safety considerations.

Why this matters: Model cards, or system cards, are relatively new transparency mechanisms. Their goal is to provide a comprehensive yet accessible overview of how developers have designed an AI system, including details about data, algorithms, performance, and ethics and safety considerations.

OpenAI’s report deviates from the norm by not providing details about GPT-4, but the system card is still worth reading in full given that it extensively covers 11 risks in total and represents an important facet of safely deploying powerful models.

The system card highlights the need to pause giant AI experiments: Despite the comprehensive coverage of potential risks in the system card, no single company can identify and mitigate all of the harms associated with language models or forecast their effect on society.

We need time to engineer these systems for the clear benefit of all, and give society a chance to adapt.

Given the high stakes involved, we’ve outlined some governance responses in our open letter that we think should be put into place before AI labs train even larger models. These include:

▶ New and capable regulatory authorities dedicated to AI;

▶ Oversight and tracking of highly capable AI systems and large pools of computational capability;

▶ Provenance and watermarking systems to help distinguish real from synthetic and to track model leaks;

▶ A robust auditing and certification ecosystem;

▶ Liability for AI-caused harm;

▶ Robust public funding for technical AI safety research;

▶ Well-resourced institutions for coping with the dramatic economic and political disruptions (especially to democracy) that AI will cause.

Let us know what you think by replying to this e-mail!

What We’re Reading

▶ Lobbying against General Purpose AI regulation: The Corporate Europe Observatory (CEO) published a new report documenting how large technology firms are aggressively lobbying European lawmakers to exclude General Purpose AI systems from the upcoming EU AI Act.

Dive deeper: FLI has previously advocated for developers and users of general purpose AI systems to adhere to the compliance requirements of high-risk systems.

▶ Biosafety: A new report by King’s College London finds that the number of labs working on dangerous pathogens continues to grow – now numbering over 100 – without appropriate oversight and accountability systems.

Learn more: Watch our interview with one of the report’s co-authors on catastrophic biological risks:

▶ The Belén Communiqué: Researchers from Human Rights Watch argue that the autonomous weapons conference in Costa Rica (which we covered in last month’s newsletter) was notable for leaving open the possibility of negotiating a treaty outside the UN process in Geneva.

▶ Risk communication: A new comment in Vox says that we need to get better at talking about climate change. Unbridled pessimism or complacent optimism won’t help make our planet better.

Hindsight is 20/20

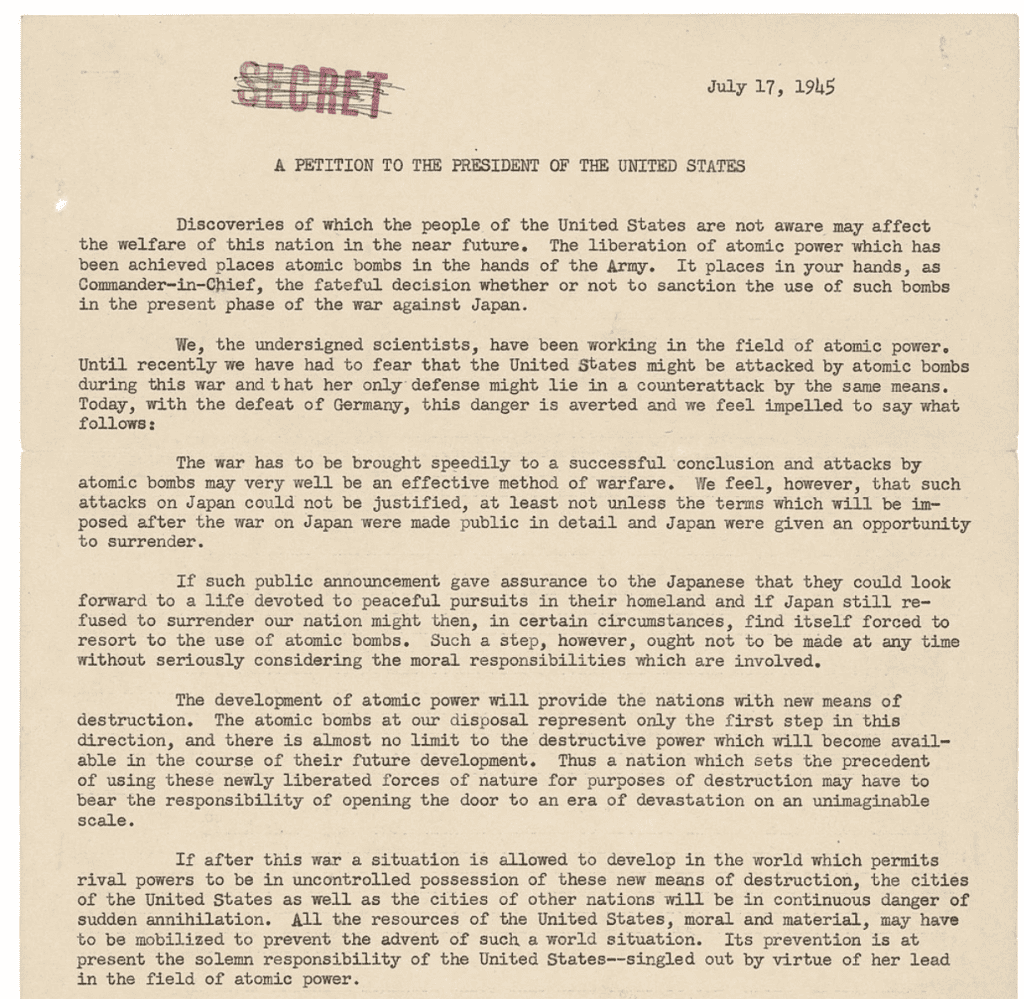

The Franck Report and the Szilárd petition: In June and July 1945, several prominent nuclear physicists who worked on the Manhattan Project recommended and petitioned that the US not use nuclear weapons to force Japan to surrender.

These scientists cautioned that using the nuclear bomb on Japanese cities would lead to an all-out arms race. They offered a range of alternatives – from communicating the US’ new technology privately to detonating the bomb on an uninhabited island.

In August 1945, the US dropped two nuclear bombs on Hiroshima and Nagasaki.

Why this matters: Ask yourself: How likely is it that AI labs – beholden to commercial and geopolitical pressures – will pause research on systems that grow even more powerful? Can you really put the genie back into the bottle?

Chart of the Month

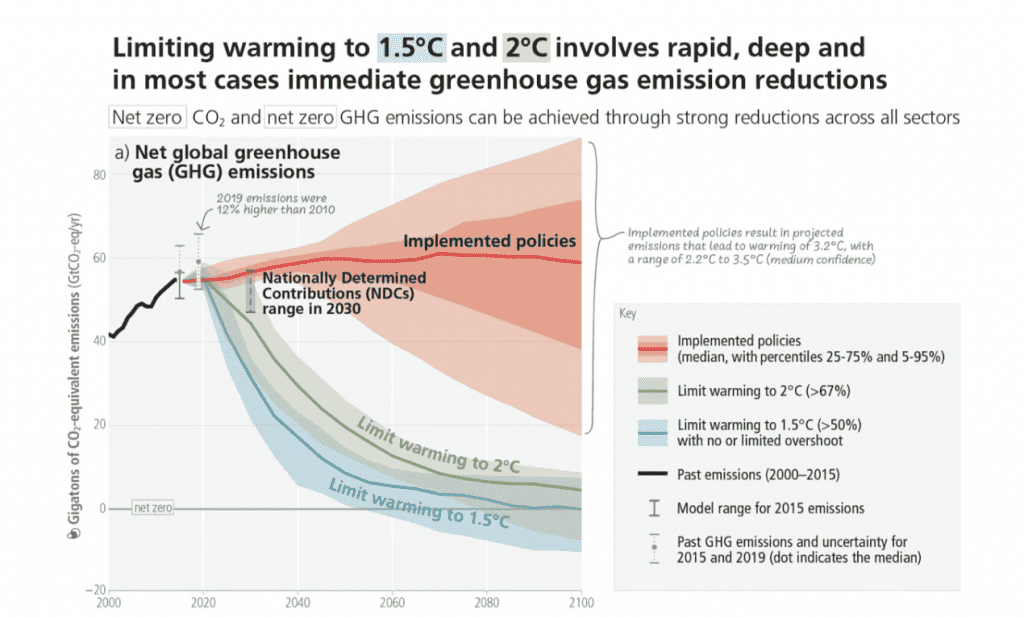

We’re still not doing enough to limit global warming. Here’s a chart from the IPCC’s synthesis report that puts our efforts into perspective.

FLI is a 501(c)(3) non-profit organisation, meaning donations are tax exempt in the United States. If you need our organisation number (EIN) for your tax return, it’s 47-1052538. FLI is registered in the EU Transparency Register. Our ID number is 787064543128-10.