Supervising AI Growth

When Apple released its software application, Siri, in 2011, iPhone users had high expectations for their intelligent personal assistants. Yet despite its impressive and growing capabilities, Siri often makes mistakes. The software’s imperfections highlight the clear limitations of current AI: today’s machine intelligence can’t understand the varied and changing needs and preferences of human life.

However, as artificial intelligence advances, experts believe that intelligent machines will eventually – and probably soon – understand the world better than humans. While it might be easy to understand how or why Siri makes a mistake, figuring out why a superintelligent AI made the decision it did will be much more challenging.

If humans cannot understand and evaluate these machines, how will they control them?

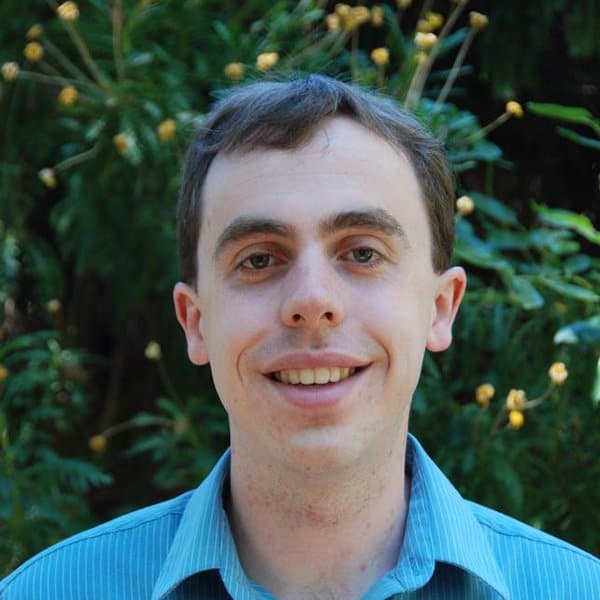

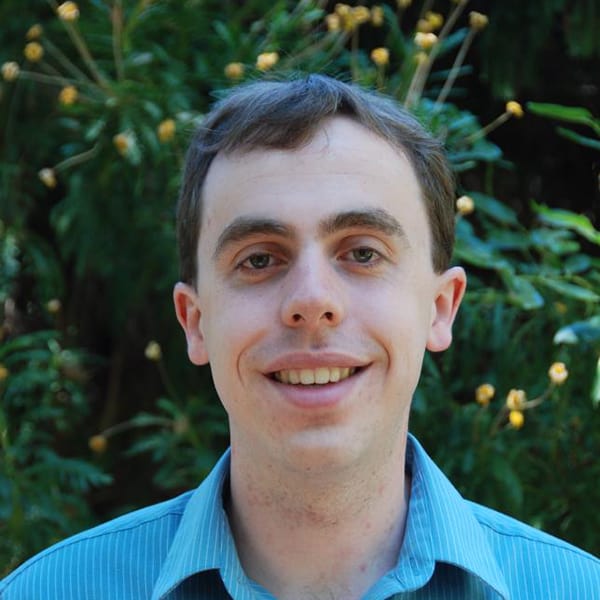

Paul Christiano, a Ph.D. student in computer science at UC Berkeley, has been working on addressing this problem. He believes that to ensure safe and beneficial AI, researchers and operators must learn to measure how well intelligent machines do what humans want, even as these machines surpass human intelligence.

Semi-supervised Learning

The most obvious way to supervise the development of an AI system also happens to be the hard way. As Christiano explains: “One way humans can communicate what they want is by spending a lot of time digging down on some small decision that was made, and try to evaluate how good that decision was.”

But while this is theoretically possible, the human researchers would never have the time or resources to evaluate every decision the AI made. “If you want to make a good evaluation, you could spend several hours analyzing a decision that the machine made in one second,” says Christiano.

For example, suppose an amateur chess player wants to understand a better chess player’s previous move. Merely spending a few minutes evaluating this move won’t be enough, but if she spends a few hours, she could consider every alternative and develop a meaningful understanding of the better player’s move.

Fortunately for researchers, they don’t need to evaluate every decision an AI makes in order to be confident in its behavior. Instead, researchers can choose “the machine’s most interesting and informative decisions, where getting feedback would most reduce our uncertainty,” Christiano explains.

“Say your phone pinged you about a calendar event while you were on a phone call,” he elaborates. “That event is not analogous to anything else it has done before, so it’s not sure whether it is good or bad.” Due to this uncertainty, the phone would send the transcript of its decisions to an evaluator at Google, for example. The evaluator would study the transcript, ask the phone owner how he felt about the ping, and determine whether pinging users during phone calls is a desirable or undesirable action. By providing this feedback, Google teaches the phone when it should interrupt users in the future.
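
The idea of asking for feedback only on the most uncertain decisions can be illustrated with a small Python sketch. This is not Christiano's implementation; the `Decision` class, the `p_good` approval estimate, the scoring rule, and the example decisions are all hypothetical and chosen only to make the selection step concrete.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    description: str
    # Hypothetical: the system's own estimate that the user would approve.
    p_good: float


def uncertainty(decision: Decision) -> float:
    """Score is highest when p_good is near 0.5 and lowest near 0 or 1."""
    return 1.0 - 2.0 * abs(decision.p_good - 0.5)


def select_for_review(decisions: list[Decision], budget: int) -> list[Decision]:
    """Return the `budget` decisions the system is least sure about."""
    return sorted(decisions, key=uncertainty, reverse=True)[:budget]


# Made-up decisions and estimates, for illustration only.
decisions = [
    Decision("silence alarm during meeting", 0.95),
    Decision("ping about calendar event during call", 0.52),
    Decision("auto-reply to unknown number", 0.20),
]

# The human evaluator only has time to review one decision.
queue = select_for_review(decisions, budget=1)
print(queue[0].description)
```

With these made-up numbers, the calendar ping is the decision sent to the evaluator, because the system's estimate for it is closest to a coin flip. The other two decisions are handled without human review.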

This active learning process is an efficient method for humans to train AIs, but what happens when humans need to evaluate AIs that exceed human intelligence?

Consider a computer that is mastering chess. How could a human give appropriate feedback to the computer if the human has not mastered chess? The human might criticize a move that the computer makes, only to realize later that the machine was correct.

With increasingly intelligent phones and computers, a similar problem is bound to occur. Eventually, Christiano explains, “we need to handle the case where AI systems surpass human performance at basically everything.”

If a phone knows much more about the world than its human evaluators, then the evaluators cannot trust their human judgment. They will need to “enlist the help of more AI systems,” Christiano explains.

Using AIs to Evaluate Smarter AIs

When a phone pings a user while he is on a call, the user’s reaction to this decision is crucial in determining whether the phone will interrupt users during future phone calls. But, as Christiano argues, “if a more advanced machine is much better than human users at understanding the consequences of interruptions, then it might be a bad idea to just ask the human ‘should the phone have interrupted you right then?’” The human might express annoyance at the interruption, but the machine might know better and understand that this annoyance was necessary to keep the user’s life running smoothly.

In these situations, Christiano proposes that human evaluators use other intelligent machines to do the grunt work of evaluating an AI’s decisions. In practice, a less capable System 1 would be in charge of evaluating the more capable System 2. Even though System 2 is smarter, System 1 can process a large amount of information quickly, and can understand how System 2 should revise its behavior. The human trainers would still provide input and oversee the process, but their role would be limited.

This training process would help Google understand how to create a safer and more intelligent AI – System 3 – which the human researchers could then train using System 2.

Christiano explains that these intelligent machines would be like little agents that carry out tasks for humans. Siri already has this limited ability to take human input and figure out what the human wants, but as AI technology advances, machines will learn to carry out complex tasks that humans cannot fully understand.

Can We Ensure that an AI Holds Human Values?

As Google and other tech companies continue to improve their intelligent machines with each evaluation, the human trainers will fulfill a smaller role. Eventually, Christiano explains, “it’s effectively just one machine evaluating another machine’s behavior.”

Ideally, “each time you build a more powerful machine, it effectively models human values and does what humans would like,” says Christiano. But he worries that these machines may stray from human values as they surpass human intelligence. To put this in human terms: a complex intelligent machine would resemble a large organization of humans. If the organization does tasks that are too complex for any individual human to understand, it may pursue goals that humans wouldn’t like.

In order to address these control issues, Christiano is working on an “end-to-end description of this machine learning process, fleshing out key technical problems that seem most relevant.” His research will help bolster the understanding of how humans can use AI systems to evaluate the behavior of more advanced AI systems. If his work succeeds, it will be a significant step in building trustworthy artificial intelligence.

This article is part of a Future of Life series on the AI safety research grants, which were funded by generous donations from Elon Musk and the Open Philanthropy Project.