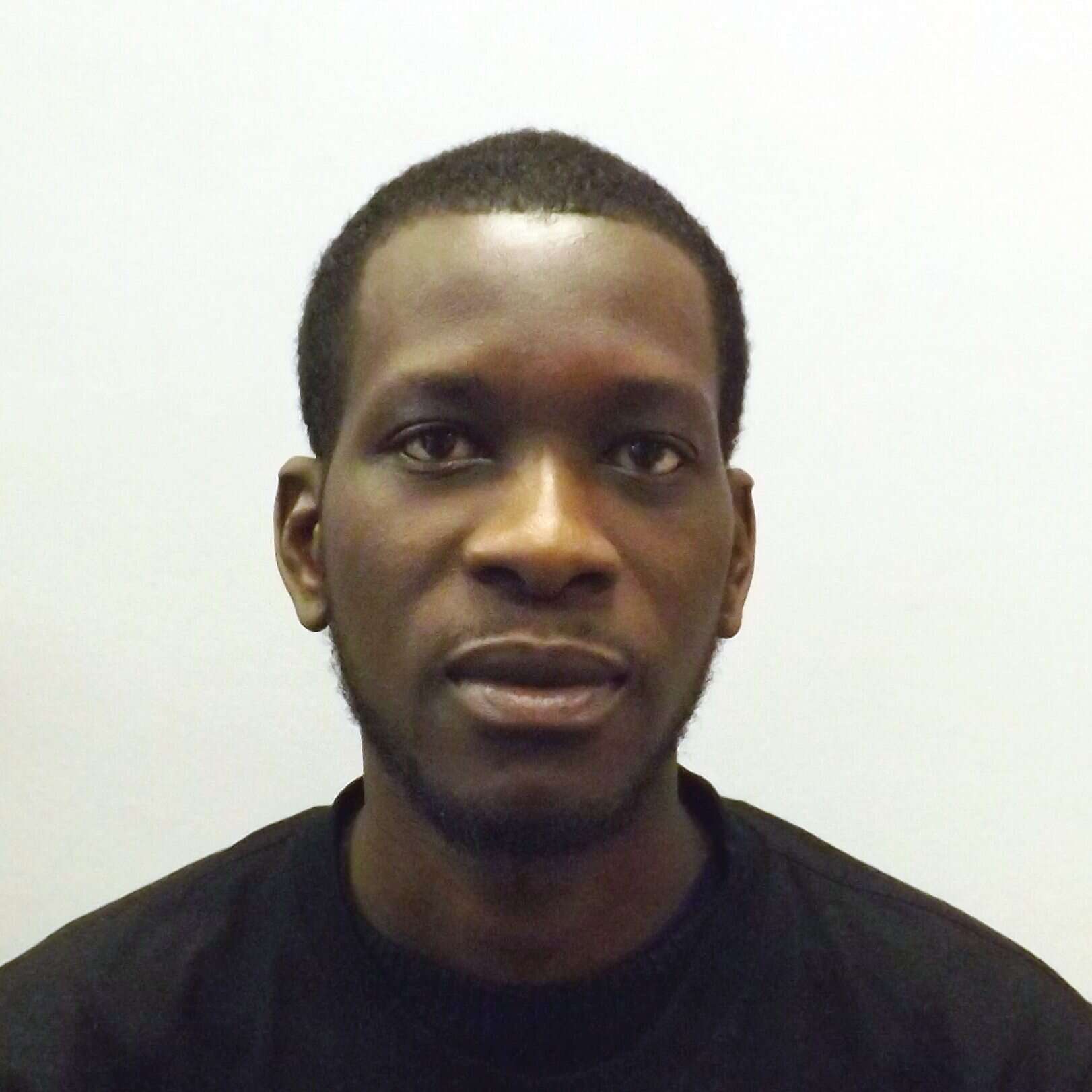

Charles Ikem

Why do you care about AI Existential Safety?

I care because some of the most horrific anecdotes about algorithmic harms and injustice are tied to premature AI deployments that led to social injustice and unfair denial of benefits. These cases show the importance of both understanding what is happening inside automated systems and giving people meaningful ways to challenge them. Critics have also pointed out that the incentives of those designing these programs are not always aligned with easy-to-use interfaces and intelligible processes.

Please give at least one example of your research interests related to AI existential safety:

I’m interested in transparency, oversight, and contestability in AI systems. Today, AI is often designed without any defined character: it exhibits arbitrary behaviors and personalities, and there is no transparency about how it will behave in particular situations. As AI becomes an integral part of our lives, performing useful functions such as senior care or engaging with people in serious situations, its behavior must be thoughtfully designed and grounded in clear values that define its character.