10 Reasons Why Autonomous Weapons Must be Stopped

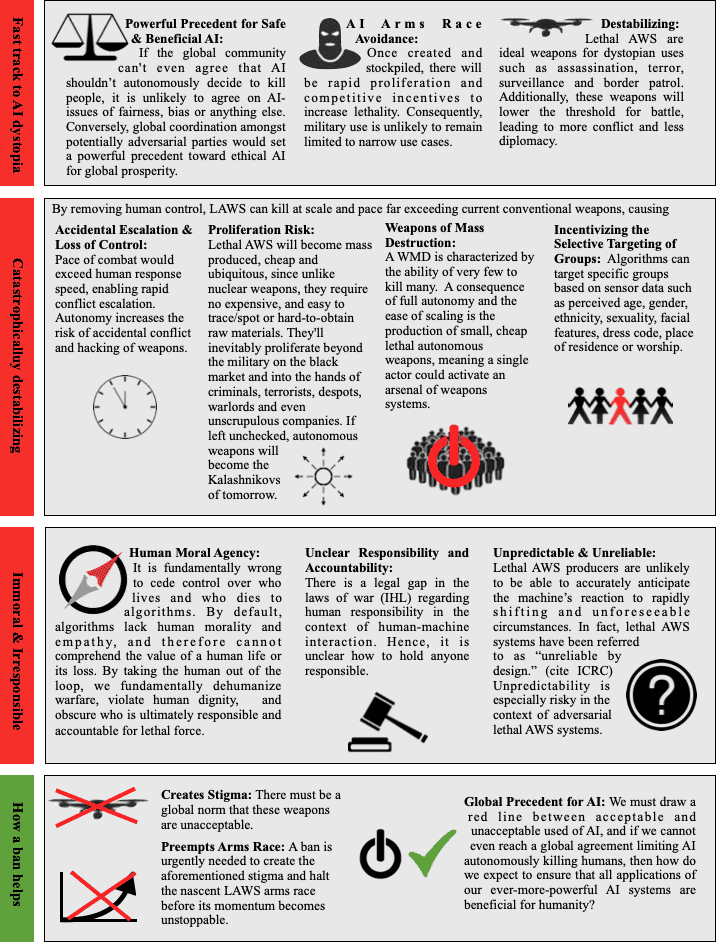

Lethal autonomous weapons pose a number of severe risks. These risks significantly outweigh any benefits they may provide, even for the world’s most advanced military programs.

In fact, these weapons have been referred to as “The Third Revolution In Warfare” because of their enormous potential to harm society.

So, what are the main risks posed by the development of this new type of weapon?

Safety risks

1 – Unpredictability

Lethal autonomous weapons are dangerously unpredictable in their behaviour. Complex interactions between machine learning-based algorithms and a dynamic operational context make it extremely difficult to predict the behaviour of these weapons in real-world settings. Moreover, the weapons systems are unpredictable by design; they’re programmed to behave unpredictably in order to remain one step ahead of enemy systems.

2 – Escalation

Given the speed and scale at which they are capable of operating, autonomous weapons systems introduce the risk of accidental and rapid conflict escalation. Recent research by RAND found that “the speed of autonomous systems did lead to inadvertent escalation in the wargame” and concluded that “widespread AI and autonomous systems could lead to inadvertent escalation and crisis instability.” The United Nations Institute for Disarmament Research (UNIDIR) has concurred with RAND’s conclusion. Even the United States’ quasi-governmental National Security Commission on AI (NSCAI) acknowledged that “unintended escalations may occur for numerous reasons, including when systems fail to perform as intended, because of challenging and untested complexities of interaction between AI-enabled and autonomous systems on the battlefield, and, more generally, as a result of machines or humans misperceiving signals or actions.” The NSCAI went on to state that “AI-enabled systems will likely increase the pace and automation of warfare across the board, reducing the time and space available for de-escalatory measures.”

3 – Proliferation

Slaughterbots do not require costly or hard-to-obtain raw materials, making them extremely cheap to mass-produce. They’re also safe to transport and hard to detect. Once significant military powers begin manufacturing these weapons systems, they are bound to proliferate. They will soon appear on the black market, and then in the hands of terrorists wanting to destabilise nations, dictators oppressing their populace, and warlords wishing to perpetrate ethnic cleansing. Indeed, the U.S. National Security Commission on AI has identified reducing the risk of proliferation as a key priority in reducing the strategic risks of AI in the military.

4 – Lowered barriers to conflict

War has traditionally been costly, both in the expense of producing conventional weapons and in human lives. Arguably, this has sometimes acted as a disincentive to go to war and, on the flip side, incentivised diplomacy. The rise of cheap, scalable weapons may undermine this norm, thereby lowering the barrier to conflict. The risk of rapid and unintended escalation combined with the proliferation of lethal autonomous weapons would arguably have the same effect.

5 – Mass destruction

Lethal autonomous weapons are extremely scalable. This means that the level of harm you can do using autonomous weapons depends solely on the quantity of Slaughterbots in your arsenal, not on the number of people you have available to operate the weapons. This stands in stark contrast to conventional weapons: a military power cannot do twice as much harm simply by purchasing twice as many guns; it also needs to recruit twice as many soldiers to shoot those guns. A swarm of Slaughterbots, small or large, requires only a single individual to activate it, and then its component Slaughterbots would fire themselves.

The quality of scalability, together with the significant threat of proliferation, gives rise to the threat of mass destruction. The defining characteristic of a weapon of mass destruction is that it can be used by a single person to cause many fatalities directly, and with lethal autonomous weapons a single individual could theoretically activate a swarm of hundreds of Slaughterbots, if not thousands. Proliferation increases the likelihood that large quantities of these weapons will end up in the hands of someone inclined to wreak havoc, and scalability empowers that individual. These considerations have prompted some to classify certain types of autonomous weapons systems, namely Slaughterbots, as weapons of mass destruction.

6 – Selective targeting of groups

Selecting individuals to kill based on sensor data alone, especially through facial recognition or other biometric information, introduces substantial risks for the selective targeting of groups based on perceived age, gender, race, ethnicity or religious dress. Combine that with the risk of proliferation, and autonomous weapons could greatly increase the risk of targeted violence against specific classes of individuals, including even ethnic cleansing and genocide. Furthermore, facial recognition software has been shown to amplify bias, with higher error rates when identifying women and people of color. The potential disproportionate effects of lethal autonomous weapons on race and gender are key focus areas of civil society advocacy.

These threats are especially noteworthy given the growing use of facial recognition in policing and in ethnic discrimination, and given that some companies have cited their interest in developing lethal systems as a reason not to take a pledge against the weaponization of facial recognition software.

7 – AI Arms Race

Avoidance of an AI arms race is a foundational guiding principle of ethical artificial intelligence and yet, in the absence of a unified global effort to highlight the risks of lethal autonomous weapons and generate political pressure, an “AI military race has begun.” Arms race dynamics, which favour speed over safety, further compound the inherent risks of unpredictability and escalatory behaviour.

8 – Life-and-death decisions by algorithms

Algorithms are incapable of understanding or conceptualizing the value of a human life, and so should never be empowered to decide who lives and who dies. Lethal autonomous weapons represent a violation of that clear moral red line.

9 – Lack accountability

Delegating the decision to use lethal force to algorithms raises significant questions about who is ultimately responsible and accountable for the use of force by autonomous weapons, particularly given their tendency towards unpredictability. This “accountability gap” is arguably illegal, as “international humanitarian law requires that individuals be held legally responsible for war crimes and grave breaches of the Geneva Conventions. Military commanders or operators could be found guilty if they deployed a fully autonomous weapon with the intent to commit a crime. It would, however, be legally challenging and arguably unfair to hold an operator responsible for the unforeseeable actions of an autonomous robot.”

10 – Violate international humanitarian law

International humanitarian law (IHL) sets out the principles of distinction and proportionality. The principle of distinction establishes the obligation of parties in armed conflict to distinguish between civilian and military targets, and to direct their operations only against military objectives. The principle of proportionality prohibits attacks in conflict which expose civilian populations to harm that is excessive when compared to the expected military advantage gained.

It has been noted that “fully autonomous weapons would face significant obstacles to complying with the principles of distinction and proportionality. For example, these systems would lack the human judgment necessary to determine whether expected civilian harm outweighs anticipated military advantage in ever-changing and unforeseen combat situations.”

Further, it has been argued that autonomous weapons that target humans would violate the Martens Clause, a provision of IHL that establishes a moral baseline for judging emerging technologies. These systems would violate the dictates of public conscience and “undermine the principles of humanity because they would be unable to apply compassion or human judgment to decisions to use force.”

About the Future of Life Institute

The Future of Life Institute (FLI) is a global non-profit with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.
