State of California Endorses Asilomar AI Principles

On August 30, the State of California unanimously adopted legislation in support of the Future of Life Institute’s Asilomar AI Principles.

The Asilomar AI Principles are a set of 23 principles intended to promote the safe and beneficial development of artificial intelligence. The principles – which cover research issues, ethics and values, and longer-term issues – emerged from a collaboration between AI researchers, economists, legal scholars, ethicists, and philosophers in Asilomar, California, in January 2017.

The Principles are the most widely adopted effort of their kind. They have been endorsed by AI research leaders at Google DeepMind, Google Brain, Facebook, Apple, and OpenAI. Signatories include Demis Hassabis, Yoshua Bengio, Elon Musk, Ray Kurzweil, the late Stephen Hawking, Tasha McCauley, Joseph Gordon-Levitt, Jeff Dean, Tom Gruber, Anthony Romero, Stuart Russell, and more than 3,800 other AI researchers and experts.

With ACR 215 passing the State Senate with unanimous support, the California Legislature has now been added to that list.

Assemblyman Kevin Kiley, who led the effort, said, “By endorsing the Asilomar Principles, the State Legislature joins in the recognition of shared values that can be applied to AI research, development, and long-term planning — helping to reinforce California’s competitive edge in the field of artificial intelligence, while assuring that its benefits are manifold and widespread.”

The third Asilomar AI principle indicates the importance of constructive and healthy exchange between AI researchers and policymakers, and the passing of this resolution highlights the value of that endeavor. While the principles do not establish enforceable policies or regulations, the action taken by the California Legislature is an important and historic show of support across sectors towards a common goal of enabling safe and beneficial AI.

The Future of Life Institute (FLI), the nonprofit organization that led the creation of the Asilomar AI Principles, is thrilled by this latest development, and encouraged that the principles continue to serve as guiding values for the development of AI and related public policy.

“By endorsing the Asilomar AI Principles, California has taken a historic step towards the advancement of beneficial AI and highlighted its leadership of this transformative technology,” said Anthony Aguirre, cofounder of FLI and physics professor at the University of California, Santa Cruz. “We are grateful to Assemblyman Kevin Kiley for leading the charge and to the dozens of co-authors of this resolution for their foresight on this critical matter.”

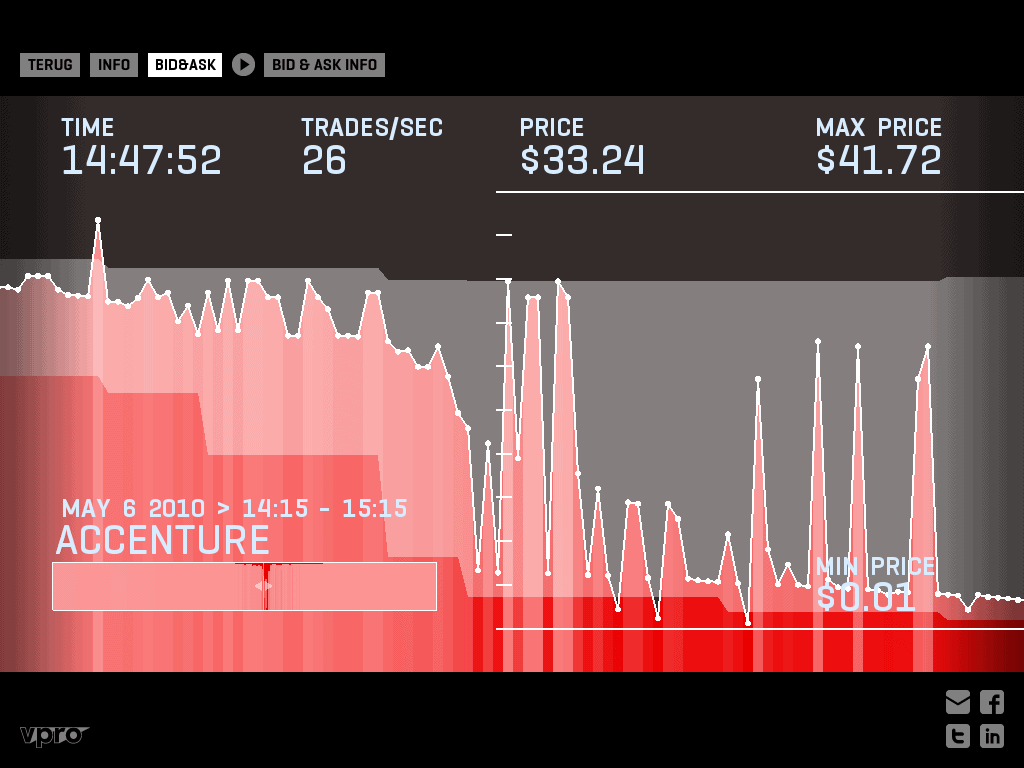

Profound societal impacts of AI are no longer merely a question of science fiction, but are already being realized today, from facial recognition technology and drone surveillance to the spread of targeted disinformation campaigns. Advances in AI are helping to connect people around the world, improve productivity and efficiency, and uncover novel insights. However, AI may also pose safety and security threats, exacerbate inequality, and constrain privacy and autonomy.

“New norms are needed for AI that counteract dangerous race dynamics and instead center on trust, security, and the common good,” says Jessica Cussins, AI Policy Lead for FLI. “Having the official support of California helps establish a framework of shared values between policymakers, AI researchers, and other stakeholders. FLI encourages other governmental bodies to support the 23 principles and help shape an exciting and equitable future.”

About the Future of Life Institute

The Future of Life Institute (FLI) is a global non-profit with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014.
