FLI December 2017 Newsletter

FLI Announces 2nd AI Safety Grants Competition

A Look Back at 2017 and the Next Step for AI Safety

Advancing AI safety lies at the core of FLI’s mission, and 2017 was an exceptional year for safety. We hosted our Beneficial AI conference in Asilomar, Max Tegmark released his new book, Life 3.0, and FLI worked with various nonprofits and government agencies to push for a ban on lethal autonomous weapons.

But aside from these major events, we also witnessed the tireless work of our AI grant recipients, as they’ve addressed technical safety problems, organized interdisciplinary workshops on AI, and published papers and news articles about their research.

Now, FLI seeks to expand on that first successful round of grants with a new grant round in 2018!

For many years, artificial intelligence (AI) research has been appropriately focused on the challenge of making AI effective, with significant recent success, and great future promise. This recent success has raised an important question: how can we ensure that the growing power of AI is matched by the growing wisdom with which we manage it?

The focus of this RFP is on technical research or other projects enabling development of AI that is beneficial to society and robust in the sense that the benefits have some guarantees: our AI systems must do what we want them to do.

If you’re interested in applying for a grant, or you know someone who is, please follow this link.

Check us out on SoundCloud and iTunes!

Beneficial AI and Existential Hope in 2018

For most of us, 2017 has been a roller coaster, from increased nuclear threats to incredible advancements in AI to crazy news cycles. But while it’s easy to be discouraged by various news stories, we at FLI find ourselves hopeful that we can still create a bright future. In this episode, the FLI team discusses the past year and the momentum we’ve built, including: the Asilomar Principles, our 2018 AI safety grants competition, the recent Long Beach workshop on Value Alignment, and how we’ve honored one of civilization’s greatest heroes.

FLI’s Biggest Events of 2017

Beneficial AI 2017: A Principled Discussion in Asilomar

FLI kicked off 2017 with our Beneficial AI conference in Asilomar, where a collection of over 200 AI researchers, economists, psychologists, authors and other thinkers got together to discuss the future of artificial intelligence (videos here).

The conference produced a set of 23 Principles to guide the development of safe and beneficial AI. The Principles have been signed and supported by over 1200 AI researchers and 2500 others, including Elon Musk and Stephen Hawking.

But the Principles were just a start to the conversation. After the conference, Ariel Conn began a series that looks at each principle in depth and provides insight from various AI researchers. Artificial intelligence will affect people across every segment of society, so we want as many people as possible to get involved. To date, tens of thousands of people have read these articles. You can read them all here and join the discussion!

News outlets including Business Insider, Inverse, and Newsweek have written about the Principles.

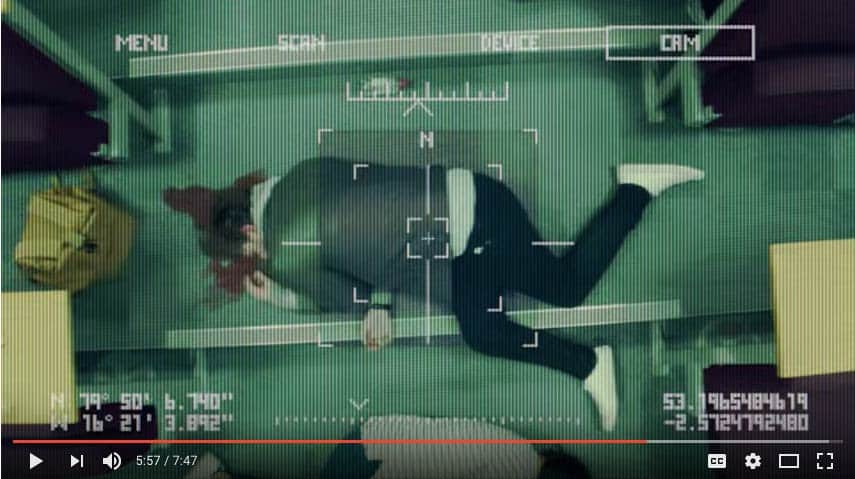

Slaughterbots: Will the UN Ban Autonomous Weapons?

Do you like Black Mirror? If so, check out this viral video, Slaughterbots, which received over 35 million views across platforms. Ahead of the UN’s meeting on lethal autonomous weapons, or “killer robots”, AI researcher and FLI advisor Stuart Russell released a short film detailing the dystopian nightmare that could result from palm-sized killer drones. It may seem like science fiction, but many of these technologies already exist today.

FLI helped develop this film to encourage the UN to begin negotiations for a ban on autonomous weapons and to ensure that they don’t lead to a destabilizing arms race. We’ve previously released two open letters calling for negotiations. Over 3700 AI researchers and 20,000 others signed the first letter in 2015, while the second, released this summer, was signed by leaders from top robotics companies around the world. Founders and CEOs of about 100 companies from 26 countries signed this second letter, which warns:

“Lethal autonomous weapons threaten to become the third revolution in warfare. Once developed, they will permit armed conflict to be fought at a scale greater than ever, and at timescales faster than humans can comprehend.”

Major news outlets covered this video, including CNN, The Washington Post, The Guardian, and Vice. Learn more at autonomousweapons.org.

Throughout 2017, FLI worked with the International Campaign to Abolish Nuclear Weapons (ICAN), PAX for Peace, and scientists across the world to push for an international ban on nuclear weapons. The ban successfully passed in July, and over 50 countries have signed it so far. And while the ban won’t eliminate all nuclear arsenals overnight, it is a big step towards stigmatizing nuclear weapons, just as the ban on landmines stigmatized those controversial weapons and pushed the superpowers to slash their arsenals.

At the initial UN negotiations in March, FLI presented a letter of support for the ban that has been signed by 3700 scientists from 100 countries – including 30 Nobel Laureates, Stephen Hawking, and former US Secretary of Defense William Perry. And in June, FLI presented a five-minute video to the UN delegates featuring statements from signatories.

“Scientists bear a special responsibility for nuclear weapons, since it was scientists who invented them and discovered that their effects are even more horrific than first thought”, the letter explains.

Formalizing a treaty to ban nuclear weapons is a big step towards a safer world, and in December, ICAN was awarded the Nobel Peace Prize for their work spearheading this effort.

The Inaugural Future of Life Award: Vasili Arkhipov

How can future generations repay someone whose actions very likely prevented global catastrophe? This is the question FLI asked when contemplating the heroics of the late Soviet naval officer, Vasili Arkhipov, who arguably saved civilization from all-out nuclear war during the Cuban Missile Crisis.

To honor Arkhipov and demonstrate our respect for civilization’s heroes, FLI members flew to London to deliver the Inaugural Future of Life Award and $50,000 to Arkhipov’s daughter, Elena, and grandson, Sergei.

The event was covered by The Times, The Guardian, The Independent, and The Atlantic.

LIFE 3.0: Living in the Age of Artificial Intelligence

What will happen when machines surpass humans at every task? Max Tegmark’s New York Times bestseller, Life 3.0: Being Human in the Age of Artificial Intelligence, explores how AI will impact life as it grows increasingly advanced and more difficult to control.

AI will impact everyone, and Life 3.0 aims to expand the conversation around AI to include all people so that we can create a truly beneficial future. This page features the answers from the people who have taken the survey that goes along with Max’s book. To join the conversation yourself, please take the survey at ageofai.org.

Tegmark spoke about the book on an FLI podcast as well as Sam Harris’ Waking Up podcast, and the book was covered by news outlets such as NPR, The Washington Post, and The Guardian.

Other Top Podcasts & Articles of 2017

Podcasts

- Life 3.0: Being Human in the Age of Artificial Intelligence With Max Tegmark

- Top AI Breakthroughs With Ian Goodfellow and Richard Mallah

- Climate Change With Brian Toon and Kevin Trenberth

- 80,000 Hours and Effective Altruism With Rob Wiblin and Brenton Mayer

- Law & Ethics of AI With Matt Scherer and Ryan Jenkins

Articles

- 90% of All the Scientists That Ever Lived Are Alive Today
  By Eric Gastfriend

- Developing Countries Can’t Afford Climate Change
  By Tucker Davey

- Artificial Photosynthesis: Can We Harness the Energy of the Sun as Well as Plants?
  By Tucker Davey

- Should Artificial Intelligence Be Regulated?
  By Anthony Aguirre, Ariel Conn, and Max Tegmark

- Artificial Intelligence and the Future of Work
  By Tucker Davey

Volunteers of the Year

Eric Gastfriend has been with FLI since its founding and heads FLI’s Japan outreach and translation efforts, leading a team of volunteers fluent in both Japanese and English. Over the past year, Eric and his team have fostered relations with Dwango AI Lab and the Whole Brain Architecture Initiative (WBAI), both based in Japan and focused on AI R&D. Through these collaborations, they published a Japanese translation of FLI’s research priorities document in the Japanese Society for Artificial Intelligence Journal. They also interviewed Hiroshi Yamakawa, the Chief of Dwango AI Lab and chairperson at WBAI, about the state of AI research in Japan and how its societal implications compare with those in the Western world. Eric also wrote this year’s most popular article on FLI, “90% of All the Scientists That Ever Lived Are Alive Today,” which has been read by over 50,000 people to date.

Jacob Beebe joined FLI a year and a half ago and has been one of our most active volunteers. In particular, he is heavily involved with our Russian outreach efforts, having co-managed the recruitment and interviewing of new volunteer translators and proofread their translations. Additionally, Jake has helped with FLI’s nuclear weapons awareness projects, researching the social and geopolitical reasons why countries hold a particular stance on nuclear weapons. He is currently also our “czar” of Russian social media, having set up and managed FLI’s profiles there. We look forward to working with him more in the new year.

What we’ve been up to

Lucas Perry, Meia Chita-Tegmark & Max Tegmark organized a one-day workshop with the Berggruen Institute & CIFAR on the Ethics of Value Alignment right after NIPS, where AI researchers, philosophers and other thought leaders brainstormed about promising research directions. For example, if the technical value-alignment problem can be solved, then what values should AI be aligned with, and through what process should these values be selected?

Richard Mallah participated in the Ethics of Value Alignment Workshop that FLI co-organized in Long Beach, CA. He also gave a talk on AI safety entitled “Towards Robustness Criteria for Highly Capable AI” at the Q4 Boston Machine Intelligence Dinner hosted by Talla, attended by 50+ senior AI researchers and AI entrepreneurs. Richard additionally led a discussion group on AI safety at rationalist hub Macroscope in Montreal.

Jessica Cussins gave a talk on AI policy at Tencent in Palo Alto to a group that included Tencent researchers and legal scholars from China. She also gave a public comment at the San Mateo County Board of Supervisors meeting in which she supported the resolution calling on the United Nations to develop an international agreement restricting the development and use of lethal autonomous weapons.

Ariel Conn participated in the first discussion group of the N Square Innovators Network. The group is bringing together people from diverse backgrounds to develop new methods and tools for addressing the nuclear threat and raising public awareness of the issue.

Viktoriya Krakovna gave a talk at the NIPS conference on Interpretability for AI safety and Reinforcement Learning with a Corrupted Reward Channel. NIPS (Neural Information Processing Systems) is the biggest AI conference of the year, and over 8000 researchers attended this month’s event.