
FLI May 2017 Newsletter

Reducing the Threat of Nuclear War

Experts Say Nuclear Weapons Are a Bigger Risk Today Than During Cold War

Until recently, many Americans believed that nuclear weapons no longer represented the same threat they did during the Cold War. However, recent events and aggressive posturing between nuclear nations, especially the U.S., Russia, and North Korea, have increased public awareness and concern. These fears were addressed at a recent MIT conference on nuclear weapons.

“The possibility of a nuclear bomb going off is greater today than 20 years ago,” said Ernest Moniz, former Secretary of Energy and a keynote speaker.

California Congresswoman Barbara Lee, another keynote speaker, recently returned from a trip to South Korea and Japan. Of the trip, she said, “We went to the DMZ, and I saw how close to nuclear war we really are.”

“We must prevent the president from launching nuclear weapons without a declaration from Congress,” Lee said.

Learn more about the event and why nuclear weapons are thought to be a bigger risk today than ever before.

Despite what you hear in the news, an atomic war between the superpowers is still the biggest threat. Delegates from most United Nations member states are gathering in New York next month to negotiate a nuclear weapons ban, and 30 Nobel Laureates, a former U.S. Secretary of Defense, and over 3,000 other scientists from 84 countries have signed an open letter in support. Why?

This one-day event featured a great speaker lineup, including Iran-deal broker Ernie Moniz (MIT, former Secretary of Energy), California Congresswoman Barbara Lee, Lisbeth Gronlund (Union of Concerned Scientists), Joe Cirincione (Ploughshares), former congressman John Tierney, MA state reps Denise Provost and Mike Connolly, and Cambridge Mayor Denise Simmons. The conference addressed the political and economic realities of nuclear weapons in an attempt to stimulate and inform the kind of social movement needed to change national policy, with a focus on concrete steps we can take to reduce the risks.

Check us out on SoundCloud and iTunes!

Podcast: Creative AI with Mark Riedl & Scientists Support a Nuclear Ban

This is a special two-part podcast. First, Mark and Ariel discuss how AIs can use stories and creativity to understand and exhibit culture and ethics, while also gaining “common sense reasoning.” They also discuss the “big red button” problem in AI safety research, the process of teaching “rationalization” to AIs, and computational creativity. Mark is an associate professor at the Georgia Tech School of Interactive Computing, where his recent work has focused on human-AI interaction and how humans and AI systems can understand each other.

Then, we hear from scientists, politicians and concerned citizens about why they support the upcoming UN negotiations to ban nuclear weapons. Ariel interviewed a broad range of people over the past two months, and highlights are compiled here, including comments by Congresswoman Barbara Lee, Nobel Laureate Martin Chalfie, and FLI president Max Tegmark.

This Month’s Most Popular Articles

Global Catastrophic Risks 2017

Anthony Aguirre, Ariel Conn, Viktoriya Krakovna, Richard Mallah, and Max Tegmark all contributed to the AI section of the Global Challenges Foundation’s annual report on Global Catastrophic Risks (GCRs). The report was among the publications presented to leaders and participants of this year’s G7 meeting. It discusses weapons of mass destruction, catastrophic climate change, ecological collapse, pandemics, artificial intelligence, and other 21st-century risks.

The 2017 Genome Project Write (GP-write) event included roughly 250 people from 10 countries with backgrounds in science, ethics, law, government, and more. The project will work only in cells rather than producing whole organisms, and this work could be used to solve problems associated with climate change and the environment, invasive species, pathogens, and food insecurity. “This project not only changes the way the world works, but it changes the way we work in the world,” said GP-write lead author Nancy J. Kelly.

By Ariel Conn

Just one day before the WannaCry ransomware attack shut down 16 hospitals in the UK and hit hundreds of thousands of organizations and individuals in over 150 countries, the Director of National Intelligence, Daniel Coats, released the Worldwide Threat Assessment of the US Intelligence Community. The threats it lists include cyberattacks, nuclear weapons, climate change, biodiversity loss, and artificial intelligence.

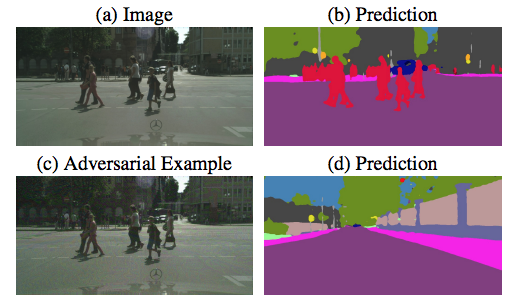

Machine Learning Security at ICLR 2017

By Viktoriya Krakovna

The 5th International Conference on Learning Representations featured many interesting papers on machine learning security. Papers covered advances in adversarial machine learning on both the attack and defense sides. “My overall impression is that adversarial attacks are still ahead of adversarial defense, but the defense side is starting to catch up,” Krakovna writes.

Breakthroughs in “deep learning” have led to a rapid increase in the sophistication of AI. Such systems can effectively “program themselves” by creating much or most of the code through which they operate. The code generated by such systems can be so complex that even the people who built and initially programmed the system may not be able to fully explain why it does what it does. “The opacity of modern AI systems is… one of the major barriers that makes it difficult to effectively manage the public risks associated with AI.”

What we’ve been up to this month

Ariel Conn, Lucas Perry, Meia Chita-Tegmark, and Max Tegmark helped organize and participated in MIT’s conference “Reducing the Threat of Nuclear War” this month. The conference was sponsored by MIT Radius (formerly the Technology and Culture Forum), Massachusetts Peace Action, the American Friends Service Committee, and FLI.

Viktoriya Krakovna attended the 5th International Conference on Learning Representations (ICLR) at the end of April. The conference included keynote speakers and panelists discussing representation learning and reinforcement learning.

This month, Ariel Conn attended GP-write, which brought together journalists and lead researchers in genomics. Overall, the mood at the conference was one of excitement about the possibilities that GP-write could unleash.