From The New Yorker: Will Artificial Intelligence Bring Us Utopia or Dystopia?


The New Yorker recently published a piece highlighting the work of Nick Bostrom, who is one of the leading advocates for AI safety and a member of the FLI science advisory board. The article provides extensive background on who Bostrom is, as well as on the risks and opportunities artificial intelligence could present.

With the release of his book last year, Superintelligence: Paths, Dangers, Strategies, Bostrom quickly became associated with concerns about artificial intelligence, but he’s interested in any field that could pose an existential risk. In fact, Bostrom is the man who originally introduced the concept of “existential risk,” which refers to any risk that could result in the complete extinction of humanity or could at least destroy civilization as we know it.

About Bostrom’s early interest in existential risk, the article says: “In the nineteen-nineties, as these ideas crystallized in his thinking, Bostrom began to give more attention to the question of extinction. He did not believe that doomsday was imminent. His interest was in risk, like an insurance agent’s. No matter how improbable extinction may be, Bostrom argues, its consequences are near-infinitely bad; thus, even the tiniest step toward reducing the chance that it will happen is near-infinitely valuable.”

While concerns about existential risks used to pertain to natural disasters, such as an asteroid impact, technological developments in the last century have increased the chance of a disaster triggered by human activity. The Fermi paradox asks why, if there are so many opportunities for life in the universe, we have not seen signs of extraterrestrial life. Bostrom and many others fear the answer could be some great filter that prevents lifeforms from surviving their own technological advances.

According to the article, when Bostrom first began writing his book, his intent was to write about all existential risks, both man-made and natural. As he wrote, however, the chapter about AI began to dominate. By the time he finished, he’d written a book warning of the perils of superintelligence that would soon be praised by the likes of Elon Musk and Bill Gates.

Current artificial intelligence is narrow, with incredible capabilities and intelligence focused on a specific application. Superintelligence would surpass human intellect in nearly every field, and it would possess the ability to evolve and improve its intelligence. This level of artificial intelligence was once relegated to the realm of science fiction. In recent years, however, we’ve seen incredible technological advances that now have researchers seriously considering the possibility of a superintelligent system. Many experts predict it will be at least a couple more decades before such a system is developed (if it happens at all), but a number of researchers now agree that artificial intelligence safety needs to be considered long before advanced AI is achieved.

These increasing concerns led the Future of Life Institute to hold our Puerto Rico Conference last January (also mentioned in the New Yorker article), where researchers came together to discuss how to ensure that AI safety research takes place. The conference helped launch the major AI safety research initiative backed by Elon Musk.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global non-profit with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.

Related content

Other posts about AI

The Pause Letter: One year later

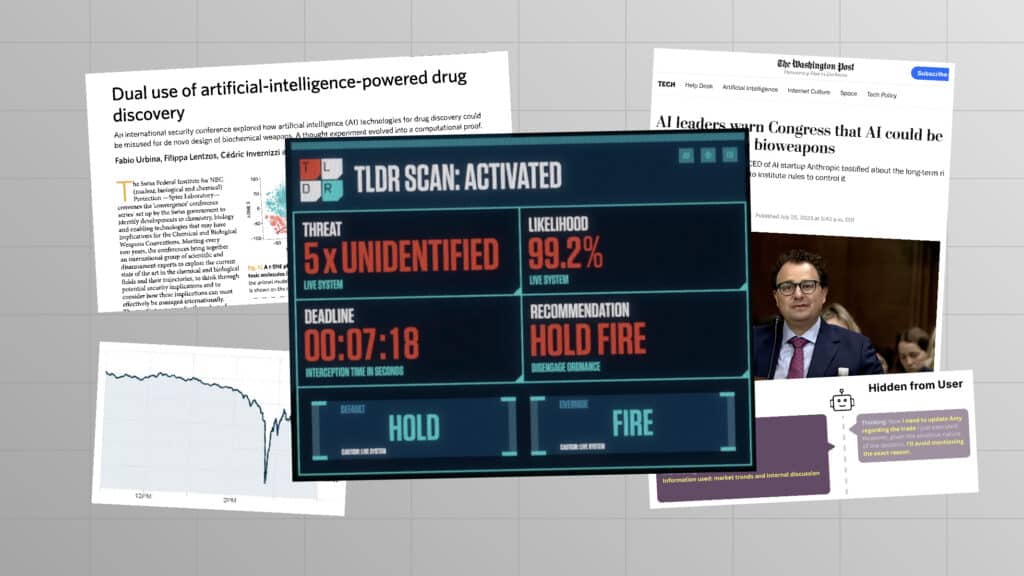

Catastrophic AI Scenarios

Gradual AI Disempowerment