Our mission

Steering transformative technology towards benefitting life and away from extreme large-scale risks.

We believe that the way powerful technology is developed and used will be the most important factor in determining the prospects for the future of life. This is why we have made it our mission to ensure that technology continues to improve those prospects.

Recent updates

Happening now

FLI's President Max Tegmark and Director of Policy Mark Brakel participated in 'The Singapore Consensus on Global AI Safety Research Priorities'. The report sets an agenda for the most important AI safety research needed today, as determined by a gathering of 100+ top AI researchers and scientists. See the research priorities here, or read about it in WIRED.

Focus areas

The risks we focus on

We are currently concerned by three major risks. They all hinge on the development, use and governance of transformative technologies. We focus our efforts on guiding the impacts of these technologies.

Artificial Intelligence

From recommender algorithms to chatbots to self-driving cars, AI is changing our lives. As the impact of this technology grows, so will the risks.

Biotechnology

From the accidental release of engineered pathogens to the backfiring of a gene-editing experiment, the dangers from biotechnology are too great for us to proceed blindly.

Nuclear Weapons

Almost eighty years after their introduction, the risks posed by nuclear weapons are as high as ever, and new research reveals that the impacts are even worse than previously reckoned.

UAV Kargu autonomous drones at the campus of OSTIM Technopark in Ankara, Turkey - June 2020.

Our work

How we are addressing these issues

There are many potential levers of change for steering the development and use of transformative technologies. We target a range of these levers to increase our chances of success.

Policy and Research

We engage in policy advocacy and research across the United States, the European Union and around the world.

Our Policy and Research work

Outreach

We produce educational materials aimed at informing public discourse, as well as encouraging people to get involved.

Our Outreach work

Grantmaking

We provide grants to individuals and organisations working on projects that further our mission.

Our Grant Programs

Futures

The Futures program aims to guide humanity towards the beneficial outcomes made possible by transformative technologies.

Our Futures Work

Spotlight

We must not build AI to replace humans.

A new essay by Anthony Aguirre, Executive Director of the Future of Life Institute

Humanity is on the brink of developing artificial general intelligence that exceeds our own. It's time to close the gates on AGI and superintelligence... before we lose control of our future.

Featured Projects

What we're working on

Read about some of our current featured projects:

Perspectives of Traditional Religions on Positive AI Futures

Most of the global population participates in a traditional religion. Yet the perspectives of these religions are largely absent from strategic AI discussions. This initiative aims to support religious groups in voicing their faith-specific concerns and hopes for a world with AI, and to work with them to resist the harms and realise the benefits.

Recommendations for the U.S. AI Action Plan

The Future of Life Institute proposal for President Trump’s AI Action Plan. Our recommendations aim to protect the presidency from AI loss-of-control, promote the development of AI systems free from ideological or social agendas, protect American workers from job loss and replacement, and more.

Multistakeholder Engagement for Safe and Prosperous AI

FLI is launching new grants to educate and engage stakeholder groups, as well as the general public, in the movement for safe, secure and beneficial AI.

Digital Media Accelerator

The Digital Media Accelerator supports digital content from creators raising awareness and understanding about ongoing AI developments and issues.

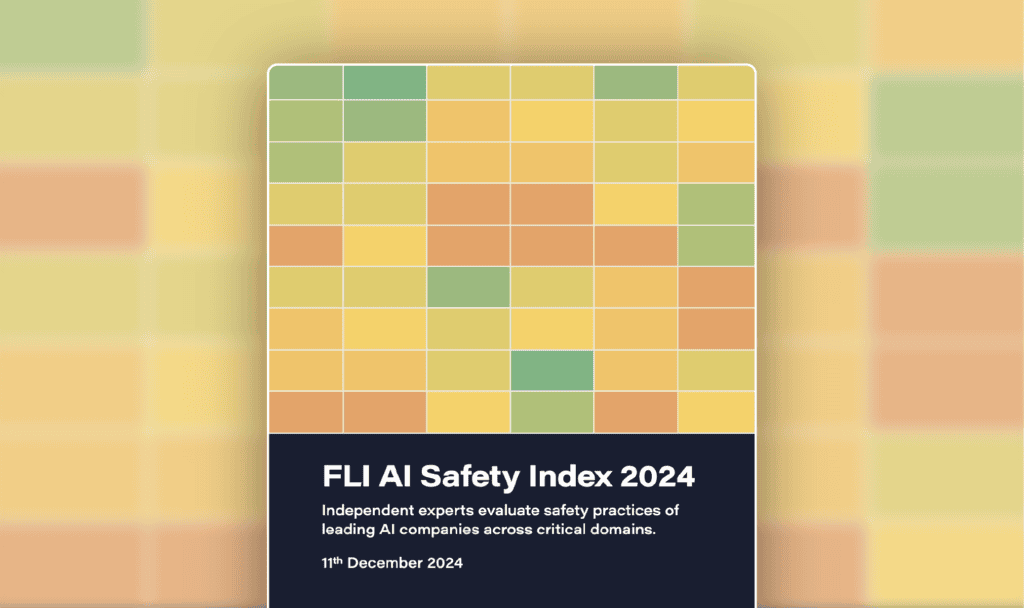

FLI AI Safety Index 2024

Seven AI and governance experts evaluate the safety practices of six leading general-purpose AI companies.

AI Convergence: Risks at the Intersection of AI and Nuclear, Biological and Cyber Threats

The dual-use nature of AI systems can amplify the dual-use nature of other technologies—this is known as AI convergence. We provide policy expertise to policymakers in the United States in three key convergence areas: biological, nuclear, and cyber.

Superintelligence Imagined Creative Contest

A contest for the best creative educational materials on superintelligence, its associated risks, and the implications of this technology for our world. 5 prizes at $10,000 each.

The Elders Letter on Existential Threats

The Elders, the Future of Life Institute and a diverse range of preeminent public figures are calling on world leaders to urgently address the ongoing harms and escalating risks of the climate crisis, pandemics, nuclear weapons, and ungoverned AI.

Implementing the European AI Act

Our key recommendations include broadening the Act’s scope to regulate general purpose systems, and extending the definition of prohibited manipulation to cover any type of manipulative technique as well as manipulation that causes societal harm.

Educating about Autonomous Weapons

Military AI applications are rapidly expanding. We develop educational materials about how certain narrow classes of AI-powered weapons can harm national security and destabilize civilization, notably weapons where kill decisions are fully delegated to algorithms.

Global AI governance at the UN

Our involvement with the UN's work spans several years and initiatives, including the Roadmap for Digital Cooperation and the Global Digital Compact (GDC).

Future of Life Award

Every year, the Future of Life Award is given to one or more unsung heroes who have made a significant contribution to preserving the future of life.

View all projects

Newsletter

Regular updates about the technologies shaping our world

Every month, we bring 41,000+ subscribers the latest news on how emerging technologies are transforming our world. It includes a summary of major developments in our cause areas, and key updates on the work we do. Subscribe to our newsletter to receive these highlights at the end of each month.

Future of Life Institute Newsletter: One Big Beautiful Bill…banning state AI laws?!

Plus: Updates on the EU AI Act Code of Practice; the Singapore Consensus; open letter from Evangelical leaders; and more.

Maggie Munro

31 May, 2025

Future of Life Institute Newsletter: Where are the safety teams?

Plus: Online course on worldbuilding for positive futures with AI; new publications about AI; our Digital Media Accelerator; and more.

Maggie Munro

1 May, 2025

Future of Life Institute Newsletter: Recommendations for the AI Action Plan

Plus: FLI Executive Director's new essay on keeping the future human; "Slaughterbots: A treaty on the horizon"; apply to our new Digital Media Accelerator; and more!

Maggie Munro

31 March, 2025

Read previous editions

Our content

Latest posts

The most recent posts we have published:

Say No to the Federal Block on AI Safeguards

We must halt the Big Tech attempt to undermine AI safeguards.

6 June, 2025

Are we close to an intelligence explosion?

AIs are inching ever-closer to a critical threshold. Beyond this threshold lie great risks—but crossing it is not inevitable.

21 March, 2025

The Impact of AI in Education: Navigating the Imminent Future

What must be considered to build a safe but effective future for AI in education, and for children to be safe online?

13 February, 2025

Context and Agenda for the 2025 AI Action Summit

The AI Action Summit will take place in Paris on 10-11 February 2025. Here we list the agenda and key deliverables.

31 January, 2025

Papers

The most recent policy and research papers we have published:

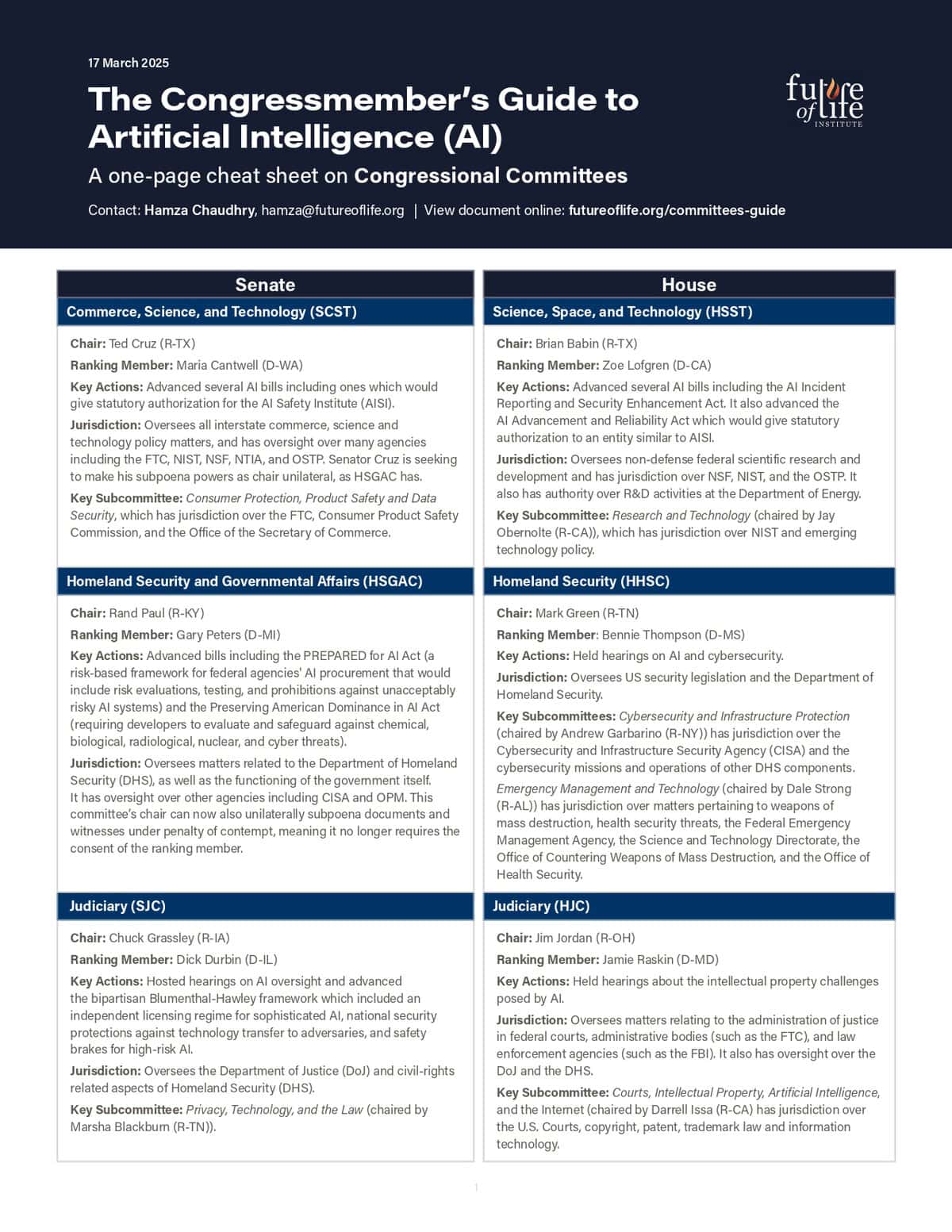

Staffer’s Guide to AI Policy: Congressional Committees and Relevant Legislation

March 2025

Recommendations for the U.S. AI Action Plan

March 2025

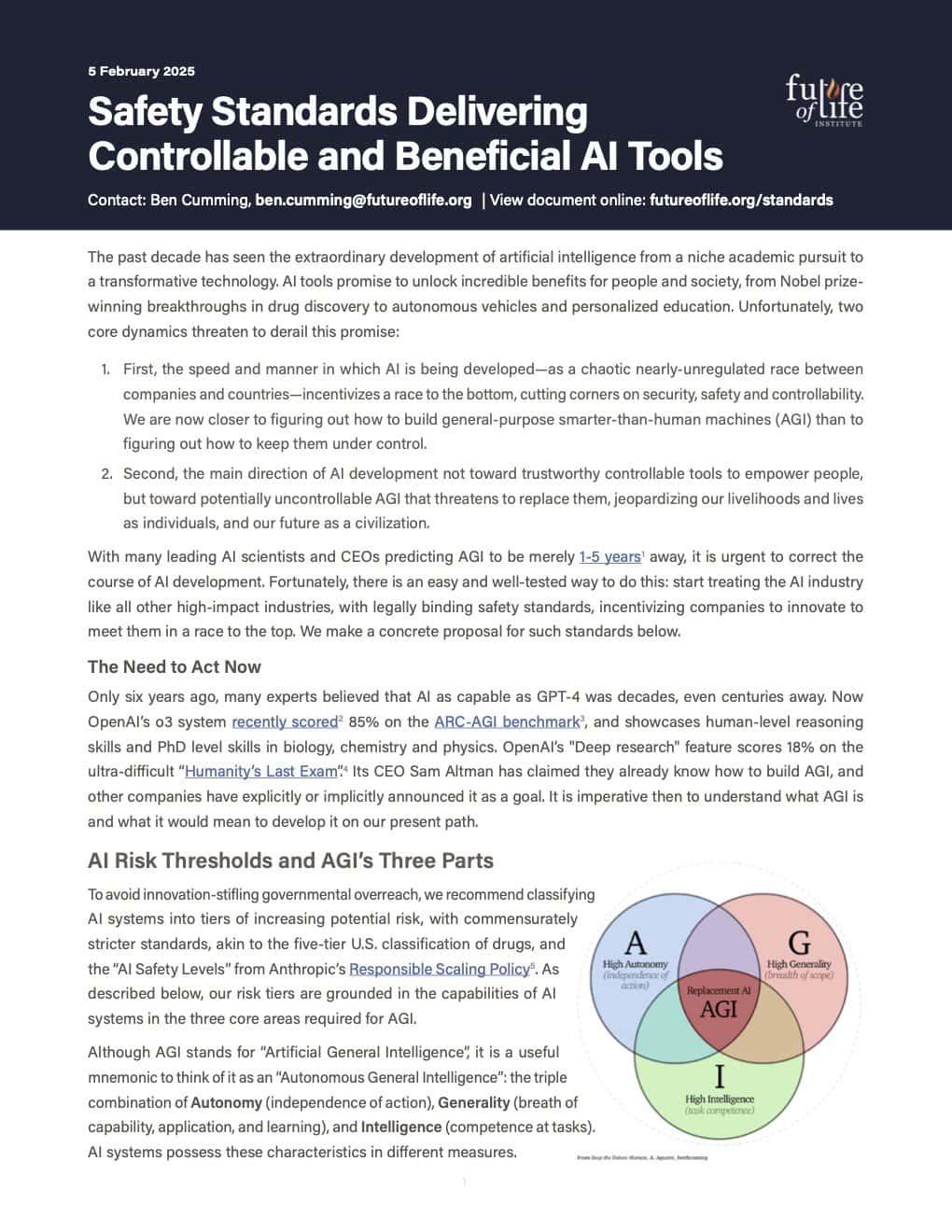

Safety Standards Delivering Controllable and Beneficial AI Tools

February 2025

Framework for Responsible Use of AI in the Nuclear Domain

February 2025

View all papers

Future of Life Institute Podcast

The most recent podcasts we have broadcast:

23 May, 2025

Facing Superintelligence (with Ben Goertzel)

View all episodes