FLI March, 2017 Newsletter

The UN Negotiates a Ban on Nuclear Weapons

FLI Delivers an Open Letter from over 3,000 Scientists to the UN

Delegates from most UN member states gathered in New York to negotiate a nuclear weapons ban, where they also received a letter of support that has been signed by thousands of scientists from over 80 countries – including 29 Nobel Laureates, Stephen Hawking, and a former US Secretary of Defense.

“Scientists bear a special responsibility for nuclear weapons, since it was scientists who invented them and discovered that their effects are even more horrific than first thought,” the letter explains.

The letter was delivered at 1pm on Monday, March 27, in the UN General Assembly Hall to Her Excellency Ms. Elayne Whyte Gómez from Costa Rica, who has presided and will continue to preside over the ongoing negotiations.

None of the supporters of these UN negotiations are naive enough to think that a treaty will magically rid the world of nuclear weapons. Rather, the goal is to stigmatize nuclear weapons, just as the ban on landmines stigmatized those controversial weapons and pushed the superpowers to slash their arsenals.

The open letter is an expression of support from scientists for the UN negotiations to ban nuclear weapons. Over 3,400 scientists from a multitude of different disciplines have signed in recognition that, as Ronald Reagan expressed, “A nuclear war cannot be won and must never be fought.”

Most people and governments are horrified by the idea of children and other helpless civilians suffering and dying, even during war. Finding a way to prevent the unnecessary slaughter of innocents has brought over 115 countries to the United Nations in New York this week to begin negotiating a historic treaty that would, once and for all, ban nuclear weapons.

Testimony by Sue Coleman-Haseldine, Nuclear Bomb Testing Survivor

Sue survived bomb tests near her home in Australia and has firsthand experience with the deadly effects that nuclear weapons leave behind long after their initial detonations. She recounted these experiences to members of the UN during negotiations for a ban on nuclear weapons, and she expressed her full support for a ban.

Testimony by Fujimori Toshiki, Hiroshima Survivor

Fujimori Toshiki addressed the United Nations on the first day of negotiations for a treaty to ban nuclear weapons. He was just 1 year and 4 months old when the bomb was dropped on Hiroshima, and he recounted the experiences of his family and community on that day to the assembly.

Check us out on SoundCloud and iTunes!

Podcast: Law and Ethics of AI

With Ryan Jenkins and Matt Scherer

The rise of artificial intelligence presents not only technical challenges but also important legal and ethical challenges for society, especially regarding machines like autonomous weapons and self-driving cars. To discuss these issues, I interviewed Matt Scherer and Ryan Jenkins. Matt is an attorney and legal scholar whose scholarship focuses on the intersection between law and artificial intelligence. Ryan is an assistant professor of philosophy and a senior fellow at the Ethics and Emerging Sciences group at California Polytechnic State University, where he studies the ethics of technology. In this podcast, we discuss accountability and transparency with autonomous systems, government regulation vs. self-regulation, fake news, and the future of autonomous systems.

March Madness Campaign

The nuclear weapons threat is going from bad to worse, so we’re getting non-alternative facts out. We’ve developed popular videos and apps showing nuclear near-misses and overkill target maps, and we’ve gotten over 3,000 scientists to sign our open letter in support of this week’s UN nuclear disarmament negotiations. Can you help us do more? Your impact will be doubled during our March Madness Campaign, when two idealistic donors will match your gift dollar-for-dollar, up to $70,000 until April 7.

The Asilomar AI Principles

Is an AI Arms Race Inevitable?

By Ariel Conn

The AI Arms Race Principle states that “An arms race in lethal autonomous weapons should be avoided.” This principle seems simple and straightforward at first glance, but just what counts as a lethal autonomous weapon and the means by which we can avoid such an arms race are difficult and open questions. Continuing our Principles series, we interview several professors and academics for their opinions on these issues.

The Shared Prosperity Principle, developed at our Beneficial AI 2017 conference, seeks to establish that the economic prosperity created by AI should be shared widely. Continuing our AI Principles series, we interviewed several academics and professors for their opinions on this principle.

At our Beneficial AI 2017 conference, we developed the Risks Principle, which seeks to establish the importance of risk mitigation efforts in relation to dangers posed by advanced AI systems. We interviewed professors and academics who signed onto the principles for their opinions on this principle.

This Month’s Most Popular Articles

Using Machine Learning to Address AI Risk

This talk by Jessica Taylor serves as a quick survey of the kinds of technical problems being worked on under the “Alignment for Advanced ML Systems” research agenda at the Machine Intelligence Research Institute.

How Self-Driving Cars Use Probability

By Tucker Davey

Human drivers observe their environment and make decisions based on the likelihood of certain things happening. Autonomous systems such as self-driving cars will make similar decisions based on probabilities, but through a different process. Unlike a human who trusts intuition and experience, these autonomous cars calculate the probability of certain scenarios using data collectors and reasoning algorithms.

The AI Debate Must Stay Grounded in Reality

By Vincent Conitzer

Progress in artificial intelligence has been rapid in recent years. Computer programs are dethroning humans in games ranging from Jeopardy to Go to poker, and AI is starting to outperform humans in image and speech recognition. Given this rapid change, it is important that we ensure that our conversations do not fall into speculation and science fiction.

By David Wright

China proposed a way to reduce tensions on the Korean peninsula: Pyongyang would freeze its missile and nuclear programs in exchange for Washington and Seoul halting their current round of military exercises. China also sees this as a way of starting talks between the United States and North Korea, which it believes are necessary to resolve hostilities on the peninsula.

What we’ve been up to this month

Attending UN Negotiations to Ban Nuclear Weapons

Ariel Conn, Lucas Perry, Meia Chita-Tegmark, and Max Tegmark all participated in events surrounding the UN negotiations to create a treaty to ban nuclear weapons. This included presenting the nuclear open letter to the President of the conference, hosting a side event on financial stigmatization of nuclear weapons, co-hosting a reception that brought together scientists, diplomats, and NGOs, and many other small tasks.

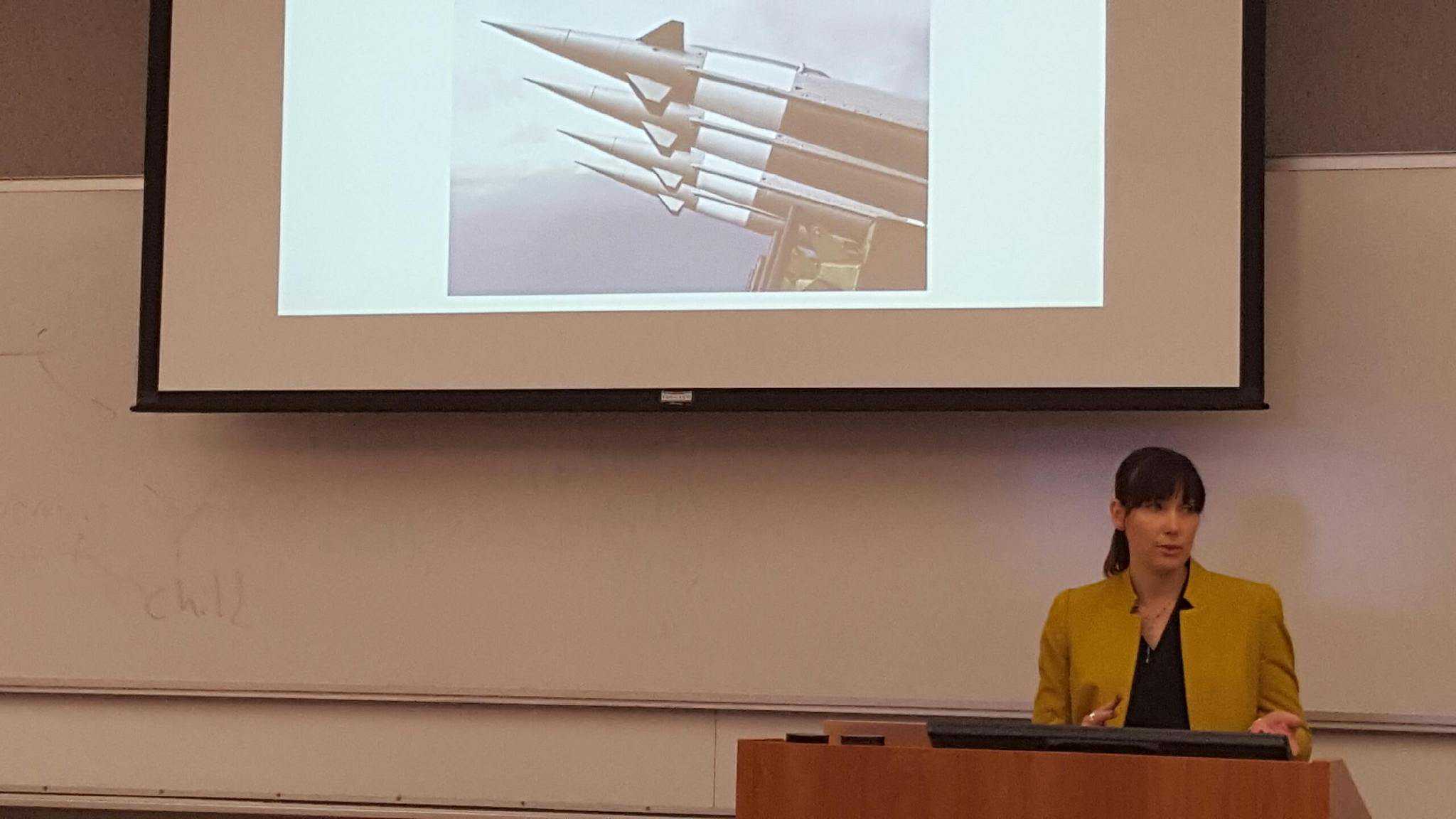

Ariel Conn was invited to speak to the International Law Society of Rutgers Law School. She discussed the current threat of nuclear weapons and the ongoing negotiations at the UN for a ban on nuclear weapons.

Richard Mallah participated in a roundtable discussion on the future of automation and work at the Harvard Kennedy School. This interdisciplinary conversation brought together people from many professions adjacent to AI to explore potential future displacement scenarios and potential remedies.

Anthony Aguirre attended the UCLA Garrick Institute Colloquium on Catastrophic and Existential Risk. This colloquium sought to connect the existential risk community with people from the formal risk analysis and other adjoining communities.