What Google’s TPUs Mean for AI Timing and Safety


The following article was written by Jim Babcock and originally posted on Concept Space Cartography.

Last Wednesday, Google announced that AlphaGo was not powered by GPUs as everyone thought, but by Google’s own custom ASICs (application-specific integrated circuits), which they are calling “tensor processing units” (TPUs).

We’ve been running TPUs inside our data centers for more than a year, and have found them to deliver an order of magnitude better-optimized performance per watt for machine learning.

…

TPU is tailored to machine learning applications, allowing the chip to be more tolerant of reduced computational precision, which means it requires fewer transistors per operation.

So, what does this mean for AGI timelines, and how does the existence of TPUs affect the outcome when AGI does come into existence?

The development of TPUs accelerated the timeline of AGI development. This is fairly straightforward: researchers can run more experiments with more computing power, and algorithms that previously stretched past the limits of available computing power have become feasible.

If your estimate of when there will be human-comparable or superintelligent AGI was based on the very high rate of progress in the past year, then this should make you expect AGI to arrive later, because it explains some of that progress with a one-time gain that can’t be repeated. If your timeline estimate was based on extrapolating Moore’s Law or the rate of progress excluding the past year, then this should make you expect AGI to arrive sooner.

Some people model AGI development as a race between capability and control, and want us to know more about how to control AGIs before they’re created. Under a model of differential technological development, the creation of TPUs could be bad if it accelerates progress in AI capability more than it accelerates progress in AI safety. I have mixed feelings about differential technological development as applied to AGI; while the safety/control research has a long way to go, humanity faces a lot of serious problems which AGI could solve. In this particular case, however, I think the differential technological development model is wrong in an interesting way.

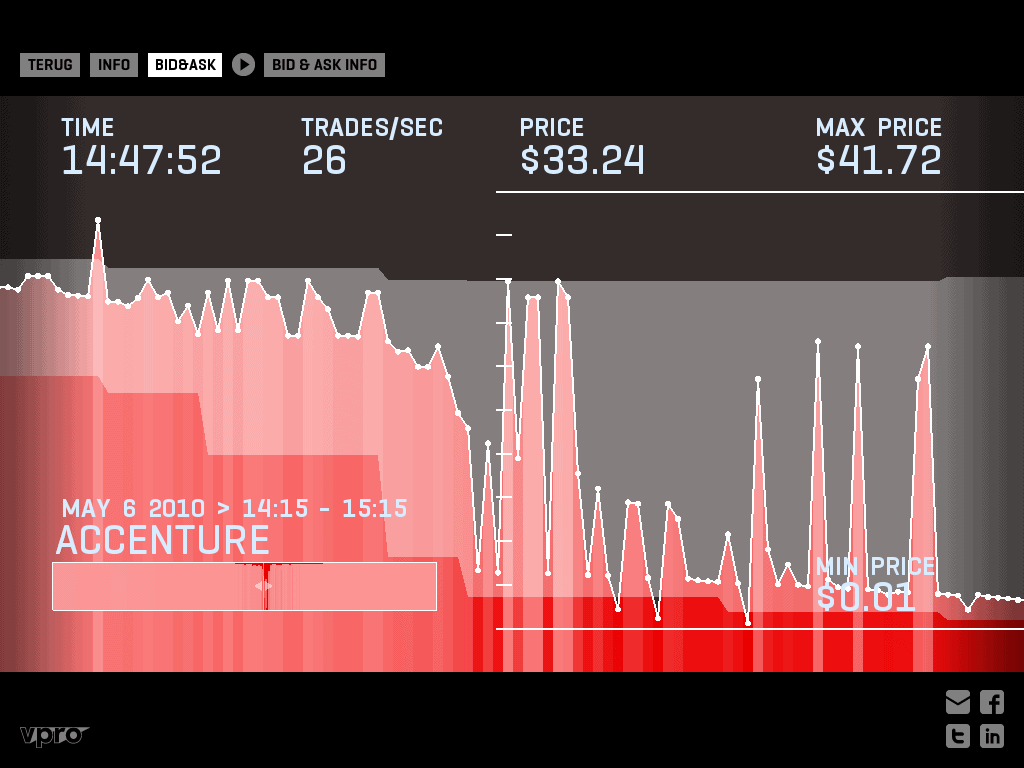

Take the perspective of a few years ago, before Google invested in developing ASICs. Switching from GPUs to more specialized processors looks pretty inevitable; it’s a question of when it will happen, not whether it will. Whenever the transition happens, it creates a discontinuous jump in capability; Google’s announcement calls it “roughly equivalent to fast-forwarding technology about seven years into the future”. This is slight hyperbole, but if you take it at face value, it raises an interesting question: which seven years do you want to fast-forward over? Suppose the transition were delayed for a very long time, until AGI of near-human or greater-than-human intelligence was created or was about to be created. Under those circumstances, introducing specialized processors into the mix would be much riskier than it is now. A discontinuous increase in computational power could mean that AGI capability skips discontinuously over the region that contains the best opportunities to study an AGI and work on its safety.

In diagram form:

I don’t know whether this is what Google was thinking when they decided to invest in TPUs. (It probably wasn’t; gaining a competitive advantage is reason enough.) But it does seem extremely important.

There are a few smaller strategic considerations that also point in the direction of TPUs being a good idea. GPU drivers are extremely complicated, and rumor has it that the code bases of both major GPU manufacturers are quite messy; starting from scratch in a context that doesn’t have to deal with games and legacy code can greatly improve reliability. When AGIs first come into existence, if they run on specialized hardware then their developers won’t be able to increase their power as rapidly by renting more computers, because availability of the specialized hardware will be more limited. Similarly, an AGI acting autonomously won’t be able to increase its power that way either. Datacenters full of AI-specific chips make monitoring easier by concentrating AI development into predictable locations.

Overall, I’d say Google’s TPUs are a very positive development from a safety standpoint.

Of course, there’s still the question of what the heck they actually are, beyond the fact that they’re specialized processors that train neural nets quickly. In all likelihood, many of the gains come from tricks they haven’t talked about publicly, but we can make some reasonable inferences from what they have said.

Training a neural net involves doing a lot of arithmetic with a very regular structure, like multiplying large matrices and tensors together. Algorithms for training neural nets parallelize extremely well; if you double the number of processors working on a neural net, you can finish the same task in roughly half the time, or make your neural net bigger. Prior to 2008 or so, machine learning was mostly done on general-purpose CPUs, i.e., Intel and AMD’s x86 and x86_64 chips. Around 2008, GPUs started becoming less specific to graphics and more general purpose, and today nearly all machine learning is done with “general-purpose GPU” (GPGPU) computing. GPUs can perform operations like tensor multiplication more than an order of magnitude faster than CPUs. Why’s that? Here’s a picture of an AMD Bulldozer CPU which illustrates the problem CPUs have. This is a four-core x86_64 CPU from late 2011.

This is exactly what a GPU is. GPUs only work well on computations with highly regular structure; they handle branches and other control flow poorly, they have comparatively simple instruction sets (and hide that instruction set behind a driver so it doesn’t have to be backwards compatible), and they have predictable memory-access patterns to reduce the need for cache. They spend most of their energy and chip area on arithmetic units that take in very wide vectors of numbers and operate on all of them at once.
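The regular structure described above is easy to see in matrix multiplication itself: each output row depends on only one row of the left-hand matrix, so the rows can be handed to separate workers and the results stitched back together. The sketch below is only an illustration of that independence; the helper names `matmul_rows` and `parallel_matmul` are made up for this example, and Python threads are used for clarity rather than for real speed.

```python
from concurrent.futures import ThreadPoolExecutor

def matmul_rows(a_rows, b):
    """Multiply a block of rows of A by the full matrix B (plain Python lists)."""
    cols = list(zip(*b))  # columns of B
    return [[sum(x * y for x, y in zip(row, col)) for col in cols]
            for row in a_rows]

def parallel_matmul(a, b, workers=2):
    """Split A into row blocks, multiply each block independently, then rejoin."""
    chunk = max(1, -(-len(a) // workers))  # ceiling division
    blocks = [a[i:i + chunk] for i in range(0, len(a), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input blocks
        parts = list(pool.map(lambda blk: matmul_rows(blk, b), blocks))
    return [row for part in parts for row in part]

a = [[1, 2], [3, 4], [5, 6], [7, 8]]
b = [[1, 0, 2], [0, 1, 3]]

# No block needs anything from another block, so the split version
# produces exactly the same product as the single-worker version.
print(parallel_matmul(a, b, workers=2) == matmul_rows(a, b))
```

A GPU exploits the same property in hardware, with thousands of arithmetic units in place of the two workers assumed here.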

But GPUs still retain a lot of computational flexibility that training a neural net doesn’t need. In particular, they work on numbers with varying numbers of digits, which requires duplicating a lot of the arithmetic circuitry. While Google has published very little about their TPUs, one thing they did mention is reduced computational precision.
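To get a feel for why neural-net arithmetic can tolerate reduced precision, here is a minimal sketch that rounds every input of a dot product to 16-bit floating point and compares the result with the full-precision answer. The vector length, value ranges, and random seed are arbitrary assumptions chosen for illustration, and nothing here reflects how a TPU actually does its arithmetic.

```python
import random
import struct

def to_fp16(x):
    """Round a Python float (FP64) to the nearest FP16 value and convert it back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def dot(xs, ys):
    """Plain dot product, accumulated in FP64."""
    return sum(x * y for x, y in zip(xs, ys))

random.seed(0)  # fixed seed so the run is repeatable
weights = [random.uniform(-1, 1) for _ in range(1000)]
inputs = [random.uniform(0, 1) for _ in range(1000)]

exact = dot(weights, inputs)
approx = dot([to_fp16(w) for w in weights], [to_fp16(x) for x in inputs])

# Measure the error against the total magnitude of the terms being summed,
# which stays well defined even if the signed sum happens to be near zero.
scale = dot([abs(w) for w in weights], inputs)
relative_error = abs(approx - exact) / scale
print(f"exact={exact:.6f} approx={approx:.6f} relative_error={relative_error:.2e}")
```

Each FP16 rounding introduces a relative error of at most about one part in two thousand, so the error in the sum stays small next to the quantities involved. This sketch only rounds the inputs; real low-precision hardware also has to decide how precisely to accumulate the sum.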

As a point of comparison, take Nvidia’s most recent GPU architecture, Pascal.

Each SM [streaming multiprocessor] in GP100 features 32 double precision (FP64) CUDA Cores, which is one-half the number of FP32 single precision CUDA Cores.

…

Using FP16 computation improves performance up to 2x compared to FP32 arithmetic, and similarly FP16 data transfers take less time than FP32 or FP64 transfers.

| Format | Bits of exponent | Bits of precision |
| FP16 | 5 | 10 |
| FP32 | 8 | 23 |
| FP64 | 11 | 52 |
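The field widths can be checked directly. The sketch below uses Python’s `struct` module to pack a value into each IEEE 754 format and split the raw bits into sign, exponent, and fraction (the “bits of precision” column). The `FORMATS` table and the `split_fields` helper are names invented for this example; the widths in it are the standard IEEE 754 ones, with one sign bit in every format.

```python
import struct

# name -> (struct format code, total bits, exponent bits, fraction bits)
FORMATS = {
    "FP16": ("e", 16, 5, 10),
    "FP32": ("f", 32, 8, 23),
    "FP64": ("d", 64, 11, 52),
}

def split_fields(value, name):
    """Return the (sign, biased exponent, fraction) bit fields of value."""
    code, total, exp_bits, frac_bits = FORMATS[name]
    raw = int.from_bytes(struct.pack(">" + code, value), "big")
    sign = raw >> (total - 1)
    exponent = (raw >> frac_bits) & ((1 << exp_bits) - 1)
    fraction = raw & ((1 << frac_bits) - 1)
    return sign, exponent, fraction

for name, (_, total, exp_bits, frac_bits) in FORMATS.items():
    # One sign bit plus the exponent and fraction fields fill the whole word.
    assert 1 + exp_bits + frac_bits == total
    print(name, split_fields(-1.5, name))
```

Every extra exponent or fraction bit widens the adders and multipliers that have to process it, which is why dropping to a narrower format saves transistors.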

So a significant fraction of the cores on Nvidia’s GPUs are FP64 cores, which are useless for deep learning. When a Pascal GPU does FP16 operations, it uses an FP32 core in a special mode, which is almost certainly less efficient than using two purpose-built FP16 cores. A TPU can also omit hardware for unused operations like trigonometric functions and probably, for that matter, division. Does this add up to a full order of magnitude? I’m not really sure. But I’d love to see Google publish more details of their TPUs, so that the whole AI research community can make the same switch.
